Discover how banks use AI in banking interactions for fraud detection to spot fake voices and stop scams in seconds.

Akshat Mandloi

Updated on January 19, 2026 at 8:39 AM

You might think most bank-fraud stories begin with a stolen card or a breached database. In 2025, many of the most dangerous scams start with a casual chat: a voice call, a live chat, or a support interaction that feels perfectly normal. According to a global survey by KPMG, 81% of banks are actively trying to identify customers at risk of scams by monitoring interactions that look harmless.

When you look at AI in banking interactions for fraud detection, it’s not about scanning transactions later; it’s about analyzing tone, hesitation, and intent in real time.

Let’s dig into what’s changed, why the conversation matters, and how AI is stepping into that space.

Key Takeaways:

AI in banking interactions for fraud detection shifts the focus from spotting fraud after it happens to stopping it mid-conversation.

Real-time voice and behavioral analytics catch subtle cues, such as hesitation, stress, or voice cloning, before a transaction clears.

Context-aware AI agents analyze tone and phrasing to verify identity and detect manipulation during live calls.

Integrating AI through APIs and SDKs lets banks upgrade prevention systems without rebuilding their entire tech stack.

With solutions like Smallest.ai, banks gain instant, low-latency fraud defense that listens, learns, and reacts faster than fraud can move.

What Is AI Fraud Detection in Banking?

AI fraud detection in banking uses machine-learning models to score transactions and customer interactions for fraud risk as they happen. It turns fraud prevention from a slow, rule-based guessing game into something proactive, adaptive, and almost unnervingly fast. Instead of waiting for fraud to show up in a report, AI watches every interaction, every transaction, and every small behavioral shift, in real time.

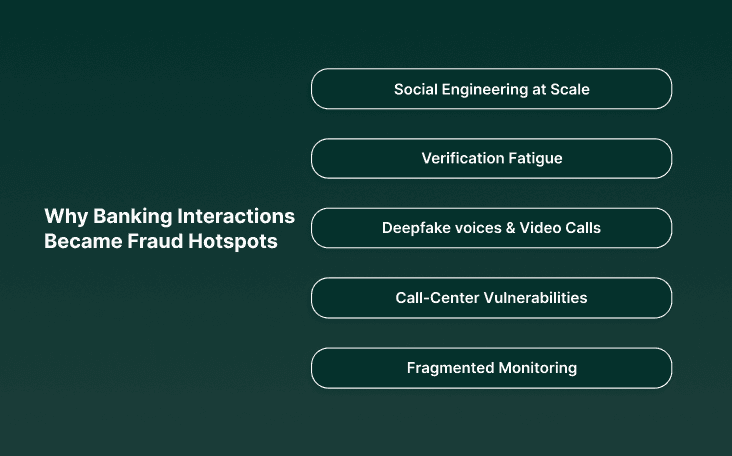

Here’s what’s making interactions the new attack surface:

Social engineering at scale: AI voice cloning and chatbot scripts can mimic legitimate bank reps almost perfectly. Instead of obvious phishing emails, victims increasingly get phone calls that sound official.

Verification fatigue: Customers are tired of multiple security checks and tend to skip or rush through them, giving social engineers room to slip in.

Deepfake voices and video calls: With just a few seconds of recorded speech, scammers can clone a customer’s or employee’s voice to authorize transfers or bypass authentication.

Call-center vulnerabilities: Support staff are trained to be helpful, and fraudsters exploit that. Manipulated tone, urgency, or scripted emotional cues can push even experienced agents to bypass policy.

Fragmented monitoring: Most fraud systems still track transactions, not conversations. That leaves an entire layer of real-time intent and emotion unguarded.

The good news? The same technology fraudsters are using to trick people is now being used to catch them.

How AI Detects And Prevents Fraud In Banking

AI in banking interactions for fraud detection works like an instinct that never sleeps, catching what humans overlook: a tone that doesn’t match the words, a question that feels rehearsed, a customer who suddenly sounds like they’re reading a script.

So how does AI actually read between the lines in a banking conversation? Let’s break down the core systems working together behind the scenes:

Behavioral Signal Tracking

Behavioral signal tracking is about teaching AI to read the rhythm of human interaction, not just the words. It studies tone, pauses, pacing, and stress patterns to understand when someone isn’t acting like themselves. In banking, it’s how AI learns to “hear” discomfort, coercion, or scripted behavior before money ever moves.

How it works:

Captures acoustic features such as pitch contour, speech tempo, energy variance, and micro-pauses across the conversation.

Uses spectrograms and prosodic feature extraction to convert speech dynamics into measurable signals.

Applies recurrent or transformer-based models to analyze time-series changes, spotting shifts in tone or pacing.

Compares each customer’s live call against their historical interaction profile to detect anomalies.

Scores conversational “normalcy” in real time, triggering risk alerts when speech deviates significantly.

Use case:

A customer calls to reset their PIN. The request sounds fine, but the caller’s pace is slightly off, their tone strained, and their pauses unnaturally timed. The AI compares these cues to past verified interactions, detects a deviation score above threshold, and routes the call to manual verification before credentials are reset.
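
The deviation score in this use case can be illustrated with a minimal sketch. It assumes the prosodic features have already been extracted from audio, and it uses a simple per-feature z-score against the customer’s past verified calls in place of the recurrent or transformer models described above. The feature names, the averaging rule, and the threshold of 2.0 are hypothetical choices for illustration.

```python
from statistics import mean, stdev

# Hypothetical prosodic features, assumed to be extracted upstream from audio.
FEATURES = ("pitch_hz", "words_per_min", "mean_pause_s")


def deviation_score(live: dict[str, float], history: list[dict[str, float]]) -> float:
    """Average absolute z-score of a live call against past verified calls.

    Needs at least two historical calls, since stdev() requires two data points.
    """
    z_scores = []
    for name in FEATURES:
        past = [call[name] for call in history]
        mu, sigma = mean(past), stdev(past)
        # A zero spread gives no usable baseline, so treat the feature as non-deviating.
        z_scores.append(abs(live[name] - mu) / sigma if sigma > 0 else 0.0)
    return sum(z_scores) / len(z_scores)


def route_call(live: dict[str, float], history: list[dict[str, float]],
               threshold: float = 2.0) -> str:
    """Send the call to manual verification when the deviation is too large."""
    if deviation_score(live, history) > threshold:
        return "manual_verification"
    return "proceed"


history = [
    {"pitch_hz": 180, "words_per_min": 150, "mean_pause_s": 0.40},
    {"pitch_hz": 185, "words_per_min": 155, "mean_pause_s": 0.45},
    {"pitch_hz": 175, "words_per_min": 145, "mean_pause_s": 0.35},
]
strained = {"pitch_hz": 210, "words_per_min": 120, "mean_pause_s": 0.90}
print(route_call(strained, history))  # manual_verification
```

Averaging z-scores keeps one noisy feature from dominating; a real system would weight features and calibrate the threshold on labeled calls.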

Natural Language Processing (NLP)

NLP lets AI understand the meaning and intent behind words, not just their sequence. In banking fraud detection, it’s what helps systems recognize when a caller or chat message sounds right linguistically but feels wrong contextually, like when a scammer’s phrasing doesn’t match a genuine customer’s typical tone or purpose.

How it works:

Transcribes speech with ASR (Automatic Speech Recognition), or reads chat logs directly, to produce text input.

Applies transformer-based models (like BERT or GPT variants) to analyze semantics, tone, and sentiment in context.

Detects linguistic anomalies, such as overuse of formal language, rushed phrasing, inconsistent pronouns, or unnatural sentence flow.

Cross-references the detected intent against the customer’s current action or history (e.g., someone asking for a fund transfer during a support call).

Flags inconsistencies where what’s being said doesn’t align with why it’s being said.

Use case:

During a customer service chat, an AI model notices that the user’s phrasing and vocabulary suddenly shift from casual conversation to overly formal, script-like language. The NLP layer identifies the mismatch in tone and intent, triggering a verification step before allowing any sensitive account changes.
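
The style-shift check in this use case can be sketched without a transformer model. The toy version below scores each message’s formality from two small hypothetical marker lists and flags the chat when the latest messages are much more formal than the earlier ones. The marker lists, window size, and threshold are assumptions for illustration; a production system would use learned embeddings or a trained classifier instead.

```python
import re

# Illustrative marker lists (assumptions, not a vetted lexicon).
FORMAL_MARKERS = {"kindly", "hereby", "immediately", "verify", "authorize", "request"}
CASUAL_MARKERS = {"hey", "hi", "thanks", "yeah", "ok"}


def formality(message: str) -> float:
    """Share of formal markers minus share of casual markers in one message."""
    words = re.findall(r"[a-z']+", message.lower())
    if not words:
        return 0.0
    formal = sum(word in FORMAL_MARKERS for word in words)
    casual = sum(word in CASUAL_MARKERS for word in words)
    return (formal - casual) / len(words)


def style_shift(messages: list[str], window: int = 2, threshold: float = 0.15) -> bool:
    """Flag when the last `window` messages are much more formal than earlier ones."""
    if len(messages) <= window:
        return False  # not enough earlier messages to form a baseline
    earlier, recent = messages[:-window], messages[-window:]
    baseline = sum(map(formality, earlier)) / len(earlier)
    current = sum(map(formality, recent)) / len(recent)
    return current - baseline > threshold


chat = [
    "hey, thanks for the help yesterday",
    "yeah the app works ok now",
    "Kindly verify and authorize the transfer immediately",
    "I hereby request you verify the new payee",
]
print(style_shift(chat))  # True
```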

Voice Biometrics

Voice biometrics uses the acoustic features that make a person’s speech distinctive, such as pitch, timbre, resonance, and cadence, to verify identity. Think of it as a fingerprint made of sound. In AI-driven fraud detection for banking interactions, the technology checks that the speaker is who they claim to be, even when fraudsters use imitation or voice cloning to break through security.

How it works:

Converts live speech into a voiceprint, a mathematical model of vocal characteristics derived from spectral and frequency analysis.

Compares this live voiceprint against a database of enrolled customer profiles using cosine similarity or deep embedding matching.

Uses neural speaker recognition models (e.g., x-vector or ECAPA-TDNN architectures) for precise speaker verification under background noise or compression.

Detects inconsistencies in micro-features, such as formant transitions or jitter, that deepfake voices often fail to reproduce naturally.

Runs continuously during the call to monitor for mid-interaction changes, like a handoff between fraudsters or synthetic audio injection.

Use case:

A caller requests to update account credentials. The system scores the voice in milliseconds and finds a 72% match, far below the customer’s usual 98%. Before any change is made, the request is frozen and a secondary verification (OTP or callback) is triggered, preventing a deepfake-assisted breach.
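
The accept-or-step-up decision, and the continuous re-checking described in the list above, can be sketched as cosine similarity between speaker embeddings. The sketch assumes a speaker-recognition model (such as an x-vector or ECAPA-TDNN encoder) has already turned audio into fixed-length vectors; the tiny three-dimensional vectors, the 0.85 threshold, and the function names are hypothetical. Cosine similarity is not the same number as the “match percentage” in the use case, which depends on how a given vendor calibrates its scores.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty or silent segment carries no identity signal
    return dot / (norm_a * norm_b)


def verify_speaker(live: list[float], enrolled: list[float],
                   threshold: float = 0.85) -> str:
    """Accept the speaker, or require a step-up check such as an OTP or callback."""
    return "accept" if cosine_similarity(live, enrolled) >= threshold else "step_up"


def first_failing_segment(segments: list[list[float]], enrolled: list[float],
                          threshold: float = 0.85) -> Optional[int]:
    """Re-check identity on each call segment to catch a mid-call speaker change."""
    for index, segment in enumerate(segments):
        if verify_speaker(segment, enrolled, threshold) == "step_up":
            return index
    return None


enrolled = [0.90, 0.10, 0.40]
call_segments = [[0.88, 0.12, 0.41], [0.10, 0.90, 0.20]]
print(first_failing_segment(call_segments, enrolled))  # 1
```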

Anomaly Detection in Call Data

Anomaly detection in call data focuses on identifying interactions that deviate from normal conversational or operational patterns. It’s the layer that listens for what doesn’t belong, such as unusual phrasing, pacing, or metadata shifts that signal something is off, even when the words sound fine.

How it works:

Extracts structured features from call audio and metadata, such as call duration, response latency, speech-to-silence ratio, background noise, and voice amplitude.

Uses unsupervised learning methods like Isolation Forest, Gaussian Mixture Models, or Autoencoders to establish a “normal” pattern for each customer or agent.

Detects outliers in real time by comparing live call vectors to historical baselines.

Flags rapid bursts of speech, uncharacteristic silence gaps, or irregular pitch fluctuations that often accompany coaching or deception.

Integrates contextual metadata, such as IP origin, device ID, and time of day, to distinguish genuine anomalies from routine behavior changes.

Use case:

A bank’s contact center system spots a pattern where a customer’s typical two-minute verification calls suddenly stretch to seven minutes, with long silent pauses and frequent agent interruptions. The anomaly detection model correlates these shifts with recent account-access attempts, flags the pattern as high-risk, and locks further transactions until verified.
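
A per-customer baseline for call data can be sketched with the modified z-score (median and median absolute deviation), a simple robust outlier test. It stands in for the Isolation Forest, Gaussian Mixture, or autoencoder models named above, which would be trained on many more features. The feature names, the 3.5 cutoff (a commonly used value for this statistic), and the rule of treating two or more reasons as high risk are assumptions.

```python
from statistics import median

CALL_FEATURES = ("duration_s", "silence_ratio")  # hypothetical feature names


def modified_z(value: float, baseline: list[float]) -> float:
    """Modified z-score: 0.6745 * (x - median) / MAD, robust to past outliers."""
    med = median(baseline)
    mad = median([abs(x - med) for x in baseline])
    if mad == 0:
        return 0.0 if value == med else float("inf")
    return 0.6745 * (value - med) / mad


def assess_call(call: dict, history: list[dict], known_devices: set[str],
                cutoff: float = 3.5) -> list[str]:
    """Return the reasons a live call looks anomalous for this customer."""
    reasons = []
    for feature in CALL_FEATURES:
        z = modified_z(call[feature], [past[feature] for past in history])
        if abs(z) > cutoff:
            reasons.append(f"{feature} outlier (z={z:.1f})")
    # Contextual metadata helps separate genuine anomalies from routine changes.
    if call["device_id"] not in known_devices:
        reasons.append("unrecognized device")
    return reasons


history = [
    {"duration_s": 120, "silence_ratio": 0.20},
    {"duration_s": 115, "silence_ratio": 0.22},
    {"duration_s": 130, "silence_ratio": 0.18},
    {"duration_s": 125, "silence_ratio": 0.21},
    {"duration_s": 118, "silence_ratio": 0.19},
]
live = {"duration_s": 420, "silence_ratio": 0.55, "device_id": "dev-unknown"}
reasons = assess_call(live, history, known_devices={"dev-123"})
print("high risk" if len(reasons) >= 2 else "normal", reasons)
```

The median-based baseline is a deliberate choice here: one unusually long past call barely moves it, whereas a mean-based baseline would drift toward the outlier.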

Multimodal AI

Multimodal AI brings together different data types, such as voice, text, metadata, and user behavior, to build a complete picture of an interaction. Instead of judging a call on tone, words, or device info alone, it merges them to understand context. In fraud detection, that unified view helps spot inconsistencies that single-channel systems miss.

How it works:

Ingests multiple data streams simultaneously: audio (speech), text (transcripts), metadata (device, IP, location), and behavioral patterns (login timing, click paths).

Converts each input into embedding vectors using separate encoders, for example, an audio encoder for tone features and a text encoder for semantic meaning.

Projects those embeddings into a shared latent space where relationships between signals can be analyzed holistically.

Uses attention-based fusion models to weigh which signals matter most at any given moment, such as tone under stress, a mismatch between location and claimed identity, or a speech pattern inconsistent with typical sessions.

Continuously updates profiles as new interactions and modalities are added, which can improve prediction accuracy over time.

Use case:

During a live verification call, the system analyzes the caller’s voice (audio), their phrasing (text), and session metadata (device ID and IP). The voice sounds authentic, but the IP traces back to a foreign proxy, and the language pattern doesn’t match prior interactions.

The fused model spots the cross-modal mismatch, flags the call as potentially compromised, and triggers manual review before any transfer is approved.
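
The weighting step in this use case can be sketched as softmax-weighted late fusion. This is a deliberate simplification: instead of projecting embeddings into a shared latent space, it assumes each modality already produces a risk score in [0, 1] plus a relevance logit, which a real attention-based model would learn. All names and numbers are hypothetical.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(signals: dict[str, tuple[float, float]]) -> tuple[float, dict[str, float]]:
    """Combine per-modality (risk, relevance_logit) pairs into one risk score.

    Returns the fused risk and the weight each modality received.
    """
    names = list(signals)
    weights = softmax([signals[name][1] for name in names])
    fused_risk = sum(w * signals[name][0] for w, name in zip(weights, names))
    return fused_risk, dict(zip(names, weights))


# Authentic-sounding voice, but text and session metadata look wrong.
risk, weights = fuse({
    "voice": (0.10, 0.0),     # voice matches the enrolled profile
    "text": (0.70, 1.0),      # phrasing differs from prior interactions
    "metadata": (0.90, 2.0),  # IP resolves to a foreign proxy
})
print(round(risk, 2), max(weights, key=weights.get))  # 0.78 metadata
```

Because the weights sum to 1, the fused score stays in [0, 1], and the returned weights show which modality drove the decision, which is useful when a human reviewer picks up the flagged call.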

Knowing how the models work is one thing. Getting them to perform in live banking environments is where the real test begins.

Difference Between Traditional and AI-Powered Fraud Detection

Traditional fraud detection was built on a simple idea: catch what you already know to look for. Today’s fraud doesn’t wait. It adapts, mutates, and tests systems constantly, and attackers don’t just repeat old tricks; they invent new ones on the fly. This is where AI-powered fraud detection takes a fundamentally different path.

To really see how AI transforms fraud detection, it helps to look beyond the usual talking points and compare the deeper differences that actually change outcomes.

| Traditional Fraud Detection | AI-Powered Fraud Detection |
|---|---|
| Relies on static rules written by humans, limited by what teams can imagine or predict. | Learns patterns humans would never think to write rules for, uncovering hidden relationships in data. |
| Treats each event in isolation: transaction-by-transaction analysis with no long-term memory. | Builds a “behavior profile” for every user and device, continuously comparing actions against historical behavior. |
| Flags based on fixed thresholds, often producing clusters of false positives in edge cases. | Recalibrates risk thresholds dynamically based on context, reducing false positives even when patterns shift. |
| Struggles with emerging fraud tactics because updates require long cycles: analysis → rule creation → deployment. | Adapts quickly as new signals appear, updating detection models with far less manual rule-writing. |
| Depends heavily on domain experts to manually interpret anomalies and rewrite rules. | Surfaces high-signal anomalies automatically and can explain the rationale behind detections, reducing analyst load. |
| Focuses on known fraudulent behaviors, limiting visibility into coordinated or networked attacks. | Detects cross-network correlations (coordinated attacks, synthetic identities, shared devices), even when each signal is individually subtle. |
| Operates with a “stop-gap” mindset: blocks suspicious activity after it matches a known pattern. | Operates with a “predictive” mindset: spots unusual intent or sequences of actions before fraud fully unfolds. |
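
The “shared devices” point in the cross-network comparison is a good example of a signal that only appears across accounts. A minimal sketch, assuming login records arrive as (account, device) pairs, groups accounts connected through shared devices with a union-find structure; a large group can point to a fraud ring even if no single login looks suspicious. The record format and the minimum group size of 3 are hypothetical.

```python
from collections import defaultdict


def linked_account_groups(logins: list[tuple[str, str]], min_size: int = 3) -> list[set[str]]:
    """Group accounts connected, directly or transitively, via shared devices."""
    parent: dict[str, str] = {}

    def find(account: str) -> str:
        parent.setdefault(account, account)
        while parent[account] != account:
            parent[account] = parent[parent[account]]  # path halving
            account = parent[account]
        return account

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b

    first_account_on_device: dict[str, str] = {}
    for account, device in logins:
        find(account)  # register every account, even ones on unique devices
        if device in first_account_on_device:
            union(account, first_account_on_device[device])
        else:
            first_account_on_device[device] = account

    groups: dict[str, set[str]] = defaultdict(set)
    for account in list(parent):
        groups[find(account)].add(account)
    return [group for group in groups.values() if len(group) >= min_size]


logins = [("A1", "D1"), ("A2", "D1"), ("A3", "D2"), ("A2", "D2"), ("A4", "D3")]
print(linked_account_groups(logins))
```

Here A1 and A3 never share a device, yet both link to A2, so all three land in one group; that transitive link is exactly what per-transaction rules cannot see.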

Understanding the difference is one thing; seeing how AI actually shows up in real banking environments is another.

Use Cases for AI Fraud Detection in Banking

Fraud in banking doesn’t show up as one big, obvious red flag anymore; it shows up as thousands of tiny signals scattered across accounts, devices, networks, and behaviors. Traditional systems aren't built to connect those signals, but AI is.

Here’s where AI makes the biggest real-world impact.

1. Crypto Tracing

Cryptocurrency moves fast, crosses borders instantly, and often hides behind layers of anonymity. AI helps banks uncover what traditional tools can’t by:

Monitoring blockchain transactions for unusual movement patterns

Detecting rapid or coordinated fund transfers

Tracing stolen or illicit crypto across wallets and exchanges

AI turns decentralized data into actionable intelligence.
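
Tracing funds across wallets is, at its core, a graph walk. The sketch below assumes transfers are already available as (sender, receiver, amount) records and follows them breadth-first from a flagged wallet up to a hop limit, ignoring dust-sized transfers. Real blockchain analytics adds address clustering and exchange attribution on top; the wallet names, hop limit, and dust threshold here are hypothetical.

```python
from collections import defaultdict, deque


def trace_funds(transfers: list[tuple[str, str, float]], source: str,
                max_hops: int = 3, min_amount: float = 0.01) -> dict[str, int]:
    """Return wallets reachable from `source`, mapped to their hop distance."""
    graph: dict[str, list[str]] = defaultdict(list)
    for sender, receiver, amount in transfers:
        if amount >= min_amount:  # skip dust-sized transfers
            graph[sender].append(receiver)

    hops = {source: 0}
    queue = deque([source])
    while queue:
        wallet = queue.popleft()
        if hops[wallet] == max_hops:
            continue  # stop expanding beyond the hop limit
        for receiver in graph[wallet]:
            if receiver not in hops:
                hops[receiver] = hops[wallet] + 1
                queue.append(receiver)
    return hops


transfers = [
    ("W0", "W1", 5.0), ("W1", "W2", 4.9), ("W2", "W3", 4.8),
    ("W3", "W4", 4.7), ("W1", "X", 0.001),
]
print(trace_funds(transfers, "W0"))  # {'W0': 0, 'W1': 1, 'W2': 2, 'W3': 3}
```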

2. Verification Chatbots

AI chatbots are evolving from customer support tools into frontline fraud defenders. They help banks detect bad actors by:

Analyzing language patterns for signs of phishing or impersonation

Flagging unusual conversational cues tied to scam behaviors

Identifying identity thieves based on deviations from typical user behavior

Chat becomes a verification layer, not just a convenience feature.
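
Flagging conversational cues tied to scam behavior can start as simple weighted phrase matching over a chat or call transcript. The phrases and weights below are illustrative assumptions (common elements of impersonation and “safe account” scams), not a vetted rule set; a production chatbot would pair them with the NLP models described earlier.

```python
# Illustrative cue phrases and weights (hypothetical, not a vetted rule set).
CUE_WEIGHTS = {
    "safe account": 0.5,
    "don't tell": 0.4,
    "gift card": 0.4,
    "remote access": 0.4,
    "one-time code": 0.3,
    "urgent": 0.2,
}


def scam_cue_score(transcript: str) -> tuple[float, list[str]]:
    """Return a risk score capped at 1.0 plus the cue phrases that matched."""
    # Normalize case and curly apostrophes so "Don’t tell" matches "don't tell".
    text = transcript.lower().replace("\u2019", "'")
    hits = [cue for cue in CUE_WEIGHTS if cue in text]
    return min(1.0, sum((CUE_WEIGHTS[cue] for cue in hits), 0.0)), hits


score, hits = scam_cue_score(
    "They said it's urgent to move my savings to a safe account and don’t tell the branch."
)
print(score, hits)  # 1.0 ['safe account', "don't tell", 'urgent']
```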

3. Ecommerce Fraud Detection

Online purchases are a common target for fraud, and AI strengthens protection by:

Studying customer behavior and purchase history in real time

Comparing device and location data against known patterns

Flagging transactions that fall outside normal activity

Using computer vision and rule-based checks to identify risky or fake ecommerce websites

AI helps banks protect customers before a fraudulent purchase goes through.
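
The profile comparison described above can be sketched as a few checks on each online purchase. The profile fields, the z-score cutoff of 3.0, and the approve/review/hold mapping are assumptions for illustration; real systems learn these weights from labeled fraud data rather than hard-coding them.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Profile:
    amounts: list[float]  # past purchase amounts
    countries: set[str]   # countries the customer has purchased from
    devices: set[str]     # devices seen on this account


@dataclass
class Purchase:
    amount: float
    country: str
    device_id: str


def purchase_decision(p: Purchase, profile: Profile,
                      z_cutoff: float = 3.0) -> tuple[str, list[str]]:
    """Compare a purchase with the customer's history; return a decision and reasons."""
    flags = []
    mu = mean(profile.amounts)
    sigma = max(pstdev(profile.amounts), 1.0)  # floor avoids dividing by zero
    if (p.amount - mu) / sigma > z_cutoff:
        flags.append("amount far above usual spend")
    if p.country not in profile.countries:
        flags.append("new country")
    if p.device_id not in profile.devices:
        flags.append("new device")
    decision = {0: "approve", 1: "review"}.get(len(flags), "hold")
    return decision, flags


profile = Profile(amounts=[20, 35, 25, 40, 30], countries={"US"}, devices={"phone-1"})
print(purchase_decision(Purchase(900, "RO", "laptop-9"), profile)[0])  # hold
```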

Of course, none of this works without the right tech behind it. Prevention at this scale demands systems that can think, listen, and react in milliseconds, exactly where most traditional setups fall short.

How Smallest.ai Powers Real-Time Fraud Prevention

Most fraud detection tools chase alerts. Smallest.ai builds systems that catch intent, in real time, during the conversation itself.

Its platforms are designed for the moments where banks usually lose visibility: the few seconds between a voice command and a fund transfer, or the short pause before a “yes” on a verification call.

Smallest.ai brings together two core technologies, Waves and Atoms, to create AI that listens, understands, and acts faster than fraud can unfold.

1. Waves

Waves isn’t just a text-to-speech engine; it’s an audio intelligence layer that can generate, analyze, and react to human-like speech on the fly. In the fraud space, that opens up serious possibilities:

Voice Authentication & Anomaly Detection: When a user speaks, Waves can generate acoustic embeddings (digital fingerprints of their voice) that can be compared against the customer’s enrolled voiceprint to flag mismatches.

Real-Time Call Monitoring: In contact centers, Waves enables AI models to listen as conversations happen, flagging speech patterns that deviate from typical customer behavior: rushed tones, unnatural pauses, or inconsistent phrasing.

Training & Simulation: Fraud teams can use Waves to simulate voice scams or deepfake scenarios, training AI agents and analysts to recognize the cues before they hit production systems.

2. Atoms

Atoms is where conversational AI meets operational intelligence. These aren’t just scripted bots; they’re adaptive, low-latency AI agents capable of handling live voice and chat interactions at enterprise scale. For fraud prevention, that adaptability makes all the difference.

Transaction Verification: Atoms can confirm high-risk transactions in real time, calling customers, verifying intent through natural conversation, and spotting hesitation patterns that suggest coercion or impersonation.

Behavioral Monitoring: Each conversation feeds back into a behavioral model, helping detect account takeovers or abnormal engagement patterns before they escalate.

Scalable Response: During large-scale fraud events, Atoms can handle thousands of simultaneous verifications without compromising accuracy or tone, something human teams simply can’t match.

By turning every voice, click, and hesitation into data, Waves and Atoms give banks a defense system that listens, learns, and reacts faster than fraud can unfold.

Conclusion

AI in banking interactions for fraud detection exists because fraud now sounds polite, scripted, and fast, until it isn’t. AI isn’t replacing human judgment; it’s extending it into the milliseconds where fraud hides.

That’s where Smallest.ai changes the equation. Its tech doesn’t wait for proof; it listens for discomfort, inconsistency, and coaching inside every interaction. Smallest.ai turns every call, click, and confirmation into an early warning system, one that protects both the bank and the person on the other end of the line.

Ready to move from reaction to prevention? Book a demo and see how Smallest.ai’s real-time AI makes fraud prevention as natural as the conversation itself.

FAQs

1. How is AI in banking interactions for fraud detection being used today?

AI analyzes live customer interactions — tone, phrasing, hesitation, timing — to identify behavior that doesn’t match a user’s usual pattern. Instead of waiting for a flagged transaction, it catches fraud during the call or chat, in real time.

2. What makes AI-based fraud prevention different from traditional systems?

Traditional systems rely on static rules and after-the-fact alerts. AI models adapt to behavior — learning from context, tone, and conversation flow — allowing banks to stop fraud before a transaction clears.

3. Can AI detect voice deepfakes or impersonation scams?

Yes. AI-driven voice analysis can spot synthetic or cloned voices by studying micro-tremors, pitch variations, and timing inconsistencies that human ears can’t catch. Solutions like Smallest.ai’s Waves are already being used for this.

4. Does AI replace human fraud analysts?

Not at all. AI handles the heavy lifting — analyzing massive volumes of interaction data — while humans focus on complex cases that need judgment, empathy, or investigation. Together, they create a hybrid defense model.

5. How does AI handle privacy and compliance in voice-based systems?

Top-tier systems anonymize and encrypt all voice and text data, keeping personally identifiable information (PII) protected. Platforms like Smallest.ai’s Atoms are built with compliance-first architecture, ensuring every interaction remains auditable and secure.
