Beware of scammers using AI voice cloning! Learn how to identify fake calls, protect sensitive information, and stay safe with practical tips. Stay vigilant!

Akshat Mandloi

Updated on December 26, 2025 at 11:36 AM

Technology is evolving fast, bringing both opportunities and risks. One of the most alarming threats today is the AI voice cloning scam. Scammers now use advanced tools to replicate voices, making fake calls sound more convincing than ever.

AI voice cloning has made it easy for scammers to replicate voices, a task that once required expensive software. This poses a serious threat to personal security, businesses, and content creators, as fraudsters can impersonate executives or brands, causing financial loss and reputational damage.

In an April 2023 survey of internet users in the U.S., 14% of respondents said they had experienced an AI voice cloning scam firsthand. Even more alarming, 18% reported that it had happened to someone they knew. These numbers show just how widespread and dangerous this scam has become.

In this blog, we’ll explore how scammers use voice cloning, how to recognize fake calls, and, most importantly, how to protect yourself from falling victim.

The Rise of Scammers Using AI Voice Cloning Technology

AI voice cloning isn’t inherently bad—it’s a groundbreaking technology that has transformed industries like content creation, customer service, and accessibility tools.

Content creators use AI voices for audiobooks, podcasts, and video narration.

Businesses leverage AI-powered IVR systems and chatbots to provide automated yet human-like customer interactions.

Assistive technology uses AI speech synthesis to help individuals with speech impairments communicate more effectively.

But just like deepfake videos, AI voice cloning can also be weaponized.

Scammers no longer need to hack your bank account to commit fraud. Instead, they can clone a voice, trick a bank representative, and authorize a transfer in your name.

In the past, voice deepfakes required sophisticated AI knowledge and expensive tools. Today, publicly available voice cloning software allows for the generation of fake voices within minutes, and criminals are taking advantage of this.

How Scammers Misuse AI Voice Cloning

Cybercriminals are exploiting AI voice cloning. Scammers steal and manipulate voice samples to:

Impersonate business executives to authorize fake transactions.

Bypass customer verification in call centers and IVR systems.

Mimic public figures and influencers to spread misinformation or scams.

Manipulate families and employees using fake distress calls.

With AI cloning technology improving rapidly, this problem will only grow. The question isn’t whether scams will happen—it’s whether you’re prepared to recognize and stop them.

Real-Life Scam Examples

Imagine receiving an urgent call from your CEO asking you to approve a transaction. The voice sounds authentic, carrying the same tone and urgency they usually have. But it’s an AI voice cloning scam: an AI-generated fake designed to defraud the company.

In a real-world case, Jennifer DeStefano, an Arizona mother, received a call from an unfamiliar number. To her horror, she heard what sounded exactly like her 15-year-old daughter crying and pleading, claiming she had been kidnapped.

The caller demanded a $1 million ransom. Terrified, Jennifer was convinced it was her daughter's voice. However, it was later revealed that scammers had used advanced technology to clone her daughter's voice, creating a convincing fake to manipulate her.

Techniques Used by Scammers

Fraudsters don't need much to create realistic fake voices. They often:

Scrape social media for publicly available voice clips.

Record voicemails or public speeches from podcasts, webinars, or interviews.

Use AI-powered speech synthesis tools to manipulate and recreate a person’s voice.

Once they clone a voice, scammers generate fake distress calls, impersonate employees, or trick people into financial transactions.

Recognizing AI-Generated Calls

AI voice cloning scams are designed to sound real, making them more dangerous than traditional phishing calls. But even though these scams are more advanced, they still have weak spots that can help you detect them.

Here’s how to tell if you’re speaking to an AI-generated voice instead of a real person:

Technical Signs of AI-Generated Calls

While AI voice cloning is sophisticated, there are still signs that can help you spot a fake call:

Robotic or unnatural pauses: AI-generated calls may have slight delays between words or sentences.

Inconsistent emotions: The voice might sound flat or lack natural emotional changes.

Repetitive phrases: AI might reuse the same expressions unnaturally.
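If you have a written transcript of a suspicious call or voicemail, the "repetitive phrases" sign can be checked roughly in code. The sketch below is only an illustration: the function name `repeated_phrases`, the three-word phrase length, and the sample text are assumptions made for this example, and real people repeat themselves too, so a match is a hint rather than proof of an AI-generated voice.

```python
import re
from collections import Counter


def repeated_phrases(transcript: str, n: int = 3, min_count: int = 2) -> dict[str, int]:
    """Return every n-word phrase that appears at least min_count times."""
    # Lowercase and keep only words so punctuation does not hide repeats.
    words = re.findall(r"[a-z']+", transcript.lower())
    # Slide a window of n words across the transcript.
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return {phrase: count for phrase, count in counts.items() if count >= min_count}


# Hypothetical transcript, invented for illustration.
sample = (
    "I need help right now. Please send the money today. "
    "I need help right now. Please send the money today."
)

if __name__ == "__main__":
    for phrase, count in repeated_phrases(sample).items():
        print(f"{count}x  {phrase}")
```

Treat the result as one more reason to hang up and verify, not as a detector you can rely on by itself.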

Verification Techniques

When you receive an unexpected call asking for money or sensitive information:

Hang up and call back on a known number.

Ask the caller something only they would know.

Use a pre-agreed “safe word” with close friends and family to verify identity.

Common Scam Call Patterns

Scammers rely on emotional manipulation. Watch out for these scenarios:

A distress call from a “family member” claiming they need urgent help.

A “colleague” requesting sensitive business information.

A supposed authority figure, like a police officer or bank representative, asking for immediate action.

The Impact of Voice Cloning Scams on Various Groups

Scammers using AI voice cloning impact more than just individuals. In fact, they create serious challenges for content creators, businesses, and developers who rely on voice technology. These scams can damage trust and disrupt operations across industries.

Impact on Content Creators and Media Professionals

For podcasters, audiobook publishers, and video producers, voice cloning is a valuable tool. However, when scammers misuse this technology, it can lead to reputational damage. As a result, audiences may start questioning the authenticity of the content they consume.

Impact on Businesses and Customer Support Teams

Businesses that rely on voice for customer support are at high risk. Scammers, for example, can impersonate company representatives and trick customers into revealing personal information. This can result in data breaches and a loss of trust from customers.

Impact on Developers and AI Engineers

Scams pose unique challenges for developers integrating voice tech into apps. As cloning tools improve, it's vital to use platforms that prioritize security and ethical practices. Without proper safeguards, user trust can be easily compromised.

Emotional and Financial Impact on Individuals

Voice cloning scams can cause emotional distress and financial loss. For instance, fake distress calls from loved ones can lead to panic and rushed decisions. Moreover, victims often feel betrayed, even after discovering the call was fake.

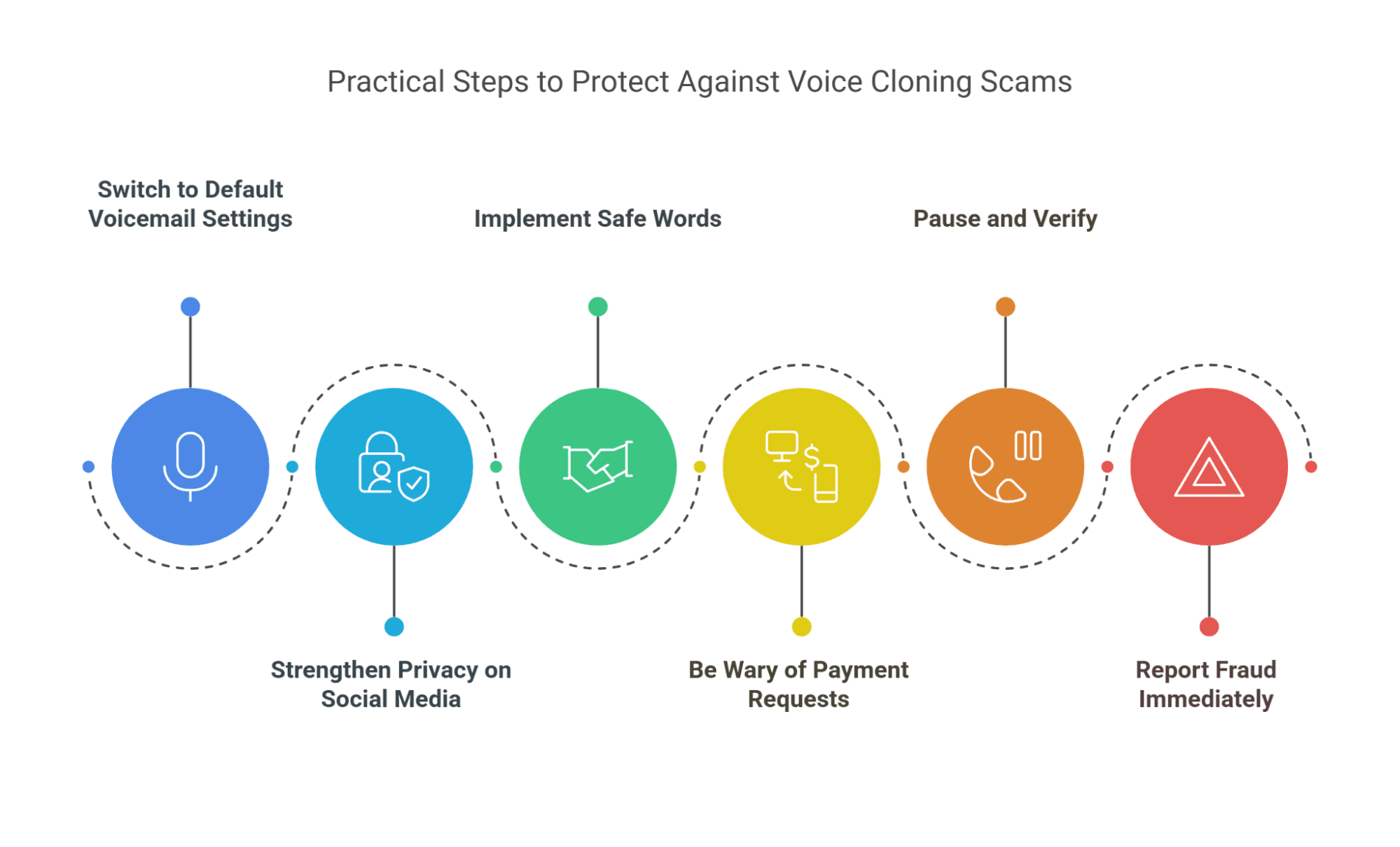

Practical Steps to Protect Against Voice Cloning Scams

Now that we’ve discussed the risks, let’s explore some proactive measures to protect individuals. Simple changes in how you manage your personal information can make a big difference in staying safe from these threats.

Follow these steps to reduce your risk and stay one step ahead of scammers.

Switch to Default Voicemail Settings

Your personalized voicemail greeting can be an easy target for scammers looking to clone your voice. Even a short greeting is enough for them to create convincing fake calls. To reduce this risk:

Use a generic voicemail message instead of recording your voice.

Avoid leaving long voicemails that scammers could potentially record and misuse.

Strengthen Privacy on Social Media

Social media platforms are a goldmine for scammers looking for voice samples. Videos, stories, and even casual voice notes can be harvested without your knowledge. To protect yourself:

Set your social media profiles to private.

Limit the sharing of videos or voice recordings publicly.

Be cautious when using voice notes in forums or groups where you don’t know all participants.

Implement Family or Workplace ‘Safe Words’

A simple code word can be the difference between falling for an AI voice cloning scam and staying safe. This method is especially effective against fake distress calls claiming to be from someone you trust. Here’s how to do it:

Share a unique safe word with family, friends, or coworkers.

If you receive a distress call, ask for this safe word to confirm it’s truly them.
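For a workplace that wants to build the safe word check into an internal tool, such as a help desk screen, the idea can be sketched in a few lines. This is a minimal example under stated assumptions, not a production design: the function names `enroll_safe_word` and `check_safe_word`, the normalization rule, and the iteration count are all choices made for illustration. It stores a salted hash instead of the safe word itself and compares in constant time using Python's standard `hashlib` and `hmac` modules.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor, chosen for illustration


def _normalize(word: str) -> str:
    """Ignore case and extra spaces so 'Blue  Heron' matches 'blue heron'."""
    return " ".join(word.lower().split())


def enroll_safe_word(word: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) to store in place of the plain safe word."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", _normalize(word).encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def check_safe_word(spoken: str, salt: bytes, digest: bytes) -> bool:
    """Check what the caller said against the stored salt and digest."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", _normalize(spoken).encode("utf-8"), salt, ITERATIONS
    )
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(candidate, digest)


if __name__ == "__main__":
    salt, digest = enroll_safe_word("Blue Heron")  # hypothetical safe word
    print(check_safe_word("blue heron", salt, digest))
    print(check_safe_word("red heron", salt, digest))
```

For families, none of this is needed: agreeing on the word in person and never posting it online does the same job.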

Be Wary of Unsolicited Payment Requests

Scammers use AI voice cloning to create a false sense of urgency and trick victims into sending money without thinking twice. If you receive an unexpected request for payment, keep these precautions in mind:

Never transfer funds without verifying the request through another communication channel.

Use secure payment methods like credit cards, which offer better fraud protection.

Be extra cautious with requests involving gift cards, wire transfers, or cryptocurrency—these are common tools in scams.
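Teams that process payments can turn the precautions above into a simple screening step. The sketch below is a hedged illustration only: the `PaymentRequest` fields, the method labels, and the helper names `red_flags` and `must_hold` are hypothetical and would need to match your own workflow.

```python
from dataclasses import dataclass

# Payment methods the precautions above call out as common in scams.
HIGH_RISK_METHODS = {"gift_card", "wire_transfer", "cryptocurrency"}


@dataclass
class PaymentRequest:
    method: str                       # e.g. "credit_card", "gift_card"
    unsolicited: bool                 # did the request arrive unexpectedly?
    verified_on_second_channel: bool  # confirmed via a known number or in person?


def red_flags(req: PaymentRequest) -> list[str]:
    """List the warning signs present in a payment request."""
    flags = []
    if req.unsolicited:
        flags.append("request was unexpected")
    if not req.verified_on_second_channel:
        flags.append("not verified through another communication channel")
    if req.method in HIGH_RISK_METHODS:
        flags.append(f"high-risk payment method: {req.method}")
    return flags


def must_hold(req: PaymentRequest) -> bool:
    """Never transfer funds until the request has been verified elsewhere."""
    return not req.verified_on_second_channel


if __name__ == "__main__":
    request = PaymentRequest("gift_card", unsolicited=True,
                             verified_on_second_channel=False)
    print("Hold payment:", must_hold(request))
    for flag in red_flags(request):
        print("-", flag)
```

The rule in `must_hold` is deliberately strict: whatever the voice on the phone says, the payment waits until someone confirms it through a separate, trusted channel.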

Pause and Verify

The key to avoiding scams is to stay calm and think critically, especially when faced with unexpected requests. Before taking any action, follow these steps to verify the authenticity of the call:

Take a moment to pause and think.

Hang up and call back using a verified number from your contacts or the official website.

Avoid responding to requests from unknown numbers or suspicious emails and texts.

Report Fraud Immediately

If you suspect you’ve been targeted by scammers using AI voice cloning, acting quickly can help limit the damage. Here’s what you should do immediately if you encounter a voice cloning scam:

Contact the Federal Trade Commission (FTC) to file a complaint.

Notify your bank if personal information was shared. If your bank has issued an AI voice cloning scam warning to protect customers, follow its guidance carefully.

Inform friends and family so they can stay alert to similar tactics.

By adopting the right security practices, you can stay ahead of scammers. To enhance your protection, you can also sign up for various tools that help safeguard your data and privacy.

Ethical and High-Quality AI Voice Cloning with Smallest.ai

As AI voice cloning technology advances, businesses and creators need tools that offer hyper-realistic voices while being adaptable, secure, and responsible. Smallest.ai focuses on providing businesses, developers, and content creators with secure, high-quality AI voice synthesis solutions that prioritize responsible usage and user protection.

Why Choose Smallest.ai for AI Voice Cloning?

Smallest.ai is a cutting-edge AI-powered voice generation platform that delivers studio-quality text-to-speech (TTS) and instant voice cloning. It is designed to help businesses and creators scale their voice-based interactions responsibly—whether for content creation, customer support automation, or virtual assistants.

Key Features

Waves - AI Voice Cloning & TTS: Create ultra-realistic AI voices that sound natural, expressive, and engaging.

Instant Voice Cloning: Generate a high-quality voice clone with just 5–10 seconds of recorded speech.

100+ AI Voices, 30+ Languages: Supports a diverse range of voices and languages, ensuring global accessibility.

Real-Time AI Processing: Sub-100ms latency allows for instant, human-like voice generation—ideal for IVR, virtual assistants, and AI-driven customer interactions.

Customizable API for Developers: Provides Python SDK & API access for seamless integration into applications, chatbots, and automated voice services.

Who Can Benefit from Smallest.ai?

Businesses: Improve customer service automation with human-like AI voices.

Developers: Integrate real-time voice synthesis into chatbots, apps, and AI tools.

Content Creators: Produce studio-grade voiceovers for videos, audiobooks, and podcasts.

E-learning & Accessibility: Enhance educational platforms with multi-language AI narration and assistive voice technology.

Conclusion

Voice cloning is a powerful technology with incredible potential for good. However, in the wrong hands, it becomes a dangerous tool for fraud. By understanding how scammers are using AI voice cloning, recognizing the signs of fake calls, and taking proactive steps to protect yourself, you can stay one step ahead.

As this technology continues to evolve, individuals and businesses must remain vigilant. Ultimately, the key is to protect yourself and help build awareness within your community. The more people understand these threats, the harder it becomes for scammers to succeed. Therefore, stay informed, stay skeptical, and educate those around you about these emerging threats.

When choosing an AI voice cloning provider, it's important to select platforms that prioritize quality, responsible deployment, and flexible customization—all of which Smallest.ai delivers.

💡 Want to explore high-quality AI voices? Try Smallest.ai today and experience fast, natural voice cloning for your business or creative projects.