Unlock AI fraud detection techniques for 2025. Monitor transactions in real-time, spot anomalies, and protect identities. Boost security now!

Akshat Mandloi

Updated on January 19, 2026 at 8:39 AM

You probably think fraud is something only big banks worry about. Spoiler: you’re wrong. In 2024 alone, consumer-fraud losses jumped 25%, hitting more than $12.5 billion. And when companies look inside, one study found they lost an average of 7.7% of annual revenue to fraud last year.

So when we talk about AI fraud detection techniques in 2025, this isn’t just tech jargon; it’s a survival play. Fraudsters are getting smarter, faster, and worst of all, quieter.

In this article, you’ll find what those techniques actually look like in action, why the usual rules no longer cut it, and how you can keep up before fraud shows up uninvited.

In a Nutshell:

AI techniques like anomaly detection, GNNs, and hybrid deep-learning models now power real-time fraud prevention.

Success depends on low latency, clean data pipelines, explainable decisions, and continuous model updates.

Smallest.ai’s Waves and Atoms bring real-time voice intelligence and adaptive AI agents into fraud defense, where milliseconds matter.

The future of AI fraud detection techniques in 2025 isn’t about reacting after the fact; it’s about predicting and stopping it before it happens.

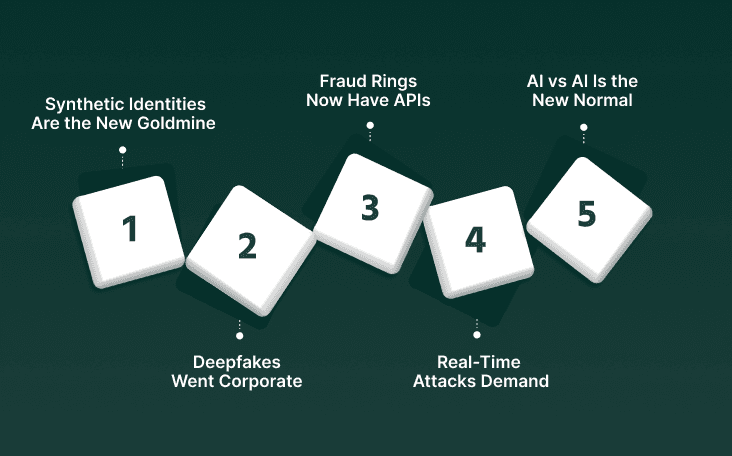

What Fraud Looks Like in 2025

You’d think with all the new security tools, fraud would be shrinking by now. It’s not. It’s mutating. What used to take a scammer hours can now be done by an AI model in seconds. Fake voices, cloned IDs, synthetic accounts: they all blend into the noise until it’s too late.

So how exactly are these scams slipping through the cracks? Let’s break down what’s driving the new wave of fraud in 2025:

1. Synthetic Identities Are the New Goldmine

Fraudsters don’t steal your data anymore; they build brand-new people from scraps of it. A real SSN here, a fake address there, and suddenly there’s a “customer” with spotless credit.

These identities can remain active for months before anyone catches on, quietly accumulating debt and disappearing before the system notices.

2. Deepfakes Went Corporate

AI voice and video tools aren’t just for viral memes. Scammers are using cloned voices to pass phone verifications, fake Zoom calls, and even entire investor presentations.

The scariest part? Most fraud-detection systems weren’t built to tell a human from a near-perfect synthetic voice.

3. Fraud Rings Now Have APIs

Gone are the messy, disorganized fraud groups. In 2025, we’re talking organized, scalable, code-driven crime. Fraud-as-a-Service platforms sell pre-trained bots that can test stolen cards, create fake accounts, and even mimic spending behavior, all on autopilot.

4. Real-Time Attacks Demand Real-Time Defense

Fraud doesn’t happen overnight anymore; it happens in milliseconds. Every transaction, login, and voice call can be gamed the moment it happens. If your fraud system isn’t catching it as it happens, it’s already too late.

5. AI vs AI Is the New Normal

The bad actors are training their own models too. They study your fraud filters, learn what gets flagged, and tweak just enough to sneak past. It’s a constant arms race, and the smarter model wins.

The good news? AI’s just as relentless on the defense side. The tools are faster, sharper, and finally getting smart enough to catch what humans can’t.

AI Fraud Detection Techniques in 2025

You can’t outsmart fraud with static rules anymore. Fraud in 2025 moves too fast, learns too quickly, and hides too well. The only way to keep up is to fight algorithms with smarter algorithms.

But here’s the twist: AI isn’t just spotting fraud anymore. It’s predicting it. It knows when a transaction feels off before the data even screams “fraud.” So what does that actually look like in practice?

Let’s break down the AI fraud detection techniques in 2025:

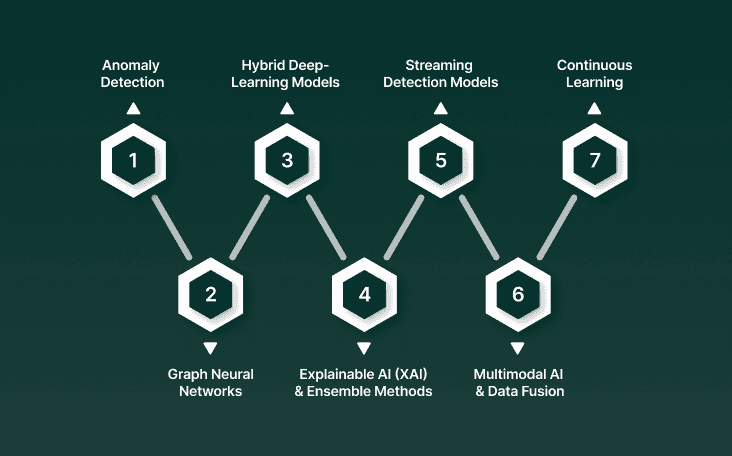

1. Anomaly Detection & Behavioral Analytics

Anomaly detection is the process of identifying data points or actions that deviate from an established norm. In fraud detection, this means teaching algorithms what “normal” looks like, then catching the moments when something quietly breaks that pattern.

Behavioral analytics adds another layer, analyzing how users interact, not just what they do. Together, they turn every click, call, and transaction into a behavioral fingerprint.

How it works in fraud detection:

Baseline modeling: The system uses unsupervised learning or statistical profiling (like Gaussian mixture models, k-means clustering, or autoencoders) to build a behavioral baseline for each entity: user, device, or account.

Feature extraction: It captures multidimensional signals such as device type, geolocation, transaction frequency, session duration, and even voice features like tone and cadence.

Deviation scoring: Every new event is scored against the baseline using a distance or likelihood measure (Mahalanobis distance, cosine distance, or the event’s probability under the baseline model). Higher deviation = higher fraud likelihood.

Adaptive thresholds: Instead of fixed rules, thresholds shift dynamically based on recent user behavior and population trends, reducing false positives.

Feedback loop: Confirmed fraud cases are fed back into the model, letting it refine what “normal” and “abnormal” mean over time.
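
The scoring steps above can be sketched in a few lines. This is a minimal illustration, not a production design: it assumes each event is a short numeric feature vector, it uses a Mahalanobis distance with a diagonal covariance (features treated as independent), and the class names `UserBaseline` and `AdaptiveThreshold`, the window size, and the sample values are all hypothetical.

```python
import math
from collections import deque
from statistics import mean, pstdev


class UserBaseline:
    """Per-user baseline: mean and standard deviation of each numeric feature."""

    def __init__(self, history):
        # history: list of feature vectors from past, trusted events
        columns = list(zip(*history))
        self.means = [mean(c) for c in columns]
        # Floor the spread so a constant feature cannot cause division by zero
        self.stds = [max(pstdev(c), 1e-6) for c in columns]

    def deviation(self, event):
        """Mahalanobis distance under a diagonal covariance assumption."""
        return math.sqrt(sum(((x - m) / s) ** 2
                             for x, m, s in zip(event, self.means, self.stds)))


class AdaptiveThreshold:
    """Flags scores above a high quantile of recently seen scores."""

    def __init__(self, window=500, quantile=0.99, floor=3.0):
        self.recent = deque(maxlen=window)
        self.quantile = quantile
        self.floor = floor  # the cutoff never drops below this value

    def is_anomalous(self, score):
        cutoff = self.floor
        if self.recent:
            ranked = sorted(self.recent)
            idx = min(int(self.quantile * len(ranked)), len(ranked) - 1)
            cutoff = max(ranked[idx], self.floor)
        self.recent.append(score)
        return score > cutoff


# Hypothetical history: [transaction amount, hour of day]
baseline = UserBaseline([[40, 9], [55, 10], [60, 9], [45, 11], [50, 10]])
threshold = AdaptiveThreshold()

normal_score = baseline.deviation([52, 10])
suspicious_score = baseline.deviation([900, 3])
flags = [threshold.is_anomalous(normal_score),
         threshold.is_anomalous(suspicious_score)]
```

A fuller version would also feed confirmed fraud labels back in, for example by excluding confirmed-fraud events from the history used to rebuild the baseline.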

Use Case:

Say a customer logs into their banking app from a new device and immediately tries to move funds overseas. The anomaly detection model compares this behavior against the user’s historical profile: usual device, login time, transaction size, and destination.

The behavioral analytics layer kicks in next, checking session speed, cursor movement, and even typing rhythm to confirm it’s not the legitimate user. All of that happens before the transfer clears, in milliseconds.

2. Graph Neural Networks (GNNs)

Fraud doesn’t always hide in a single transaction; it hides in the connections between them. Graph Neural Networks (GNNs) are built to detect exactly that. Instead of treating users, devices, and transactions as isolated data points, GNNs look at how everything is connected.

How it works in fraud detection:

Graph construction: Every entity (user, device, account, transaction) becomes a node. The relationships between them (shared IPs, payment methods, shipping addresses, voiceprints) form the edges of a graph.

Embedding generation: The GNN converts each node and edge into numerical embeddings that capture both the attributes of the node and its surrounding network context.

Message passing: At each layer, nodes exchange information with their neighbors, allowing the model to learn how behavior propagates across connected entities. For example, if several linked accounts are flagged as fraudulent, related nodes pick up part of that suspicion in their own scores.

Fraud propagation detection: The network learns complex relational patterns, such as clusters of accounts opening in the same minute or using overlapping devices, that may indicate organized fraud rings.

Real-time scoring: When new transactions or connections appear, the system updates embeddings and risk scores in real-time, enabling it to detect ring-based fraud as it forms.
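
To make the message-passing idea concrete, here is a deliberately simplified sketch. It is not a trained GNN: there are no learned weights or embeddings, only a fixed rule where each node mixes its own suspicion score with the mean of its neighbors' scores. The graph, the node names, and the `self_weight` parameter are assumptions made for illustration.

```python
def message_passing_round(adjacency, scores, self_weight=0.5):
    """One round: each node blends its own score with its neighbors' mean score."""
    updated = {}
    for node, neighbors in adjacency.items():
        if neighbors:
            neighbor_mean = sum(scores[n] for n in neighbors) / len(neighbors)
        else:
            neighbor_mean = scores[node]
        updated[node] = self_weight * scores[node] + (1 - self_weight) * neighbor_mean
    return updated


# Three accounts share one device; a fourth account is unrelated.
adjacency = {
    "acct_a": ["device_1"],
    "acct_b": ["device_1"],
    "acct_c": ["device_1"],
    "device_1": ["acct_a", "acct_b", "acct_c"],
    "acct_d": ["device_2"],
    "device_2": ["acct_d"],
}
# acct_a and acct_b are confirmed fraud; everything else starts clean.
scores_0 = {node: 0.0 for node in adjacency}
scores_0["acct_a"] = 1.0
scores_0["acct_b"] = 1.0

scores_1 = message_passing_round(adjacency, scores_0)
scores_2 = message_passing_round(adjacency, scores_1)
```

After two rounds, `acct_c` has picked up some suspicion through the shared device, while the unconnected `acct_d` stays at zero. A real GNN layer replaces the fixed blend with learned transformations of full feature vectors.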

Use Case:

A payment platform notices multiple new accounts using different names but sharing the same device ID and IP range. On their own, none of these accounts seems suspicious. But once represented as a graph, the GNN spots a dense cluster of shared connections, the telltale structure of a coordinated fraud ring.

It flags the group instantly, cutting off the activity before it scales.
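
The graph-construction step in this use case can be approximated without any neural network at all. The sketch below groups accounts that share a device ID or IP range using a union-find structure, which is plain graph clustering rather than a GNN. The field names (`device_id`, `ip_range`), the sample accounts, and the `min_size` cutoff are hypothetical.

```python
from collections import defaultdict


def find_rings(accounts, min_size=3):
    """Group accounts linked by a shared device ID or IP range."""
    parent = {a["id"]: a["id"] for a in accounts}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps lookups short
            x = parent[x]
        return x

    def union(x, y):
        parent[find(x)] = find(y)

    first_seen = {}  # maps each device or IP key to the first account that used it
    for a in accounts:
        for key in (("device", a["device_id"]), ("ip", a["ip_range"])):
            if key in first_seen:
                union(a["id"], first_seen[key])
            else:
                first_seen[key] = a["id"]

    groups = defaultdict(list)
    for a in accounts:
        groups[find(a["id"])].append(a["id"])
    return [sorted(g) for g in groups.values() if len(g) >= min_size]


accounts = [
    {"id": "u1", "device_id": "D1", "ip_range": "10.0.1"},
    {"id": "u2", "device_id": "D1", "ip_range": "10.0.2"},  # shares a device with u1
    {"id": "u3", "device_id": "D2", "ip_range": "10.0.2"},  # shares an IP range with u2
    {"id": "u4", "device_id": "D9", "ip_range": "192.168.5"},
]
rings = find_rings(accounts)
```

Here `u1`, `u2`, and `u3` end up in one group even though `u1` and `u3` share nothing directly. A GNN would go further by scoring such clusters using node attributes as well as structure.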

3. Hybrid Deep-Learning Models

Fraud rarely looks the same twice, which is why relying on one type of neural network isn’t enough. Hybrid deep-learning models combine multiple architectures, like CNNs, RNNs, Transformers, and Autoencoders, to capture both sequential behavior and contextual relationships.

How it works in fraud detection:

Multi-architecture setup: A hybrid model might use an RNN or LSTM to track temporal sequences (like transaction timing), a CNN to extract local patterns from tabular or image-based data (like document scans), and a Transformer or attention layer to weigh contextual importance across signals.

Feature fusion: Outputs from these networks are fused into a unified representation, giving the model a holistic view of both short-term anomalies and long-term behavioral shifts.

Autoencoder integration: Autoencoders compress normal behavior into a latent space and flag inputs with high reconstruction error, indicating unfamiliar, potentially fraudulent behavior.

Adaptive training: The hybrid setup allows models to handle structured, semi-structured, and unstructured data simultaneously, from transaction logs and metadata to voice transcripts or chat messages.

Continuous learning: Feedback from fraud analysts and new data points retrain parts of the network incrementally, keeping it aligned with fast-changing fraud tactics.
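
The reconstruction-error idea behind the autoencoder step can be shown with a much simpler stand-in. The sketch below fits a one-unit linear encoder and decoder by finding the top principal direction of the data with power iteration, which is the subspace an optimal linear autoencoder would learn. A real deployment would train a nonlinear network; the feature values here are made up for illustration.

```python
import math


def fit_linear_autoencoder(rows, iterations=100):
    """Return feature means and the top principal direction of the data."""
    dims = len(rows[0])
    means = [sum(r[j] for r in rows) / len(rows) for j in range(dims)]
    centered = [[r[j] - means[j] for j in range(dims)] for r in rows]
    # Covariance matrix of the centered data
    cov = [[sum(c[i] * c[j] for c in centered) / len(rows) for j in range(dims)]
           for i in range(dims)]
    # Power iteration converges to the dominant eigenvector of the covariance
    direction = [1.0] * dims
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * direction[j] for j in range(dims)) for i in range(dims)]
        norm = math.sqrt(sum(v * v for v in nxt))
        direction = [v / norm for v in nxt]
    return means, direction


def reconstruction_error(row, means, direction):
    centered = [x - m for x, m in zip(row, means)]
    code = sum(c * d for c, d in zip(centered, direction))  # encode to one number
    rebuilt = [code * d for d in direction]                  # decode back
    return math.sqrt(sum((c - r) ** 2 for c, r in zip(centered, rebuilt)))


# Hypothetical normal behavior: two features that rise together
normal_rows = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]]
means, direction = fit_linear_autoencoder(normal_rows)

typical_error = reconstruction_error([3.5, 7.0], means, direction)
odd_error = reconstruction_error([3.5, 1.0], means, direction)
```

The typical point lies close to the learned pattern and reconstructs almost perfectly; the odd point breaks the usual relationship between the two features and produces a much larger error, which is the signal an autoencoder-based detector thresholds on.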

Use Case:

An online marketplace starts seeing sudden bursts of high-value orders followed by rapid refund requests. A hybrid model processes this in layers: the RNN picks up on the suspicious timing sequence, the CNN identifies similarities in device fingerprints, and the Transformer layer correlates user behavior across sessions.

Combined, they expose a coordinated refund fraud pattern before the next wave hits.

4. Explainable AI (XAI) & Ensemble Methods

It’s one thing for an AI model to flag fraud. It’s another to explain why. In financial and regulatory settings, that difference matters a lot. Explainable AI (XAI) makes fraud detection transparent by showing which features or signals influenced a decision.

How it works in fraud detection:

Model stacking: Different algorithms, like gradient boosting, random forests, and neural networks, are trained on the same data, and their predictions are aggregated (using techniques like weighted averaging or meta-learners) for a stronger overall decision.

Feature importance mapping: XAI frameworks such as SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-Agnostic Explanations) identify which features (e.g., device ID, transaction velocity, login location) contributed most to a fraud score.

Decision transparency: These insights allow analysts and regulators to trace every fraud flag back to a logical explanation, building trust and compliance readiness.

Error calibration: Ensemble models learn from each other’s mistakes. When one algorithm overfits to noise, another model corrects the bias, stabilizing overall predictions.

Real-time interpretability: Modern systems pair streaming fraud detection with instant interpretability dashboards, helping teams understand anomalies as they happen, not after losses occur.
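
Two of the steps above, weighted averaging and feature attribution, are easy to sketch for the simplest case. The code below does not use the SHAP or LIME libraries. It assumes a linear risk score, for which each feature's contribution can be written as its weight times its distance from a baseline value. All model names, weights, and feature values are hypothetical.

```python
def weighted_ensemble(predictions, weights):
    """Weighted average of per-model fraud probabilities."""
    total = sum(weights[name] for name in predictions)
    return sum(predictions[name] * weights[name] for name in predictions) / total


def linear_attributions(weights, features, baseline):
    """Contribution of each feature to a linear score, relative to a baseline."""
    return {name: weights[name] * (features[name] - baseline[name]) for name in weights}


def as_shares(contributions):
    """Normalize absolute contributions so they sum to 1."""
    total = sum(abs(v) for v in contributions.values())
    return {name: abs(v) / total for name, v in contributions.items()}


# Hypothetical model outputs for one transaction
ensemble_score = weighted_ensemble(
    {"gradient_boosting": 0.9, "random_forest": 0.8, "neural_net": 0.7},
    {"gradient_boosting": 0.5, "random_forest": 0.3, "neural_net": 0.2},
)

# Hypothetical linear explainer over three standardized features
contributions = linear_attributions(
    weights={"velocity": 0.31, "device_mismatch": 0.25, "session_time": 0.13},
    features={"velocity": 2.0, "device_mismatch": 1.0, "session_time": 1.0},
    baseline={"velocity": 0.0, "device_mismatch": 0.0, "session_time": 0.0},
)
shares = as_shares(contributions)
```

With these made-up inputs the shares come out near 62%, 25%, and 13%, the kind of breakdown an analyst dashboard would display. For tree ensembles or neural networks, attribution needs a dedicated explainer rather than this linear shortcut.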

Use Case:

A global payment processor flags a batch of transactions as fraudulent. Instead of a black-box “high-risk” tag, the XAI layer breaks it down: 62% of the fraud probability came from velocity spikes, 25% from mismatched device IDs, and 13% from abnormal session times.

Analysts quickly verify the reasoning, approve genuine users, and freeze only the real threat. No guesswork, no delay.

5. Multimodal AI & Data Fusion

Fraud doesn’t live in one type of data anymore; it hides in the gaps between them. Multimodal AI models close those gaps by combining signals from multiple sources: text, audio, image, transaction logs, biometrics, and even behavioral cues.

How it works in fraud detection:

Cross-domain inputs: The model ingests diverse inputs, like transaction metadata, voice recordings, chat transcripts, and device telemetry, through separate encoders designed for each data type.

Representation alignment: Each encoder converts its input into an embedding vector within a shared latent space, allowing the system to compare relationships across modalities (e.g., how a caller’s tone correlates with transaction anomalies).

Fusion layer: These embeddings are merged using attention mechanisms or late-fusion architectures that weigh which modality contributes most to a risk decision in real time.

Conflict detection: When signals disagree, say, a user’s voice sounds stressed while their transaction pattern appears normal, the model flags it for deeper inspection.

Contextual learning: Continuous feedback loops teach the system which combinations of signals most often predict fraud, sharpening precision over time.
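
The fusion and conflict-detection steps can be sketched as follows. This assumes each modality has already been reduced to a single risk value between 0 and 1 by its own encoder, and it uses fixed relevance logits where a real attention layer would compute them from the inputs. The modality names, logits, and the `gap` threshold are hypothetical.

```python
import math


def softmax(values):
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(modality_risks, relevance_logits):
    """Late fusion: weight each modality's risk by a softmax over relevance logits."""
    names = list(modality_risks)
    weights = softmax([relevance_logits[n] for n in names])
    fused = sum(w * modality_risks[n] for w, n in zip(weights, names))
    return fused, dict(zip(names, weights))


def has_conflict(modality_risks, gap=0.5):
    """True when the most and least suspicious modalities disagree strongly."""
    values = list(modality_risks.values())
    return max(values) - min(values) >= gap


# The voice sounds fine, but the device and account activity look risky.
risks = {"voice": 0.1, "device": 0.9, "activity": 0.8}
logits = {"voice": 0.0, "device": 1.0, "activity": 1.0}

fused_risk, attention = fuse(risks, logits)
needs_review = has_conflict(risks)
```

Keeping the per-modality weights alongside the fused score also makes it easier to explain later which signal drove a decision.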

Use Case:

A fintech support line receives a call from a “customer” requesting a high-limit transfer. The voice sounds authentic, and the account details check out. But when the multimodal model cross-references voice embeddings, device fingerprints, and account activity, it detects subtle mismatches that no single signal would reveal on its own.

Models sound great in theory, until you have to plug them into messy real-world systems, juggle latency limits, and keep regulators happy. That’s where the real test begins.

Practical Considerations and Deployment Challenges

Building a fraud detection model is the easy part. Keeping it accurate, compliant, and fast enough to matter, that’s the hard bit. The jump from prototype to production is where most systems break.

Here’s what that looks like in practice.

1. Latency is a dealbreaker

A fraud model that takes a few seconds too long might as well not exist. In payments and voice verification, even a 300 ms delay can break the flow or block a legitimate user. Real-time detection means optimizing every stage, from feature extraction to model inference, without sacrificing accuracy.

That’s why production systems often use distilled or quantized models, caching key embeddings for faster scoring.
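
As a rough illustration of embedding caching, the sketch below memoizes a stand-in embedding function with `functools.lru_cache` and measures each scoring call against a latency budget. The embedding logic, the risk formula, and the 50 ms budget are placeholders, not anyone's real scoring pipeline.

```python
import time
from functools import lru_cache

lookups = {"count": 0}  # counts how often the slow path actually runs


@lru_cache(maxsize=100_000)
def device_embedding(device_id):
    """Stand-in for a slow embedding computation or remote feature fetch."""
    lookups["count"] += 1
    # Toy vector derived from the ID's characters; a real system would call a model
    return tuple((ord(ch) % 7) / 7 for ch in device_id[:4])


def score_event(device_id, amount, budget_ms=50.0):
    """Return (risk, elapsed_ms, within_budget) for one event."""
    start = time.perf_counter()
    embedding = device_embedding(device_id)
    risk = min(1.0, 0.5 * sum(embedding) / len(embedding) + amount / 10_000)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return risk, elapsed_ms, elapsed_ms <= budget_ms


first = score_event("dev-42", 1200.0)
second = score_event("dev-42", 300.0)  # embedding now served from the cache
```

The second call skips the expensive lookup entirely. In production the cache usually lives in a shared store with an expiry policy, so stale embeddings do not linger after a device's behavior changes.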

2. False positives will wreck user trust

It’s easy to catch fraud if you flag everything. The challenge is catching only the right things. False positives frustrate customers, kill conversions, and force human reviewers to waste hours. Teams now pair anomaly-based alerts with contextual rules and ensemble scoring to keep precision high.

Every false positive is logged, analyzed, and recycled back into the model’s learning loop.
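
The trade-off is easiest to see by computing precision and recall at two thresholds. The scores and labels below are invented for illustration; `1` marks confirmed fraud and `0` marks a legitimate event.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when every score at or above threshold is flagged."""
    flagged = [s >= threshold for s in scores]
    tp = sum(f and y == 1 for f, y in zip(flagged, labels))
    fp = sum(f and y == 0 for f, y in zip(flagged, labels))
    fn = sum((not f) and y == 1 for f, y in zip(flagged, labels))
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


scores = [0.95, 0.90, 0.80, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 0, 0, 0]

loose = precision_recall(scores, labels, threshold=0.5)
strict = precision_recall(scores, labels, threshold=0.85)
```

The loose threshold catches every fraud case but also blocks one legitimate customer; the strict one blocks nobody legitimate but misses a fraud case. Picking the operating point is a business decision as much as a modeling one.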

3. Data pipelines are rarely clean

Fraud detection models rely on streaming, structured, and sometimes ugly data: half-complete logs, duplicated IDs, and missing timestamps. Bad ingestion kills model performance faster than bad architecture.

Teams are now investing more in data validation, schema evolution, and real-time feature stores than in the models themselves. The rule: if your data pipeline breaks, your fraud detection does too.

4. Regulatory transparency isn’t optional anymore

Whether you’re in finance, insurance, or healthcare, regulators now expect explainability. “The model said so” doesn’t fly. Systems need audit trails, clear logs of what features triggered each fraud score, how decisions were made, and who approved overrides.

This is where Explainable AI (SHAP/LIME) and versioned model tracking aren’t luxuries; they’re survival tools.
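
One way to picture such an audit trail is a structured record written for every decision. The sketch below is an assumed shape, not a regulatory standard: the field names, the model version string, and the sample values are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class FraudDecisionRecord:
    event_id: str
    model_version: str          # which versioned model produced the score
    score: float
    top_features: dict          # feature name -> contribution to the score
    decision: str               # e.g. "approve", "review", "block"
    decided_at: str             # ISO 8601 timestamp
    override_by: Optional[str] = None  # analyst who overrode the model, if any


def to_audit_line(record):
    """Serialize one decision as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = FraudDecisionRecord(
    event_id="txn-0001",
    model_version="fraud-model-v12",
    score=0.91,
    top_features={"velocity": 0.62, "device_mismatch": 0.25},
    decision="block",
    decided_at="2025-03-01T12:00:00Z",
)
audit_line = to_audit_line(record)
```

Writing one line per decision keeps the log easy to append to and easy to search when an auditor asks why a specific transaction was blocked.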

5. Model drift is silent but deadly

Fraud patterns change faster than release cycles. A model that was great three months ago might quietly degrade without anyone noticing. Continuous monitoring for drift, via PSI (Population Stability Index) or concept drift metrics, keeps teams ahead of that decay.

Smart setups now include automated retraining triggers and “shadow models” running in parallel to test new updates without affecting production decisions.
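
PSI itself is a short formula: bucket a reference sample and a recent sample into the same bins, then sum `(actual - expected) * ln(actual / expected)` over the bins. The sketch below assumes fixed bin edges and a small epsilon to avoid taking the log of zero; the score samples are invented.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples over shared bin edges."""

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # index of the bin v falls into
        # Replace empty bins with eps so the logarithm stays defined
        return [max(c / len(values), eps) for c in counts]

    e_shares, a_shares = shares(expected), shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


edges = [0.25, 0.5, 0.75]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
recent_scores = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.85, 0.95]

psi_same = psi(training_scores, training_scores, edges)
psi_shifted = psi(training_scores, recent_scores, edges)
```

A commonly cited rule of thumb treats values under 0.1 as stable and values above 0.25 as a significant shift worth investigating, though teams typically tune those cutoffs to their own data. An automated retraining trigger can be as simple as alerting when the PSI of a key feature crosses the chosen cutoff.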

All this talk about models, pipelines, and latency means nothing if the tools behind them can’t keep up. That’s where companies like Smallest.ai come in.

How Smallest.ai Fits Into the Fight Against Fraud

Most companies talk about “real time.” Smallest.ai actually builds it. Their platforms, Waves and Atoms, are designed for scenarios where every millisecond counts and context can’t afford to lag. In AI fraud detection in 2025, that timing edge is everything.

1. Waves

Waves isn’t just a text-to-speech engine; it’s an audio intelligence layer that can generate, analyze, and react to human-like speech on the fly. In the fraud space, that opens up serious possibilities:

Voice Authentication & Anomaly Detection: When a user speaks, Waves can generate acoustic embeddings, digital fingerprints of their voice.

If a cloned voice or deepfake tries to pass verification, the system detects subtle shifts in tone, pitch, or timing that AI-generated audio can’t perfectly mimic.

Real-Time Call Monitoring: In contact centers, Waves enables AI models to listen as conversations happen, flagging speech patterns that deviate from typical customer behavior: rushed tones, unnatural pauses, or inconsistent phrasing.

Training & Simulation: Fraud teams can use Waves to simulate voice scams or deepfake scenarios, training AI agents and analysts to recognize the cues before they hit production systems.

2. Atoms

Atoms is where conversational AI meets operational intelligence. These aren’t just scripted bots; they’re adaptive, low-latency AI agents capable of handling live voice and chat interactions at enterprise scale. For fraud prevention, that adaptability makes all the difference.

Transaction Verification: Atoms can confirm high-risk transactions in real time, calling customers, verifying intent through natural conversation, and spotting hesitation patterns that suggest coercion or impersonation.

Behavioral Monitoring: Each conversation feeds back into a behavioral model, helping detect account takeovers or abnormal engagement patterns before they escalate.

Scalable Response: During large-scale fraud events, Atoms can handle thousands of simultaneous verifications without compromising accuracy or tone, something human teams simply can’t match.

3. Built for Real-World Integration

Both platforms plug into modern fraud stacks through APIs and SDKs, meaning they can feed directly into existing machine learning pipelines.

Whether it’s sending voice embeddings to a fraud model or using Atoms to validate suspicious actions, Smallest.ai’s tools fit into workflows instead of forcing teams to rebuild them. And in 2025, that’s exactly what separates working AI from wasted potential.

Conclusion

The difference between staying safe and getting blindsided now comes down to how fast your systems can think, react, and adapt. AI isn’t the magic bullet everyone sells it as. It’s a toolkit, and like any tool, it’s only as good as how (and how quickly) you use it.

That’s exactly where Smallest.ai earns its name: small latency, massive impact. Whether it’s real-time voice authentication through Waves or conversational verification via Atoms, their tech shows what AI can actually look like when it’s built for the messy, unpredictable side of fraud.

Got questions or want to see how this works in your setup? Book a demo with Smallest.ai, the team that actually builds real-time AI, not just talks about it.

FAQs

1. Are AI fraud detection techniques in 2025 completely automated?

Not quite. AI handles the heavy lifting, analyzing millions of data points per second, but human analysts still close the loop. They review edge cases, label new patterns, and retrain models to handle the next wave of fraud.

2. How does AI catch voice or deepfake-based fraud?

Modern systems don’t just listen for what is said; they analyze how it’s said. AI models can pick up micro-signatures in tone, pitch, and cadence that cloned voices can’t perfectly replicate. Platforms like Smallest.ai’s Waves take this further with real-time acoustic analysis that flags potential impersonation mid-call.

3. Can AI detect fraud before it happens?

Yes, that’s the shift. Predictive models now identify behavior that leads up to fraud: unusual browsing paths, inconsistent device use, or hesitation during verification calls. It’s about pre-empting, not just reacting.

4. Is it expensive to integrate AI fraud detection tools?

Costs vary, but APIs and SDKs have made integration faster and cheaper. Platforms like Smallest.ai offer scalable pricing, from experimental setups to full enterprise deployments, so you can start small and scale without rebuilding.

5. What makes AI fraud detection in 2025 different from previous years?

Fraudsters now use AI too, from voice cloning to automated identity creation. That means fraud detection has shifted from static rule engines to adaptive AI systems that learn continuously. Real-time detection, behavioral analytics, and multimodal models are the new standard.