Learn how to find small AI models optimized for embedded systems, covering optimization techniques, use cases, and integration into resource-limited devices.

Akshat Mandloi

Updated on January 27, 2026 at 5:20 PM

AI adoption is now widespread: 78% of enterprises use AI in at least one department, and many are turning to smaller, more efficient models that run directly on embedded devices. In 2025, small AI models became increasingly prevalent, with some 10 to 1,000 times smaller than large models like GPT-4. On focused tasks, these compact models can approach the performance of much larger ones while being more efficient, easier to customize, and better for data privacy, since data can stay on the device.

In this post, we’ll explore where to find small AI models optimized for embedded systems. We’ll also cover key optimization techniques and how to integrate these models into devices with limited resources.

What Are Embedded AI Systems?

Embedded AI systems are specialized computing systems that run AI models to perform specific tasks with limited resources, which distinguishes them from general-purpose computers. They are found in devices such as smartphones, IoT gadgets, wearables, and industrial machines, where they process data and make decisions locally instead of relying on the cloud.

Each of these systems is built for a specific function, such as managing home automation or running a factory machine. Because they must meet tight cost, size, and power budgets, they operate under hard limits on compute, memory, and energy.

Key Challenges in Deploying AI on Embedded Systems:

Limited Processing Power and Memory: Embedded devices have far less compute and memory than traditional computers, so the AI models they run must be optimized to operate efficiently.

Energy Constraints: Many embedded devices are battery-powered or rely on energy harvesting, meaning they must be designed for minimal power consumption.

Real-Time Data Processing and Low Latency: Many of these systems must process data as it arrives and respond within tight deadlines, for example when analyzing sensor data or providing immediate feedback.

Given these limitations, it’s important to understand why small AI models are essential for embedded AI systems to function effectively within these constraints.

Why Small AI Models Are Key for Embedded AI Systems

Embedded AI systems perform specific tasks on limited resources, which makes small, efficient AI models a natural fit. Here’s why small AI models are so important in these systems:

Optimized for Limited Resources: Small AI models are lightweight enough to run within the memory and compute budgets of embedded hardware without overloading it.

Energy Efficiency: Fewer parameters and operations mean less energy per inference, which extends battery life while still supporting useful tasks.

Real-Time Processing: Running on the device avoids a network round trip to the cloud, so small AI models can analyze data and make decisions with lower latency.

Enhanced Autonomy: Small AI models allow embedded AI systems to operate independently, making real-time decisions based on locally processed data.

By using small, optimized AI models, embedded systems can become smarter, more efficient, and more autonomous, all while staying within their limited resources.

Ready to implement small, optimized AI models for your embedded systems? Discover how Smallest.ai’s solutions can help you maximize efficiency and performance. Book a demo to explore our range of voice agents and AI models, designed for seamless integration with embedded devices.

Now that we know why small models are crucial, let’s look at what to consider when selecting the right one for your embedded system.

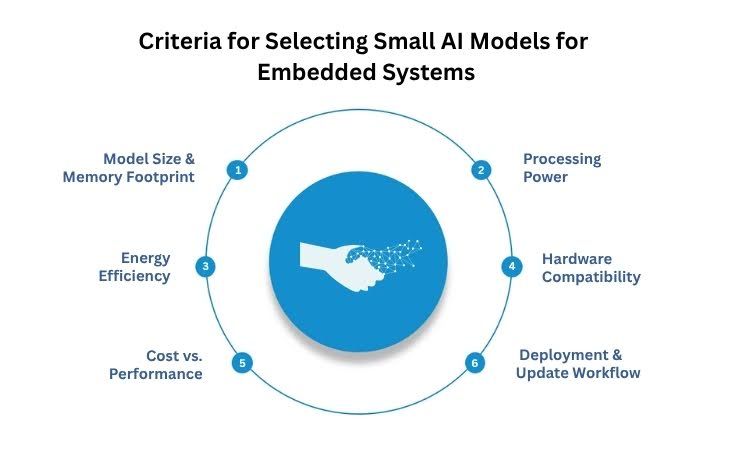

How to Choose the Right AI Model for Embedded Devices

Selecting the right small AI model for embedded AI systems involves evaluating a few key factors that ensure the model performs effectively within the constraints of embedded hardware. Here are some important considerations to keep in mind:

1. Model Size & Memory Footprint

Small AI models must fit within the limited memory of embedded devices without compromising performance. Model size determines how much flash is needed to store the weights, while the intermediate activations produced during inference largely determine how much RAM is needed.

Focus on compact models that require less RAM and flash while still delivering the results you need. Compression techniques such as quantization and pruning help here.
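You can run a rough sizing check before any deployment work. The sketch below assumes that weights are stored in flash and that the peak set of live activations sits in RAM, and it ignores runtime code, metadata, and scratch buffers. The model size (400,000 parameters), the peak activation count, and the 1 MB flash / 256 KB RAM budgets are hypothetical values chosen for illustration.

```python
# Bytes needed to store one value at each numeric precision.
BYTES_PER_VALUE = {"float32": 4, "float16": 2, "int8": 1}


def weight_storage_bytes(num_params: int, dtype: str) -> int:
    """Flash needed for the weights alone (ignores metadata and runtime code)."""
    return num_params * BYTES_PER_VALUE[dtype]


def fits_device(num_params: int, peak_activations: int, dtype: str,
                flash_budget: int, ram_budget: int) -> bool:
    """Rough check: weights must fit in flash, peak live activations in RAM."""
    flash_needed = weight_storage_bytes(num_params, dtype)
    ram_needed = peak_activations * BYTES_PER_VALUE[dtype]
    return flash_needed <= flash_budget and ram_needed <= ram_budget


# Hypothetical model and device budgets, for illustration only.
PARAMS = 400_000
PEAK_ACTIVATIONS = 80_000
FLASH_BUDGET = 1024 * 1024  # 1 MB
RAM_BUDGET = 256 * 1024     # 256 KB

for dtype in ("float32", "int8"):
    print(dtype, weight_storage_bytes(PARAMS, dtype),
          fits_device(PARAMS, PEAK_ACTIVATIONS, dtype, FLASH_BUDGET, RAM_BUDGET))
```

With these assumed numbers, the float32 model (1.6 MB of weights) does not fit, while the int8 version (400 KB) does. This is one reason quantization is often the first optimization to try.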

2. Processing Power

AI algorithms can be computationally intensive. Small models require optimization for efficient processing to run effectively without overwhelming the system.

Look for hardware with enough computational power, typically rated in FLOPS (floating-point operations per second) or TOPS (trillions of operations per second), to meet the model's needs without consuming excessive energy.
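Peak FLOPS or TOPS ratings are upper bounds, so it helps to estimate how much work the model actually needs. The sketch below does three things:

- It counts multiply-accumulate operations (MACs) for one convolution layer.
- It compares a standard convolution with a depthwise separable one, the building block of MobileNet-style models.
- It converts MACs into a latency estimate.

The layer shape, the 0.5 TOPS peak rating, and the 30% utilization factor are hypothetical. Latency measured on the real board is what ultimately matters.

```python
def conv2d_macs(out_h: int, out_w: int, in_ch: int, out_ch: int, k: int) -> int:
    """MACs for a standard k x k convolution: each output value sums k*k*in_ch products."""
    return out_h * out_w * out_ch * in_ch * k * k


def depthwise_separable_macs(out_h: int, out_w: int, in_ch: int, out_ch: int, k: int) -> int:
    """MACs for a k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = out_h * out_w * in_ch * k * k
    pointwise = out_h * out_w * in_ch * out_ch
    return depthwise + pointwise


def estimated_latency_ms(macs: int, peak_tops: float, utilization: float) -> float:
    """Convert MACs to milliseconds, counting 1 MAC as 2 operations (multiply + add)."""
    ops = 2 * macs
    effective_ops_per_second = peak_tops * 1e12 * utilization
    return ops / effective_ops_per_second * 1000.0


# Hypothetical layer: 56 x 56 output, 64 input and 64 output channels, 3 x 3 kernel.
standard = conv2d_macs(56, 56, 64, 64, 3)
separable = depthwise_separable_macs(56, 56, 64, 64, 3)
print(standard, separable, round(standard / separable, 1))
print(round(estimated_latency_ms(standard, peak_tops=0.5, utilization=0.3), 2))
```

For this assumed layer, the separable version needs roughly 8× fewer MACs. That kind of architectural choice often matters more than raw hardware throughput.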

3. Energy Efficiency

Small AI models should be designed to minimize energy consumption, enabling long-lasting performance in devices such as IoT sensors, wearables, or mobile units. Choose models that are optimized for energy efficiency, ensuring they perform well while minimizing the impact on battery life.
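For battery-powered devices, the time spent running inference often dominates energy use. The sketch below estimates battery life from a simple duty cycle: the device wakes, runs the model, and sleeps until the next period. All currents and the 220 mAh capacity are hypothetical. The calculation also ignores self-discharge, radio and sensor power, and regulator losses.

```python
def average_current_ma(active_ma: float, active_s: float,
                       sleep_ma: float, period_s: float) -> float:
    """Time-weighted average current over one wake/sleep period."""
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized battery life: capacity divided by average draw."""
    return capacity_mah / avg_current_ma


# Hypothetical device: 10 mA while inferring, 5 uA (0.005 mA) asleep, one inference per second.
slow = average_current_ma(active_ma=10.0, active_s=0.020, sleep_ma=0.005, period_s=1.0)
fast = average_current_ma(active_ma=10.0, active_s=0.010, sleep_ma=0.005, period_s=1.0)
print(round(battery_life_hours(220, slow) / 24, 1), "days with a 20 ms model")
print(round(battery_life_hours(220, fast) / 24, 1), "days with a 10 ms model")
```

Under these assumptions, halving inference time nearly doubles battery life, because the active phase dominates the average current.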

4. Hardware Compatibility

The AI model should be compatible with the target hardware, whether it’s a microcontroller (MCU), neural processing unit (NPU), or digital signal processor (DSP). This ensures the model can be deployed without needing additional complex adaptations.

Confirm that the embedded AI system’s hardware can support the chosen model and provide the necessary processing power for smooth operation.
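One concrete compatibility check is whether the target runtime or accelerator supports every operator in the model. An unsupported operator may fall back to a slower path or prevent the model from loading at all. The sketch below compares a model's operator list against the set a target supports. Both lists are hypothetical placeholders. In practice, you would read the operators from the model file and the supported set from the runtime's or vendor's documentation.

```python
def unsupported_ops(model_ops: list[str], supported_ops: set[str]) -> list[str]:
    """Return operators the target cannot run, in first-seen order and without duplicates."""
    missing: list[str] = []
    for op in model_ops:
        if op not in supported_ops and op not in missing:
            missing.append(op)
    return missing


# Hypothetical operator sequence taken from a model graph.
model_ops = ["CONV_2D", "DEPTHWISE_CONV_2D", "RELU6", "GELU",
             "FULLY_CONNECTED", "GELU", "SOFTMAX"]

# Hypothetical kernels available in the target's runtime build.
target_ops = {"CONV_2D", "DEPTHWISE_CONV_2D", "RELU6", "FULLY_CONNECTED", "SOFTMAX"}

missing = unsupported_ops(model_ops, target_ops)
if missing:
    print("Not deployable as-is; replace or implement:", missing)
```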

5. Cost vs. Performance

Budget is often a deciding factor in selecting hardware and AI models. A balance between performance and cost is essential, especially when scaling solutions across multiple devices.

Aim for a model that delivers the right level of performance without exceeding your cost constraints. Efficient small models should provide the best return on investment for embedded applications.
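One way to make this trade-off explicit takes three steps:

1. Measure each candidate model-and-hardware combination on your own data.
2. Discard candidates that miss the accuracy or latency targets.
3. Pick the cheapest of the rest.

The candidates, numbers, and thresholds below are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float       # measured on your own validation set (0 to 1)
    latency_ms: float     # measured on the target board
    unit_cost_usd: float  # per-device hardware cost


def cheapest_acceptable(candidates: list[Candidate], min_accuracy: float,
                        max_latency_ms: float) -> Optional[Candidate]:
    """Cheapest candidate that meets both targets, or None if none does."""
    acceptable = [c for c in candidates
                  if c.accuracy >= min_accuracy and c.latency_ms <= max_latency_ms]
    return min(acceptable, key=lambda c: c.unit_cost_usd, default=None)


candidates = [
    Candidate("int8 model on MCU", accuracy=0.91, latency_ms=45.0, unit_cost_usd=4.50),
    Candidate("float32 model on MCU", accuracy=0.92, latency_ms=180.0, unit_cost_usd=4.50),
    Candidate("float32 model on NPU board", accuracy=0.92, latency_ms=12.0, unit_cost_usd=18.00),
]

best = cheapest_acceptable(candidates, min_accuracy=0.90, max_latency_ms=100.0)
fleet_size = 10_000
print(best.name, f"fleet hardware cost: ${best.unit_cost_usd * fleet_size:,.0f}")
```

In this made-up comparison, the quantized model gives up one percentage point of accuracy but saves $13.50 per device compared with the NPU board. Across a fleet, those savings add up quickly.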

6. Deployment and Update Workflow

A streamlined deployment process ensures that updates to the model are easily managed and don’t disrupt the system's operation.

Ensure that the model and its deployment framework support continuous integration and delivery (CI/CD) as well as over-the-air (OTA) updates. This will help keep the system running smoothly over time.
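A common pattern for safe OTA model updates uses A/B slots. The device verifies the new model before it replaces anything, then switches to it, and the previous model stays available for rollback. The sketch below simulates the two slots in memory and checks a SHA-256 digest with Python's `hashlib`. The `ModelSlots` class and its methods are hypothetical. A real device would write to flash, persist the active-slot flag, and verify a cryptographic signature rather than a bare hash.

```python
import hashlib


class ModelSlots:
    """Two model slots (A/B): the device runs one while the other receives updates."""

    def __init__(self, initial_model: bytes):
        self.slots = {"A": initial_model, "B": b""}
        self.active = "A"

    def inactive(self) -> str:
        return "B" if self.active == "A" else "A"

    def apply_update(self, blob: bytes, expected_sha256: str) -> bool:
        """Install `blob` in the inactive slot and switch to it, only if its digest matches."""
        if hashlib.sha256(blob).hexdigest() != expected_sha256:
            return False  # corrupted or incomplete download: leave both slots untouched
        target = self.inactive()
        self.slots[target] = blob
        self.active = target
        return True

    def rollback(self) -> None:
        """Switch back to the other slot, e.g. if the new model fails a self-test."""
        previous = self.inactive()
        if self.slots[previous]:
            self.active = previous

    def current_model(self) -> bytes:
        return self.slots[self.active]


# Usage with placeholder model bytes.
slots = ModelSlots(b"model-v1")
v2 = b"model-v2"
print(slots.apply_update(v2, hashlib.sha256(v2).hexdigest()))            # True
print(slots.apply_update(b"corrupted", hashlib.sha256(v2).hexdigest()))  # False
slots.rollback()
print(slots.current_model())                                             # b'model-v1'
```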

By considering these criteria, businesses can select small AI models that meet the technical needs of their embedded AI systems while aligning with operational goals.

With these selection criteria in mind, let's explore where you can find optimized small AI models that fit your embedded system's needs.

Top Sources to Find Small AI Models for Embedded Systems

When it comes to finding small AI models optimized for embedded AI systems, several reliable sources and platforms offer ready-to-use or customizable models.

1. Online Model Libraries and Repositories

TensorFlow Hub and PyTorch Hub are two widely used platforms that provide pre-trained AI models, including lightweight models suitable for embedded devices.

Many of these models, such as MobileNet variants, are designed for constrained hardware, which makes them good starting points for edge devices. Models aimed at the smallest microcontrollers usually need further compression.

2. Open-source AI Platforms and Communities

TensorFlow Lite is TensorFlow's lightweight runtime for mobile and embedded devices, and its TensorFlow Lite for Microcontrollers variant targets MCUs with only kilobytes of memory. It is well suited to small AI models because its converter can shrink models (for example, through quantization) while largely preserving accuracy.

Edge Impulse is a platform that simplifies machine learning operations for embedded AI systems, specifically designed for low-power devices. It offers tools for sensor data processing and deploying small, efficient models directly to edge devices.

TinyML libraries and frameworks, such as uTensor and Arm's CMSIS-NN kernel library, target ultra-low-power microcontrollers. They are well suited to running small AI models with minimal computational overhead.

3. Cloud-based AI Model Services

Platforms such as Google Cloud AI and AWS SageMaker offer cloud-based services that enable you to train, optimize, and deploy small AI models for embedded systems.

These services often enable model tuning and compression techniques to reduce model size and improve efficiency for edge deployment.

4. Corporate AI Model Distribution Platforms

Many companies, such as Qualcomm and NVIDIA, offer specialized AI tools and models optimized for embedded AI systems. These platforms often provide solutions tailored to their own chips, ensuring efficient deployment of small AI models on hardware built around them.

These vendor solutions can be particularly helpful for enterprises that want optimized models to integrate seamlessly with the vendor's embedded hardware.

These platforms offer a range of solutions for accessing and integrating small, optimized AI models into embedded systems. This ensures efficient deployment and improved performance for your devices.

Smallest.ai provides highly optimized voice models and AI solutions, specifically designed for use in embedded systems. With a focus on performance and energy efficiency, Smallest.ai enables seamless integration of small AI models into devices across various industries. Book a demo to discover how Smallest.ai can enhance your embedded AI systems today.

Once you know where to find small AI models, the next step is understanding which frameworks can help you optimize them for your embedded systems.

Best Frameworks for Optimizing AI Models on Edge Devices

To develop and deploy small AI models, several frameworks are specifically designed to cater to the constraints of embedded AI systems. Here are some of the most relevant frameworks for optimizing small AI models:

| Framework | Best For | Hardware Support | Notes |
|---|---|---|---|
| TensorFlow Lite | General purpose, fast inference | ARM Cortex-M, Edge TPU, NXP | Large ecosystem, model converter tools |
| Edge Impulse | End-to-end ML ops for edge | STM32, Nordic, Renesas, Sony | Great for sensor processing, UI-based |
| TinyML (uTensor) | Ultra-low-power MCUs | Cortex-M, Arduino, Ambiq | Optimized for small models and inference |
| TVM | Model compilation and optimization | Versatile: GPU, MCU, ASIC | Flexible, supports auto-tuning |
| CMSIS-NN | Efficient neural nets for ARM | ARM Cortex-M MCUs | Lightweight, great for simple models |

These frameworks and platforms offer powerful tools to help developers find, optimize, and deploy small AI models for embedded systems. The right framework or platform depends on the hardware you are working with and the level of optimization you need for your model.

After selecting the right framework, it’s time to discuss the best strategies for effectively deploying these small AI models in embedded systems.

Effective Strategies for Deploying AI Models in Embedded Systems

Deploying small AI models in embedded AI systems requires careful consideration of both technical constraints and optimization techniques. Here’s how to effectively develop and deploy AI models on devices with limited resources, ensuring both performance and efficiency.

1. Optimization Techniques for Small AI Models

To ensure that small AI models perform well on embedded systems, optimization is essential. The following techniques reduce model size and computation while keeping any accuracy loss small:

Model Pruning: Remove low-importance weights and connections (for example, those with the smallest magnitudes) to reduce model complexity.

Quantization: Lower the precision of model weights and activations, for example from 32-bit floating point to 8-bit integers. This cuts weight storage by a factor of four and enables integer arithmetic.

Knowledge Distillation: Train a smaller "student" model to reproduce the outputs of a larger "teacher" model, so the student keeps much of the teacher's accuracy at a fraction of the size.
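The three techniques above can be sketched in plain Python on a small list of weights. This illustrates the ideas only; it is not how any framework implements them. Each technique is shown in a specific variant:

- **Pruning:** unstructured magnitude pruning.
- **Quantization:** per-tensor affine (asymmetric) int8.
- **Distillation loss:** the temperature-softened KL divergence between teacher and student outputs, scaled by T². In practice, this loss is usually combined with the ordinary loss on the true labels.

The weights and logits are made-up values.

```python
import math


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Unstructured pruning: zero the `sparsity` fraction of weights with the smallest magnitudes."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_remove = set(order[:k])
    return [0.0 if i in to_remove else w for i, w in enumerate(weights)]


def quantize_int8(values: list[float]) -> tuple[list[int], float, int]:
    """Affine int8 quantization: q = round(x / scale) + zero_point, clamped to [-128, 127]."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must contain 0
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all values are zero
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: list[int], scale: float, zero_point: int) -> list[float]:
    return [(qi - zero_point) * scale for qi in q]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 4.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.5, -1.2, 0.03, 0.9, -0.01, 2.0]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
print(pruned)
print(q, "max error:", max(abs(a - b) for a, b in zip(weights, restored)))
print(distillation_loss([2.0, 0.5, -1.0], [3.0, 0.2, -2.0]))
```

Real toolchains apply these steps per layer (often per channel for quantization). They then fine-tune or calibrate the model to recover accuracy.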

2. Seamless AI Model Deployment

Deploying AI models effectively on embedded AI systems involves considering both hardware and software factors. Key practices include:

Model Compression: Further compress models to fit storage and memory limits of embedded devices.

Hardware Optimization: Tailor the model for specific hardware, ensuring that the AI performs optimally on the target system.

Runtime Environment: Pair the model with an inference runtime that supports all of its operators on the target hardware, such as TensorFlow Lite for Microcontrollers on an MCU.
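On microcontrollers without a filesystem, a common way to meet storage and runtime requirements is to compile the model into the firmware image as a constant byte array. The TensorFlow Lite for Microcontrollers documentation describes generating such an array with `xxd -i`. The sketch below is a hypothetical Python equivalent that also checks the model against a flash budget. The variable name `g_model`, the 16-byte `alignas` alignment, and the 512 KB budget are assumptions to adjust for your runtime and toolchain.

```python
def model_to_cpp_source(model: bytes, var_name: str = "g_model",
                        flash_budget: int = 512 * 1024, per_line: int = 12) -> str:
    """Render model bytes as C++ source that embeds the model in firmware."""
    if not model:
        raise ValueError("model is empty")
    if len(model) > flash_budget:
        raise ValueError(f"model is {len(model)} bytes; budget is {flash_budget}")
    rows = []
    for start in range(0, len(model), per_line):
        chunk = model[start:start + per_line]
        rows.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    return (
        f"// Generated file: {len(model)} bytes of model data.\n"
        f"alignas(16) const unsigned char {var_name}[] = {{\n"
        + "\n".join(rows)
        + f"\n}};\nconst unsigned int {var_name}_len = {len(model)};\n"
    )


# Usage with placeholder bytes; in practice, read the converted model file,
# e.g. open("model.tflite", "rb").read()
print(model_to_cpp_source(bytes(range(20))))
```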

3. Ensuring Reliability in Embedded AI Systems

To achieve long-term success with AI models on embedded AI systems, it’s crucial to focus on reliability and robustness:

Modular Design: Break down systems into smaller, independent modules for easier management and testing.

Thorough Testing: Test AI models in various real-world conditions to ensure their reliability.

Redundancy and Fault Tolerance: Use backup systems and components to minimize the risk of failure.
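Redundancy does not always require duplicate hardware. A lightweight software pattern wraps the model so that an inference error or a low-confidence result falls back to a simpler, well-tested rule, and counts how often that happens. The class, the 0.6 confidence threshold, and the vibration rule below are hypothetical and only illustrate the pattern.

```python
from typing import Callable

# A prediction is a (label, confidence) pair.
Prediction = tuple[str, float]


class GuardedClassifier:
    """Run the primary model; fall back to a simple rule on errors or low confidence."""

    def __init__(self, model: Callable[[list[float]], Prediction],
                 fallback: Callable[[list[float]], str], min_confidence: float = 0.6):
        self.model = model
        self.fallback = fallback
        self.min_confidence = min_confidence
        self.fallback_count = 0  # worth reporting in device telemetry

    def predict(self, sample: list[float]) -> str:
        try:
            label, confidence = self.model(sample)
        except Exception:
            # Any inference failure (bad input, runtime error) triggers the fallback.
            self.fallback_count += 1
            return self.fallback(sample)
        if confidence < self.min_confidence:
            self.fallback_count += 1
            return self.fallback(sample)
        return label


def threshold_rule(sample: list[float]) -> str:
    """Hypothetical fallback: flag vibration readings above a fixed limit."""
    return "alarm" if sample and max(sample) > 5.0 else "normal"


def stub_model(sample: list[float]) -> Prediction:
    """Stand-in for a real model: fails on empty input, unsure on large readings."""
    if not sample:
        raise ValueError("empty input")
    return ("normal", 0.95) if max(sample) < 3.0 else ("alarm", 0.55)


guard = GuardedClassifier(stub_model, threshold_rule)
print(guard.predict([1.0, 2.0]), guard.predict([6.0]), guard.predict([]), guard.fallback_count)
```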

4. Security and Privacy in Embedded AI Deployments

Security and privacy considerations are critical when deploying AI models, especially in sensitive applications. Important practices include:

Data Encryption: Protect sensitive data with encryption to prevent unauthorized access.

Secure Boot: Verify the signature of the firmware, including any model it carries, at startup so that tampered code or models are never executed.

Explainable AI: Use AI models that offer transparency in decision-making, particularly for critical applications.
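Secure boot itself is enforced by the bootloader and a hardware root of trust, usually with asymmetric signatures. The sketch below only illustrates the underlying rule: never load a model that fails authentication. It uses HMAC-SHA256 from Python's standard `hmac` module. The key is a hypothetical placeholder. Any device that holds a shared key could also forge tags, so production systems generally prefer public-key signatures.

```python
import hashlib
import hmac


def sign_model(model: bytes, key: bytes) -> bytes:
    """Build-server side: compute an HMAC-SHA256 tag over the model bytes."""
    return hmac.new(key, model, hashlib.sha256).digest()


def load_model_if_authentic(model: bytes, tag: bytes, key: bytes) -> bytes:
    """Device side: refuse to use a model whose tag does not verify."""
    expected = hmac.new(key, model, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):  # constant-time comparison
        raise ValueError("model failed authentication; refusing to load")
    return model


KEY = b"hypothetical-device-key"  # placeholder; never hard-code real keys
model = b"model-v3"
tag = sign_model(model, KEY)
load_model_if_authentic(model, tag, KEY)  # succeeds
try:
    load_model_if_authentic(model + b"\x00", tag, KEY)  # tampered model
except ValueError as err:
    print(err)
```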

Successfully deploying small AI models in embedded AI systems requires attention to optimization, effective deployment strategies, and strong security practices to ensure performance and reliability.

Smallest.ai provides optimized voice AI models designed for seamless integration into embedded systems, enabling you to achieve efficient performance and reliability. Book a demo of our offerings and see how we can help you deploy AI in your devices with ease.

Conclusion

Deploying small AI models in embedded AI systems is a crucial step toward enabling efficient, reliable, and secure AI applications across industries. By focusing on optimization techniques like model pruning and quantization, and adhering to best deployment practices, businesses can ensure their AI models perform seamlessly in resource-constrained environments.

Smallest.ai offers a strong option for businesses looking to implement small, optimized AI models in embedded systems. Its highly efficient voice models, real-time voice agents, and advanced voice cloning technologies help organizations deploy AI smoothly on various embedded devices.

Ready to enhance your embedded systems with AI? Book a demo to explore how Smallest.ai's solutions can improve your business performance.

FAQs

1. What is embedded AI, and why does it matter?

Embedded AI is AI designed to run on devices with limited resources, like smartphones and IoT devices. It processes data locally, reducing cloud reliance, enhancing decision-making speed, and improving energy efficiency. Small AI models, optimized for these systems, ensure reliable performance while working within constraints.

2. Where can I find lightweight AI models for my embedded system?

You can find lightweight AI models from:

TensorFlow Lite: A lightweight version of TensorFlow for embedded devices.

Edge Impulse: A platform for machine learning on edge devices.

TinyML: Frameworks like uTensor for ultra-low-power devices.

Cloud services like Google Cloud AI and AWS SageMaker also offer model development tools.

3. How do I optimize an AI model for embedded systems?

Optimize compact AI models using:

Model Pruning: Remove unnecessary connections to reduce size.

Quantization: Lower precision to save resources.

Knowledge Distillation: Transfer knowledge from large models to smaller ones.

These techniques shrink models while maintaining performance.

4. What challenges arise when deploying AI on embedded systems?

Challenges include hardware limitations such as processing power, memory, and battery life. Balancing energy use, latency, and performance is key. Seamless integration with sensors and continuous testing are also vital for smooth deployment.

5. How can Smallest.ai help with deploying small AI models?

Smallest.ai provides efficient voice solutions for embedded systems, specifically designed for industries such as healthcare, retail, and logistics. Their AI models work with minimal resources, making it easy to deploy advanced AI on embedded devices.