Prithvi Bharadwaj

Updated on January 27, 2026 at 10:00 AM

Conversational AI has quickly evolved from simple chatbots to enterprise-ready systems capable of understanding context, reasoning through workflows, and delivering real-time, human-like interactions. For businesses, it’s no longer just about answering queries—it’s about transforming how customers engage and how operations scale.

As customer expectations rise and enterprises look for ways to reduce costs while improving service quality, conversational AI is becoming a strategic priority. It sits at the intersection of customer experience, automation, and data-driven decision-making, making it one of the most critical technologies shaping the future of business communication.

In this blog, we’ll explore the future of conversational AI in an enterprise context—market signals driving adoption, how it works at scale, the trends redefining its capabilities, implementation frameworks, and strategies to measure ROI.

Key Takeaways

Conversational AI is moving from chatbots to enterprise-grade systems, driving measurable impact in CX, operations, and decision-making.

How it works in practice: NLU/NLP, dialogue management, real-time voice (ASR + TTS), system integration, and continuous learning.

Enterprise trends shaping the future: generative AI, real-time voice, multilingual and emotion-aware agents, omnichannel continuity, and agentic conversational AI.

Adoption requires a structured framework: data readiness, architecture choices, integration, governance, and MLOps.

Market Signals Driving the Future of Conversational AI

The momentum behind conversational AI is not just hype—it’s backed by real market growth and enterprise behavior.

According to Grand View Research, the conversational AI market was valued at USD 11.58 billion in 2024, and is projected to reach USD 41.39 billion by 2030, growing at a CAGR of ~23.7% from 2025 to 2030.
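As a quick sanity check on those figures, the growth rate can be recomputed from the two market sizes. This is a minimal sketch that assumes 2024 is the base year and 2030 the end year, giving six compounding periods; the function name `cagr` is ours, not something from the report.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` yearly compounding steps."""
    return (end_value / start_value) ** (1 / periods) - 1


# Market sizes quoted above, in USD billions.
# Assumption: 2024 -> 2030 counts as six compounding periods.
implied_rate = cagr(11.58, 41.39, periods=6)
print(f"Implied CAGR: {implied_rate:.2%}")
```

The result lands within a fraction of a percentage point of the quoted ~23.7%, so the start value, end value, and growth rate are consistent with each other.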

Per the Deloitte 2025 Predictions Report, about 25% of enterprises already using generative AI were forecast to deploy autonomous AI agents in 2025, rising to 50% by 2027.

These numbers underscore that conversational AI is rapidly moving from pilot and experimentation into mainstream enterprise usage. Organizations that prepare now are better positioned to capture gains in efficiency, customer engagement, and competitive differentiation.

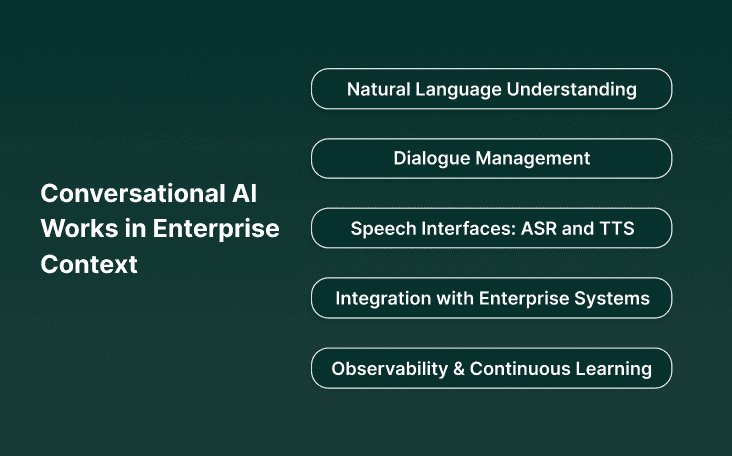

How Conversational AI Works in an Enterprise Context

For enterprises, the value of conversational AI lies not in its novelty but in how well it integrates with existing systems, scales across channels, and delivers consistent performance. At a high level, enterprise-grade conversational AI works through a combination of core components, each designed to ensure natural, reliable, and actionable conversations.

1. Natural Language Understanding (NLU) and Processing (NLP)

NLU engines interpret customer intent, entities, and sentiment, while NLP ensures responses are contextual and human-like. Advanced models can adapt to domain-specific terminology, making them critical for industries like healthcare, banking, or insurance.

2. Dialogue Management and Context Tracking

Dialogue managers keep conversations coherent, even across multiple turns or channels. They maintain context so that a customer switching from chat to voice doesn’t need to repeat themselves.
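To make context tracking concrete, here is a minimal sketch of a conversation state shared across channels. The class names, the `customer_id` key, and the in-memory dictionary are all illustrative assumptions; a production system would more likely keep this state in a shared database or cache.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationState:
    """Context for one customer, shared across channels (hypothetical schema)."""
    customer_id: str
    slots: dict[str, str] = field(default_factory=dict)            # facts gathered so far
    history: list[tuple[str, str]] = field(default_factory=list)   # (channel, utterance)


class ContextStore:
    """In-memory store keyed by customer ID, for illustration only."""

    def __init__(self) -> None:
        self._states: dict[str, ConversationState] = {}

    def get(self, customer_id: str) -> ConversationState:
        return self._states.setdefault(customer_id, ConversationState(customer_id))

    def record_turn(self, customer_id: str, channel: str, utterance: str, **slots: str) -> ConversationState:
        state = self.get(customer_id)
        state.history.append((channel, utterance))
        state.slots.update(slots)  # newly extracted entities extend the shared context
        return state


store = ContextStore()
store.record_turn("cust-42", "chat", "Where is my order?", order_id="A1001")
state = store.record_turn("cust-42", "voice", "I'd like to change the delivery address")
print(state.slots["order_id"])  # the order ID captured in chat is still available on the voice turn
```

Because the state is keyed by customer rather than by channel session, the voice turn can reuse what the chat turn already collected.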

3. Speech Interfaces: ASR and TTS

Automatic Speech Recognition (ASR) converts spoken input into text, while Text-to-Speech (TTS) generates natural-sounding spoken responses. For enterprises, the differentiator is latency: as a rule of thumb, a response needs to begin within about 200 ms for the interaction to feel seamless.

Learn more about low-latency design in Real-Time TTS Conversion Using AI.

4. Integration with Enterprise Systems

Real business value comes when conversational AI connects with CRMs, ERPs, ticketing systems, and payment gateways. This allows agents not just to talk, but to take action—resolving requests, updating records, or triggering workflows.
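One common way to let an agent "take action" is to map each recognized intent to a handler that calls the relevant backend. The sketch below assumes two made-up intents and stubbed handlers; in a real deployment these functions would wrap your CRM or ticketing APIs.

```python
from typing import Callable


# Hypothetical stand-ins for real CRM / ticketing API clients.
def lookup_order_status(entities: dict) -> str:
    return f"Order {entities['order_id']} is out for delivery."


def open_ticket(entities: dict) -> str:
    return f"Ticket opened: {entities['summary']}"


# Registry mapping intent names (assumed labels) to the action that fulfils them.
ACTIONS: dict[str, Callable[[dict], str]] = {
    "order_status": lookup_order_status,
    "report_issue": open_ticket,
}


def handle(intent: str, entities: dict) -> str:
    """Run the action registered for an intent, or fall back to a human handoff."""
    action = ACTIONS.get(intent)
    if action is None:
        return "Transferring you to a human agent."
    return action(entities)


print(handle("order_status", {"order_id": "A1001"}))
print(handle("change_plan", {}))
```

Keeping the registry separate from the dialogue logic means new integrations can be added without touching the conversation flow.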

5. Observability and Continuous Learning

Enterprise AI requires monitoring of key metrics—intent recognition accuracy, latency, containment rate, and customer satisfaction scores. Logs feed back into retraining cycles, making the system smarter and more reliable over time.
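As an illustration, several of these metrics can be computed directly from interaction logs. The log fields below (`predicted_intent`, `true_intent`, `latency_ms`, `escalated`) and the sample records are invented for the sketch; the 95th percentile uses the simple nearest-rank method.

```python
import math

# Hypothetical, hand-labelled interaction logs.
logs = [
    {"predicted_intent": "order_status", "true_intent": "order_status", "latency_ms": 180, "escalated": False},
    {"predicted_intent": "refund", "true_intent": "refund", "latency_ms": 210, "escalated": False},
    {"predicted_intent": "refund", "true_intent": "cancel_order", "latency_ms": 150, "escalated": True},
    {"predicted_intent": "order_status", "true_intent": "order_status", "latency_ms": 900, "escalated": False},
    {"predicted_intent": "password_reset", "true_intent": "password_reset", "latency_ms": 170, "escalated": True},
]


def containment_rate(records: list[dict]) -> float:
    """Share of interactions resolved without escalation to a human."""
    return sum(not r["escalated"] for r in records) / len(records)


def intent_accuracy(records: list[dict]) -> float:
    """Share of interactions where the predicted intent matches the label."""
    return sum(r["predicted_intent"] == r["true_intent"] for r in records) / len(records)


def p95_latency_ms(records: list[dict]) -> int:
    """95th-percentile latency using the nearest-rank method."""
    latencies = sorted(r["latency_ms"] for r in records)
    rank = math.ceil(0.95 * len(latencies))
    return latencies[rank - 1]


print(containment_rate(logs), intent_accuracy(logs), p95_latency_ms(logs))
```

Tail latency (p95 or p99) is usually more informative than the average here, since a single slow turn is what a caller actually notices.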

Together, these components form the backbone of conversational AI in modern enterprises. They turn conversations from isolated interactions into actionable workflows that drive measurable outcomes.

Enterprise Trends Shaping the Future of Conversational AI

Conversational AI is no longer about answering simple FAQs—it’s evolving into a strategic layer in enterprise communication and customer experience. Several trends are accelerating this transformation:

1. Generative AI Driving Dynamic Conversations

Large language models (LLMs) enable conversational AI to generate adaptive, context-rich responses rather than relying on scripted templates. For enterprises, this means more natural interactions and the ability to scale personalization across millions of customer touchpoints.

2. Real-Time Voice as a Differentiator

Latency makes or breaks customer experience. Enterprises are prioritizing real-time voice AI with sub-200ms response times to ensure conversations feel human-like, even at scale.

Explore why this matters: What Makes a Real-Time Agent Truly Real-Time.

3. Multilingual and Emotion-Aware Capabilities

Enterprises serving global markets need agents that can switch languages, dialects, and tones seamlessly. Emerging models can detect sentiment and adjust responses accordingly, making conversations not just accurate, but empathetic.

4. Omnichannel Continuity

Customers don’t stick to one channel. They might start on a website chatbot, switch to WhatsApp, and end on a phone call. The future of conversational AI is about stateful interactions—where context carries across channels, so customers never repeat themselves.

5. Enterprise-Wide Use Cases Beyond Support

While customer support remains a core driver, enterprises are expanding conversational AI into sales, operations, HR helpdesks, IT troubleshooting, and even compliance workflows—turning it into an enterprise-wide capability rather than a single-department tool.

These trends signal a shift: conversational AI is moving from transactional tool to strategic enabler, creating measurable impact on customer satisfaction, efficiency, and scalability.

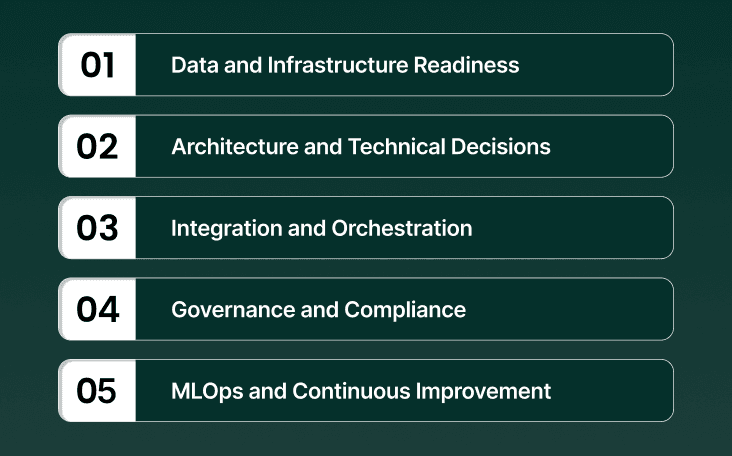

Implementation Framework for Conversational AI in Enterprises

Rolling out conversational AI at scale isn't just about deploying a model. It's about aligning data, architecture, compliance, and operations with enterprise goals. Here's a structured framework enterprises can follow:

1. Data and Infrastructure Readiness

Unified data pipelines: Clean, labeled conversation logs across channels (chat, voice, email).

Domain-specific training: Industry-specific vocabulary (e.g., healthcare terms, financial jargon).

Data governance: Policies for retention, anonymization, and consent management to comply with GDPR and HIPAA.
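Anonymization is often the first governance step applied to conversation logs. The sketch below masks email addresses and phone-like numbers with two deliberately simple regular expressions. These patterns are assumptions for illustration and are nowhere near sufficient for GDPR or HIPAA compliance on their own.

```python
import re

# Simplistic illustrative patterns; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Reach me at jane.doe@example.com or +1 (555) 010-9999."))
```

Redacting before logs enter the training pipeline keeps raw identifiers out of downstream datasets, which simplifies retention and consent handling later.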

2. Architecture and Technical Decisions

Streaming vs. batch processing: Streaming is essential for real-time responsiveness in customer-facing use cases.

Hybrid pipelines: Combining ASR → NLU → Dialogue Manager → TTS for robust multimodal performance.

Observability: Monitor metrics like latency, containment rate, and intent recognition accuracy to maintain reliability.
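The ASR → NLU → Dialogue Manager → TTS chain can be sketched as a sequence of stages with per-stage timing. Every stage here is a stub we made up (the "audio" is just UTF-8 text), so the sketch shows only the wiring and the latency instrumentation, not real speech processing.

```python
import time


def asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # stub: pretend the audio is already transcribed


def nlu(text: str) -> dict:
    intent = "order_status" if "order" in text.lower() else "unknown"
    return {"intent": intent, "text": text}


def dialogue_manager(parsed: dict) -> str:
    if parsed["intent"] == "order_status":
        return "Let me check that order for you."
    return "Could you rephrase that?"


def tts(reply: str) -> bytes:
    return reply.encode("utf-8")  # stub: a real system would synthesize audio


STAGES = [("asr", asr), ("nlu", nlu), ("dialogue_manager", dialogue_manager), ("tts", tts)]


def run_pipeline(audio: bytes) -> tuple[bytes, dict[str, float]]:
    """Run each stage in order and record how long it took, in milliseconds."""
    timings: dict[str, float] = {}
    value = audio
    for name, stage in STAGES:
        start = time.perf_counter()
        value = stage(value)
        timings[name] = (time.perf_counter() - start) * 1000
    return value, timings


audio_out, timings = run_pipeline(b"Where is my order?")
print(audio_out, timings)
```

This version processes one complete turn at a time. A streaming design would instead pass partial results between stages so that downstream work starts before upstream work finishes, which is where most of the latency savings come from.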

Related insight: Why Streaming Architecture Is Non-Negotiable for Real-Time Voice Agents.

3. Integration and Orchestration

CRM and ERP systems: Sync with Salesforce, SAP, or HubSpot for seamless workflows.

Contact center platforms: Enable AI agents to manage calls and hand off to humans when needed.

Knowledge bases: Dynamic retrieval from FAQs, policy docs, and databases for accurate, up-to-date responses.

4. Governance and Compliance

Bias audits and explainability: Ensure models don’t produce discriminatory or opaque responses.

Audit trails: Capture logs for compliance in industries like finance or healthcare.

Deployment options: On-premises or VPC setups for high-security environments.

5. MLOps and Continuous Improvement

Retraining cycles: Use live interaction data to refine models regularly.

Performance monitoring: Track KPIs such as average handle time (AHT) reduction and deflection rates.

Rollback strategies: Ensure safe recovery if a model introduces errors post-deployment.
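A rollback strategy needs an explicit trigger. One simple option, sketched below, compares a newly deployed model against the previous baseline and flags a rollback when containment drops or tail latency rises beyond a tolerance. The metric names and thresholds are illustrative assumptions, not recommended values.

```python
def should_roll_back(
    baseline: dict,
    candidate: dict,
    max_containment_drop: float = 0.02,
    max_latency_increase_ms: float = 50.0,
) -> bool:
    """Return True when the candidate model regresses past either tolerance."""
    containment_drop = baseline["containment_rate"] - candidate["containment_rate"]
    latency_increase = candidate["p95_latency_ms"] - baseline["p95_latency_ms"]
    return containment_drop > max_containment_drop or latency_increase > max_latency_increase_ms


# Hypothetical metric snapshots for the old and new model versions.
baseline = {"containment_rate": 0.62, "p95_latency_ms": 190}
regressed = {"containment_rate": 0.55, "p95_latency_ms": 195}
healthy = {"containment_rate": 0.63, "p95_latency_ms": 200}

print(should_roll_back(baseline, regressed), should_roll_back(baseline, healthy))
```

Running the check on a small share of traffic before a full rollout limits how many customers see a regression.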

This framework ensures conversational AI moves from a pilot project to a scalable, enterprise-grade system—delivering both customer impact and operational resilience.

Vendor Selection Checklist for Conversational AI Solutions

Choosing the right conversational AI solution can make the difference between a pilot that stalls and a system that scales enterprise-wide. Here are the critical factors enterprises should evaluate:

1. Real-Time Performance and Latency

Sub-200 ms response times for natural interactions.

Benchmarks for word error rate (WER) and Mean Opinion Score (MOS) for speech quality.

Ability to handle thousands of concurrent sessions without degradation.
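When comparing vendor benchmarks, it helps to know how word error rate is computed: the word-level edit distance (substitutions, insertions, deletions) between a reference transcript and the ASR output, divided by the number of reference words. A minimal sketch, with whitespace tokenization as a simplifying assumption:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level edit distance / number of words in the reference."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, substitution))
        prev = curr
    return prev[-1] / len(ref)


# One deleted word ("my") and one substituted word ("password" -> "passwords").
print(word_error_rate("please reset my account password", "please reset account passwords"))
```

Published WER figures depend heavily on text normalization (casing, punctuation, numerals) and on the test set, so it is worth asking vendors how their numbers were produced.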

2. Security and Deployment Options

SOC 2, HIPAA, and GDPR compliance.

Options for on-premises or VPC deployment in industries with strict data residency needs.

End-to-end encryption of both voice and text interactions.

3. Integration Flexibility

APIs and SDKs for CRMs (Salesforce, HubSpot), ERPs (SAP, Oracle), and contact centers.

Pre-built connectors for popular collaboration tools (Teams, Slack, Zoom).

Support for event-driven architectures and streaming data pipelines.

4. Observability and Monitoring

Dashboards tracking latency, containment rate, escalation triggers, and customer satisfaction scores.

Automated alerts for performance anomalies.

Transparent logs for audits and compliance checks.

5. Multilingual and Personalization Capabilities

Native support for multiple languages and dialects.

Ability to adjust tone, emotion, and style to align with brand voice.

See an example: Evaluating the Lightning-v2 Multilingual TTS Model.

6. Pricing and Scalability

Flexible pricing models: per-interaction, per-seat, or enterprise license.

Cost predictability as adoption scales across business units and geographies.

Clear ROI benchmarks from existing deployments.

By using this checklist, enterprises can move past generic demos and select conversational AI solutions that deliver real-world performance, compliance, and ROI.

KPIs and Measuring ROI of Conversational AI

Enterprises don’t invest in conversational AI because it’s innovative—they invest because it delivers measurable business outcomes. Tracking the right key performance indicators (KPIs) is essential for proving value and guiding continuous improvement.

1. Containment Rate

Percentage of interactions resolved entirely by the AI agent without human escalation. A higher containment rate means lower support costs and faster resolutions.

2. Average Handle Time (AHT) Reduction

AI agents can shorten call durations by resolving queries faster or by pre-qualifying customers before handing them to a human agent. Monitoring AHT highlights efficiency gains.

3. Deflection Percentage

The share of queries deflected from high-cost channels (like voice) to lower-cost ones (like chat or self-service). Even small improvements here can lead to substantial savings.

4. Net Promoter Score (NPS) and CSAT Uplift

Customer satisfaction should be tracked before and after AI deployment. Effective conversational AI improves experience by reducing wait times and providing consistent answers.

5. Cost Per Interaction

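AI-driven interactions typically cost far less than human-led ones. Enterprises can estimate annual savings by multiplying the reduction in cost per interaction by the total interaction volume; ROI is then those savings, net of the investment in the AI system, divided by that investment.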

Example ROI Snapshot

An enterprise contact center handling 1 million calls annually at $5 per human-handled call could:

Deflect 30% of calls with AI agents.

Save up to $1.5M annually (300,000 deflected calls × $5 each), before subtracting the cost of running the AI agents, while also reducing wait times and improving customer satisfaction.
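The arithmetic behind this snapshot is easy to reproduce and adapt. In the sketch below, the call volume, deflection rate, and $5 human cost come from the example above; the $0.50 cost per AI-handled call and the $500,000 annual investment are purely hypothetical values added to show how the picture changes once AI costs are included.

```python
def annual_savings(calls: int, deflection_rate: float, human_cost: float, ai_cost: float = 0.0) -> float:
    """Savings from handling a share of calls with AI instead of human agents."""
    return calls * deflection_rate * (human_cost - ai_cost)


def roi(savings: float, investment: float) -> float:
    """Return on investment: net gain divided by the amount invested."""
    return (savings - investment) / investment


# Snapshot from the article: AI-handled calls treated as free.
gross = annual_savings(calls=1_000_000, deflection_rate=0.30, human_cost=5.00)

# Hypothetical refinement: each AI-handled call costs $0.50,
# and the platform costs $500,000 per year to run.
net = annual_savings(calls=1_000_000, deflection_rate=0.30, human_cost=5.00, ai_cost=0.50)

print(f"Gross savings: ${gross:,.0f}")
print(f"Savings after AI cost per call: ${net:,.0f}")
print(f"ROI on a $500,000 investment: {roi(net, 500_000):.0%}")
```

Swapping in your own cost per interaction and investment figures turns the headline number into an estimate you can defend internally.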

To ensure reliable ROI, enterprises should benchmark these metrics continuously and align them with broader business goals like customer retention, operational efficiency, and revenue growth.

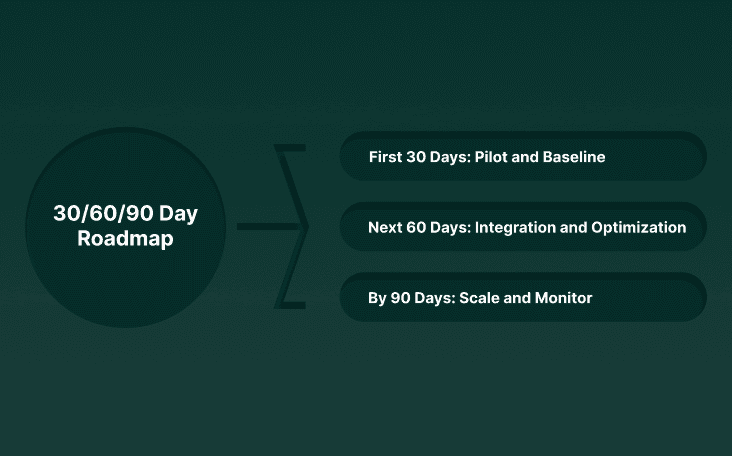

30/60/90 Day Roadmap for Enterprise Adoption

A successful conversational AI rollout doesn’t happen overnight. Enterprises that succeed treat it as a staged journey—balancing speed with governance. Here’s a practical roadmap:

First 30 Days: Pilot and Baseline

Choose one high-volume use case (e.g., password resets, order status, appointment scheduling).

Deploy AI agents on a single channel (chat or voice) to limit complexity.

Establish baseline KPIs: containment rate, AHT, cost per interaction, CSAT.

Collect qualitative feedback from both customers and agents.

Days 31–60: Integration and Optimization

Expand integrations into CRMs, ticketing, or knowledge bases for richer responses.

Enable human handoff flows, ensuring smooth escalation when AI can’t resolve.

Introduce retraining loops with real-world conversation logs to improve accuracy.

Start testing multilingual support or domain-specific vocabulary.

Days 61–90: Scale and Monitor

Add voice channels with low-latency speech-to-text and text-to-speech.

Deploy monitoring dashboards to track performance metrics in real time.

Expand to two or more business units (e.g., sales and support).

Implement governance guardrails: bias audits, compliance checks, and rollback strategies.

By the end of 90 days, enterprises should have a scalable, compliant, and data-driven conversational AI system—ready to expand across geographies, languages, and customer touchpoints.

Risks and Mitigations for Enterprise-Grade Conversational AI

While conversational AI promises measurable impact, enterprise adoption comes with risks that must be addressed upfront. A proactive risk framework helps ensure deployments are scalable, compliant, and trusted.

1. Data Privacy and Compliance Risks

Handling sensitive customer data (health, financial, personal) introduces GDPR, HIPAA, and PCI obligations.

Mitigation: Deploy in on-premises or VPC environments with end-to-end encryption, role-based access controls, and audit trails.

2. Hallucinations and Model Errors

Generative models may produce inaccurate or misleading responses, eroding customer trust.

Mitigation: Use fallback flows to human agents, apply prompt safety filters, and continuously monitor output accuracy.
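A fallback flow can be as simple as a gate in front of every generated reply. The sketch below hands off to a human when a reply's confidence score is low or when it cites no supporting source. The `DraftReply` fields, the 0.8 threshold, and the existence of a usable confidence score are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class DraftReply:
    text: str
    confidence: float          # model or verifier score in [0, 1] (assumed to be available)
    cited_sources: list[str]   # knowledge-base passages backing the answer


HANDOFF_MESSAGE = "Let me connect you with a colleague who can help."


def finalize(draft: DraftReply, min_confidence: float = 0.8) -> tuple[str, bool]:
    """Return (message, escalated). Escalate low-confidence or unsupported replies."""
    if draft.confidence < min_confidence or not draft.cited_sources:
        return HANDOFF_MESSAGE, True
    return draft.text, False


grounded = DraftReply("Refunds take 5 business days.", 0.93, ["refund-policy.md"])
unsupported = DraftReply("Refunds are instant.", 0.95, [])

print(finalize(grounded))
print(finalize(unsupported))
```

Requiring a cited source in addition to a confidence score guards against answers that sound certain but are not backed by the knowledge base.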

3. Integration Bottlenecks

Legacy CRMs, ERPs, and telephony systems often slow down adoption or limit AI functionality.

Mitigation: Adopt modular integration strategies using APIs and middleware, with phased rollouts rather than “big bang” deployments.

4. Employee and Customer Adoption Challenges

Employees may fear job displacement; customers may resist non-human interactions.

Mitigation: Position AI as an augmentation tool that reduces repetitive tasks, while emphasizing human-AI collaboration in communications.

5. Vendor Lock-In

Relying on proprietary black-box platforms can restrict flexibility and increase costs over time.

Mitigation: Choose providers with transparent benchmarks, open APIs, and clear migration paths.

Enterprises that address these risks early can ensure conversational AI becomes a trusted, long-term capability rather than a stalled pilot.

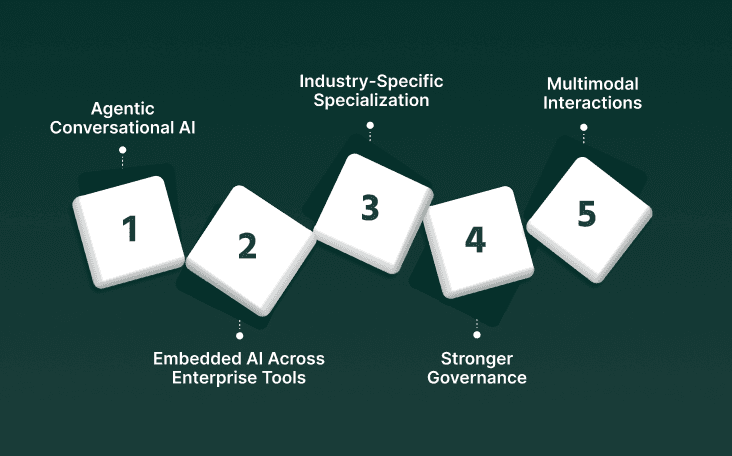

The Road Ahead: Future of Conversational AI in Enterprises

Conversational AI is shifting from a customer support tool to a strategic enterprise capability—embedded across functions, channels, and industries. The next wave will be defined by deeper intelligence, stronger compliance frameworks, and tighter integration with enterprise ecosystems.

1. Agentic Conversational AI

AI agents will evolve from reactive assistants into proactive, goal-driven systems. Instead of waiting for a query, they’ll anticipate customer needs, trigger workflows, and optimize outcomes autonomously.

2. Embedded AI Across Enterprise Tools

Conversational AI won’t be confined to chatbots or call centers. Expect embedded agents in platforms like Microsoft Teams, Zoom, or Slack—summarizing meetings, assigning tasks, and ensuring follow-ups happen without manual effort.

3. Industry-Specific Specialization

The future isn’t one-size-fits-all. Conversational AI will specialize by domain:

Healthcare: patient intake, follow-up scheduling, compliance documentation.

Banking & Insurance: claims processing, fraud alerts, customer onboarding.

Retail & E-commerce: real-time order updates, voice-enabled shopping, personalized recommendations.

4. Stronger Governance and Ethical Standards

Regulators are catching up with AI adoption. Frameworks for explainability, bias audits, and data transparency will become mandatory, making governance as important as accuracy.

5. Real-Time and Multimodal Interactions

The next leap is real-time, multimodal AI—where customers move fluidly between text, voice, and even video interactions, with the AI maintaining full context across formats.

How Smallest.ai Accelerates Enterprise Conversational AI

Enterprises don’t just need conversational AI that works—they need systems that are real-time, compliant, and scalable across global markets. That’s exactly what Smallest.ai delivers.

1. Real-Time, Human-Like Conversations

With sub-100ms latency, Smallest ensures conversations feel as natural as speaking to a human. This responsiveness is critical for customer-facing use cases where even small delays can erode trust.

2. Seamless Enterprise Integration

Smallest’s APIs and SDKs make it simple to connect conversational AI with CRMs, ERPs, telephony systems, and collaboration tools. Enterprises can deploy quickly without ripping out existing infrastructure.

3. Multilingual Voice and Personalization

With Lightning-v2 multilingual TTS, enterprises can engage customers in their preferred language, accent, and tone—providing authentic experiences across global markets.

4. Enterprise-Grade Security and Compliance

Whether in finance, healthcare, or government, compliance isn’t optional. Smallest supports on-premises and VPC deployments, with alignment to SOC 2, HIPAA, GDPR, and PCI standards.

Measurable Business Impact

By adopting Smallest’s conversational AI:

Support costs shrink through automation of high-volume inquiries.

Customers enjoy faster, more personalized experiences.

Enterprises scale globally with confidence, knowing data security and performance are never compromised.

Ready to see how conversational AI can transform your enterprise? Explore Smallest.ai Enterprise Solutions today.

FAQs on Conversational AI in Enterprises

1. How does conversational AI differ from traditional chatbots?

Traditional chatbots follow predefined scripts, while conversational AI uses NLU, context tracking, and real-time decisioning to deliver more natural, adaptive conversations.

2. What are the key components of conversational AI architecture?

Core components include NLU/NLP, dialogue management, speech interfaces (ASR + TTS), enterprise integrations, and monitoring for performance and compliance.

3. What KPIs should enterprises track for conversational AI?

Containment rate, AHT reduction, deflection percentage, NPS/CSAT uplift, and cost per interaction are essential for measuring ROI.

4. What challenges do enterprises face when implementing conversational AI?

Common challenges include data privacy compliance, hallucinations, legacy system integration, employee adoption, and vendor lock-in.

5. How is generative AI shaping the future of conversational AI?

Generative models enable richer, more context-aware interactions—powering dynamic conversations that adapt to tone, intent, and domain-specific knowledge.

6. What should enterprises look for when evaluating conversational AI vendors?

Key factors include real-time performance, security and compliance, integration flexibility, multilingual capabilities, and transparent ROI metrics.
