A deep dive into OpenAI’s Realtime API—latency, conversation flow, tool-calling, noise resilience, and multilingual handling tested in real calls.

Wasim Madha

Updated on

February 19, 2026 at 6:58 AM

Among the latest advances in real-time voice AI, OpenAI’s gpt-realtime stands out as a production-ready speech-to-speech system designed to replace traditional cascaded pipelines.

This study delivers a comprehensive evaluation across critical dimensions: latency, interruptions, noise resilience, voice stability, memory, language switching, tool-calling, and scalability.

Using a reproducible test harness, we conducted controlled calls in varied conditions—short exchanges, long conversations, noisy environments, and multilingual shifts.

The objective is clear: establish what gpt-realtime can reliably achieve today, where it excels, and where its limitations remain.

Findings are based on controlled trials, manual annotations, and a single testing environment. Broader validation across varied networks, larger sample sizes, and automated metrics would provide stronger generalization to real-world conditions.

Latency

Methodology

Latency was defined as the user-perceived delay between the end of spoken input and the onset of the model’s audio response.

All experiments used the gpt-realtime model (tested September 2025). Calls were placed from India on a local machine integrated with telephony, under stable broadband conditions.

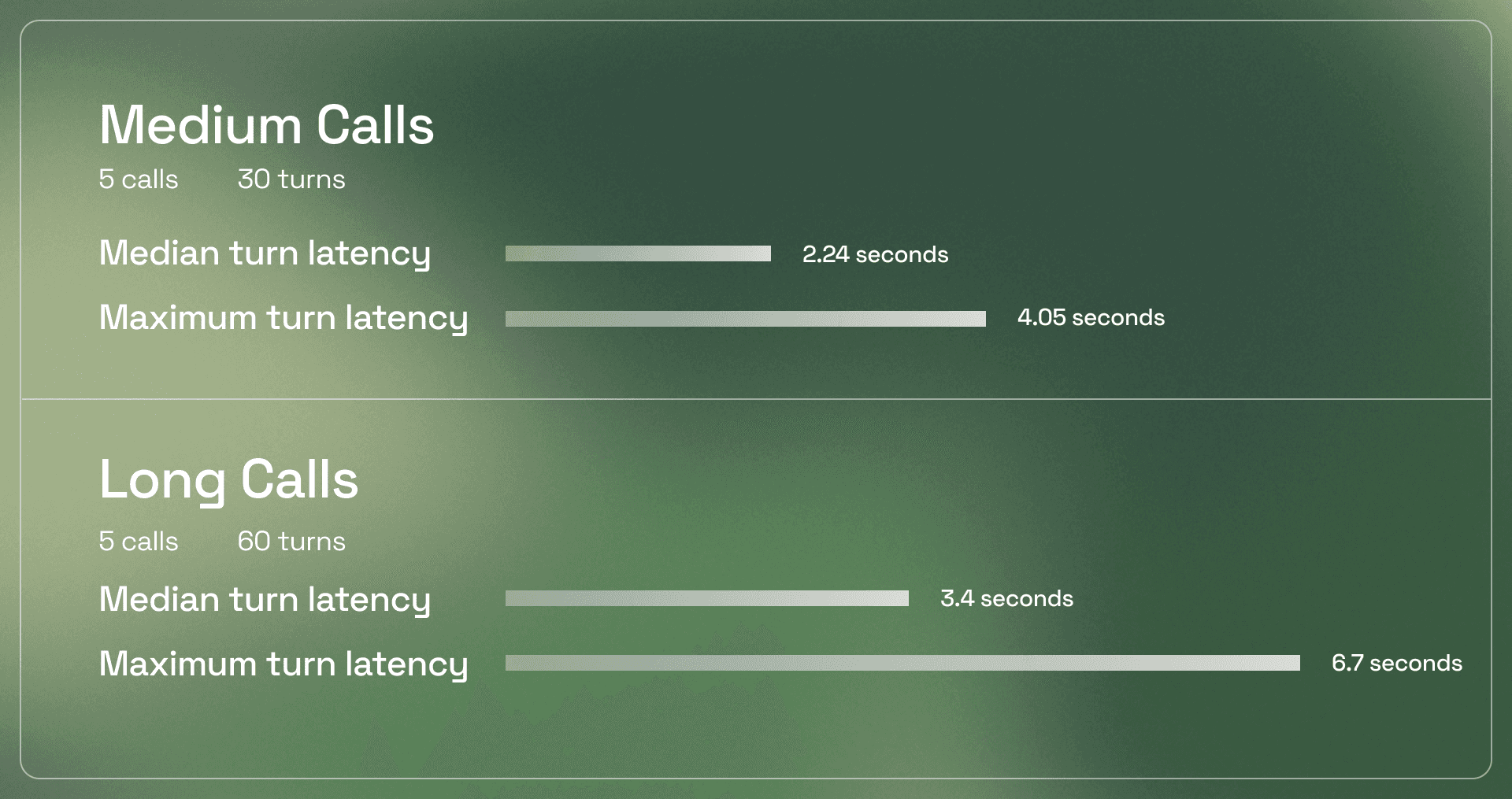

Two sets of calls were performed. The first involved five medium-length calls, each lasting approximately 2.7 minutes, totaling 30 conversational turns. The second consisted of five extended calls lasting 10–12 minutes, totaling 60 turns. For each call, audio streams were captured and turn boundaries annotated manually using Audacity to compute per-turn latencies. Additional calls exceeding five minutes were conducted to assess stability during prolonged interaction.
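The per-turn computation described above can be sketched as follows. This is a minimal illustration, assuming the manual annotations are exported as pairs of timestamps (end of user speech, onset of model audio). The `turns` values and the `turn_latencies` helper are hypothetical and are not the measured data from this study.

```python
from statistics import median

# Hypothetical annotations for one call, in seconds from the start of the recording:
# (end of user speech, onset of model audio). Illustrative values only.
turns = [
    (4.10, 6.30),
    (15.80, 18.05),
    (27.40, 29.50),
    (41.00, 45.05),
]

def turn_latencies(boundaries):
    """Return the response delay (seconds) for each annotated turn."""
    latencies = []
    for user_end, model_start in boundaries:
        if model_start < user_end:
            # An onset before the end of user speech means the annotation is off
            # (or the model barged in), so it should not count as a latency sample.
            raise ValueError("model onset precedes end of user speech")
        latencies.append(model_start - user_end)
    return latencies

latencies = turn_latencies(turns)
summary = {
    "turns": len(latencies),
    "median_s": round(median(latencies), 2),
    "max_s": round(max(latencies), 2),
}
print(summary)
```

Reporting the median alongside the maximum keeps a single slow turn from distorting the typical figure while still exposing the worst case a caller would experience.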

Results

Medium calls (5 calls, 30 turns): Median turn latency was 2.24 seconds, with a maximum of 4.05 seconds.

Long calls (5 calls, 60 turns): Median turn latency was 3.4 seconds, with a maximum of 6.7 seconds.

Analysis

The system maintains a responsive feel in short exchanges, but latency spikes are more pronounced during extended sessions. While overall conversational fluidity is preserved, predictability becomes an important factor for production workloads, particularly in long-form interactions.

Limitations

These measurements were based on manual annotation and a single testing environment. Broader validation across varied network conditions and automated timestamping would further strengthen the findings.

Conversational Flow & Interruptions

Methodology

Across ~20 test calls (15 short and 5 extended), interruptions were introduced systematically, ranging from name clarifications to abrupt mid-sentence interjections. Three distinct conversational flows were tested:

Hotel Booking

Financial Advisor Consultation

Real Estate Lead Qualification

We measured three outcomes:

Whether the system recovered to the correct flow.

The latency of that recovery.

Whether the goal of the call was ultimately accomplished (e.g., booking confirmed, financial query answered, or lead qualified).
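One way to record these three outcomes per call is sketched below. The `CallOutcome` structure, its field names, and the sample records are assumptions for illustration. Recovery is counted in turns here, which matches how the results are reported, although a timestamp-based measure would work equally well.

```python
from dataclasses import dataclass

@dataclass
class CallOutcome:
    flow: str                  # e.g. "Hotel Booking"
    recovery_turns: list[int]  # turns needed to get back on track after each interruption
    goal_achieved: bool        # booking confirmed, query answered, or lead qualified

def summarize(calls):
    """Aggregate recovery and goal-completion counts across annotated calls."""
    all_recoveries = [turns for call in calls for turns in call.recovery_turns]
    return {
        "calls": len(calls),
        "interruptions": len(all_recoveries),
        "recovered_within_one_turn": sum(1 for turns in all_recoveries if turns <= 1),
        "goals_achieved": sum(1 for call in calls if call.goal_achieved),
    }

# Hypothetical annotations, not the study's data.
calls = [
    CallOutcome("Hotel Booking", [1, 1], True),
    CallOutcome("Financial Advisor Consultation", [1], True),
    CallOutcome("Real Estate Lead Qualification", [1, 1, 1], True),
]
report = summarize(calls)
print(report)
```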

Results

In every interruption tested, the system recovered within a single turn, maintaining both context and instruction fidelity.

Interruptions involving names and confirmation steps were handled with particular ease. Most importantly, the system never broke flow: in every test call across all three flows, including those with multiple interruptions, it achieved the intended call objective.

Analysis

These results provide strong evidence of improved robustness in conversational flow handling.

Limitations

While the findings are based on controlled conditions and manual annotation, the sample size and inclusion of three distinct flows (Hotel Booking, Financial Advisor, and Real Estate Qualification) make them a reliable early indicator.

Language Switch Handling

Methodology

We evaluated three multilingual scenarios, with 10 turns each, under a fixed prompt:

Explicit Switch to Hindi – Caller explicitly requests Hindi (e.g., “Can we continue in Hindi?”).

Explicit Switch Back to English – Caller explicitly requests return to English.

Implicit Switch to Hindi – Caller begins responding in Hindi without explicitly requesting a change.

Prompt

LANGUAGE SWITCH HANDLING

- If the caller says “Can we continue in Hindi?” or responds in Hindi, switch to Hindi for all future dialogue (questions, confirmations, closings).

- If the caller begins speaking in Hindi, immediately switch to Hindi and continue in Hindi for all subsequent turns.

Results

In explicit switch-to-Hindi tests, success was 10/10.

In explicit switch-back-to-English tests, success was 10/10.

In implicit switch-to-Hindi tests, success was 6/10.

In 4/10 implicit cases, the model continued in English despite the user speaking Hindi.
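With only 10 trials per scenario, it helps to see how wide the plausible range around each success rate is. The sketch below applies the standard Wilson score interval to the counts reported above. Using this interval is our illustrative choice and was not part of the original test harness.

```python
from math import sqrt

def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot at the extremes.
    return max(0.0, center - half), min(1.0, center + half)

results = [
    ("explicit switch to Hindi", 10),
    ("explicit switch back to English", 10),
    ("implicit switch to Hindi", 6),
]
for label, successes in results:
    low, high = wilson_interval(successes, 10)
    print(f"{label}: {successes}/10, interval {low:.2f} to {high:.2f}")
```

For the implicit case, 6/10 corresponds to an interval of roughly 0.31 to 0.83, so the true implicit-switch success rate could be noticeably better or worse than 60%.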

Analysis

The system is highly reliable when language changes are explicitly instructed, but its adherence weakens when the user relies on implicit switching. This gap highlights a divergence between prompt expectations and real behavior.

Limitations

Findings are based on short controlled calls; robustness in spontaneous multilingual conversations remains to be validated.

Long Calls & Guardrailing: Holding Context

Methodology

We extended conversations beyond five minutes to evaluate memory, recall, and adherence to guardrails. A total of five calls were conducted over telephony, with an average duration of ~10 minutes. During these calls, the user repeatedly probed for information outside scope (e.g., system prompts, model details, user prompts).

Results

Across all calls, the agent stayed on track and did not divert from the scripted flow.

Guardrails were respected — it did not leak system-level details.

Despite prolonged irrelevant queries, the system consistently maintained context and ultimately carried the flow through to completion.

Recall for names and emails remained strong.

Latency increased once calls passed the five-minute mark, averaging ~5–6 seconds per turn from that point on.

Analysis

The system demonstrated strong resilience in extended conversations. Even with repeated distractions and off-topic probing, it adhered to the intended flow, preserved guardrails, and completed the booking process without deviation.

Limitations

Results are limited to 10-minute calls in one environment. Longer or varied conditions may surface different weaknesses.

Tool Calling

Methodology

We tested tool-calling behavior in approximately ten short calls of 2–3 minutes each, focusing on both end_call and search_corpus. For search_corpus, we created a dummy knowledge base and instructed the model to retrieve answers from it. Queries were resolved using simple string matching against this knowledge base to verify whether the model could correctly extract and surface the requested information.
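A minimal sketch of how such tool handlers might look on the application side is shown below. The knowledge-base entries, the argument names (`query`, `reason`), and the `dispatch` helper are all hypothetical. Only the tool names `search_corpus` and `end_call` and the string-matching approach come from the test setup described above.

```python
# Hypothetical dummy knowledge base; entries are placeholders, not the corpus used in the tests.
KNOWLEDGE_BASE = {
    "check-in time": "Check-in starts at 2 PM.",
    "check-out time": "Check-out is by 11 AM.",
    "deluxe room": "Deluxe rooms include a king bed and breakfast.",
}

def search_corpus(query: str) -> str:
    """Return every entry whose key appears in the query (case-insensitive substring match)."""
    normalized = query.lower()
    hits = [answer for key, answer in KNOWLEDGE_BASE.items() if key in normalized]
    return " ".join(hits) if hits else "No matching entry found."

def end_call(reason: str) -> str:
    """Stand-in for hanging up; a real handler would close the telephony session."""
    return f"call ended: {reason}"

# Map tool names requested by the model to local handlers.
TOOLS = {"search_corpus": search_corpus, "end_call": end_call}

def dispatch(name: str, arguments: dict) -> str:
    """Run the named tool with the model-supplied arguments."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**arguments)

print(dispatch("search_corpus", {"query": "What is the check-in time?"}))
print(dispatch("end_call", {"reason": "booking confirmed"}))
```

Keeping the lookup as plain substring matching makes it easy to verify by hand whether the model surfaced the right entry, at the cost of missing paraphrased queries.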

Results

For end_call triggered by goal completion, the model invoked the function correctly in 15/15 cases.

For end_call triggered by explicit user request, the model succeeded in 6/10 cases but continued the flow in 4/10 cases instead of ending.

In all cases where search_corpus was expected, the function was invoked correctly.

The agent reliably extracted relevant booking details before triggering tool calls.

Conversational flow remained smooth, and tool use did not break natural dialogue.

Analysis

Tool-calling performance was generally strong. The system consistently executed end_call when the booking flow was complete, showing deterministic behavior for goal-driven termination. However, it was less reliable at respecting explicit user requests to end, where it occasionally persisted in dialogue instead of invoking end_call.

Calls to search_corpus were accurate and contextually appropriate. Overall, the system balanced natural conversation with functional execution, though the gap in honoring explicit termination requests indicates an area for improvement.

Limitations

The test set was small and focused on hotel booking; broader tool scenarios are needed for confirmation.

Example:

Description: Agent calls search_corpus for check-in/check-out times and Deluxe room details, then end_call after booking confirmation.

Noise & Speaker Resilience

Methodology

All tests were conducted over telephone calls using a mobile device on speaker mode. Two noisy conditions were evaluated:

Background Noise (Synthetic Sources): Five calls with varied background audio (e.g., YouTube videos, customer-care chatter), totaling ~40 turns.

Overlapping Real Voices: Five calls with other people speaking loudly near the device, simulating competing human speech.

Results

In the background noise condition, the model generally handled synthetic interference. Confusion occurred in ~6 turns, where it either stopped unexpectedly or allowed interruptions to break flow.

In the overlapping voices condition, the model struggled in all five calls (5/5). It frequently repeated entire flows and failed to maintain continuity in the conversation.

Analysis

The system demonstrates moderate resilience to synthetic noise, with only occasional disruption. However, with overlapping human voices, the model cannot separate the primary caller from competing speakers, leading to breakdowns in dialogue flow. This sensitivity indicates a gap in robustness when deployed in uncontrolled real-world environments with multiple human voices present.

Limitations

Tests were limited to speakerphone conditions. Results may differ with headset or telephony hardware changes.

Conclusion

OpenAI’s Realtime API shows just how far real-time voice AI has come. Conversations feel natural, interruptions are handled smoothly, and switching between languages is more seamless than ever. For many teams, it finally delivers the demo-ready experience that cascaded pipelines struggled to match.

But it’s not flawless. Structured outputs, long-context stability, and fine-grained controllability remain areas to watch—especially as latency stretches to ~5–6 seconds in extended sessions.

With availability on Smallest Atoms, the path forward is flexible. Teams can tap into OpenAI’s strengths while using Atoms for enterprise-grade configurability, workflow integration, and deployment control. The result: real-time voice AI that can be mixed, matched, and tuned to fit your product and your customers, without compromise.