Related Blogposts

Sudarshan Kamath

Updated on

February 16, 2026 at 8:43 AM

Every spoken interaction influences how users experience technology. A misheard command, an incorrect transcription, or a delayed response can frustrate users, disrupt workflows, and reduce trust. At scale, small recognition errors in speech-to-text systems compound into bigger inefficiencies for businesses.

That is why teams implementing Automatic Speech Recognition (ASR) often ask: how can we reliably convert speech into accurate text and actionable information across accents, noise conditions, and real-time conversations? The stakes are high. Industry data shows the global conversational AI market is rapidly expanding, with forecasts projecting more than 19.6% compound annual growth through 2025-2031 as adoption accelerates across customer service, sales, and enterprise automation.

ASR goes beyond transcription, it enables AI systems to understand context, detect intent, and trigger meaningful actions. In this guide, we break down how ASR works, the technologies behind it, its value across industries, challenges to plan for, and the next steps in voice-driven AI systems.

Key Takeaways

ASR Converts Speech to Action: Modern systems go beyond transcription to interpret intent, context, and meaning for actionable results.

Accuracy Relies on Layered Technologies: Signal processing, feature extraction, deep learning, language models, and NLP improve precision across accents and noisy environments.

Context and Intent Detection Enable Automation: Understanding what a user wants allows AI systems to respond efficiently.

Applications Span Multiple Industries: ASR is used in customer service, healthcare, automotive, enterprise automation, and accessibility.

Challenges Can Be Managed: Accent variation, background noise, domain-specific terms, and changing language are addressed with adaptive models, diverse training, and continuous updates.

What Is Automatic Speech Recognition (ASR)?

ASR captures spoken language and converts it into structured digital data using AI models. Modern systems combine machine learning, deep neural networks, and natural language processing to:

Interpret context

Detect intent

Support real-time actions

Unlike traditional speech recognition, ASR doesn’t just transcribe words, it helps systems respond intelligently in call centers, virtual assistants, AI agents, and smart devices.

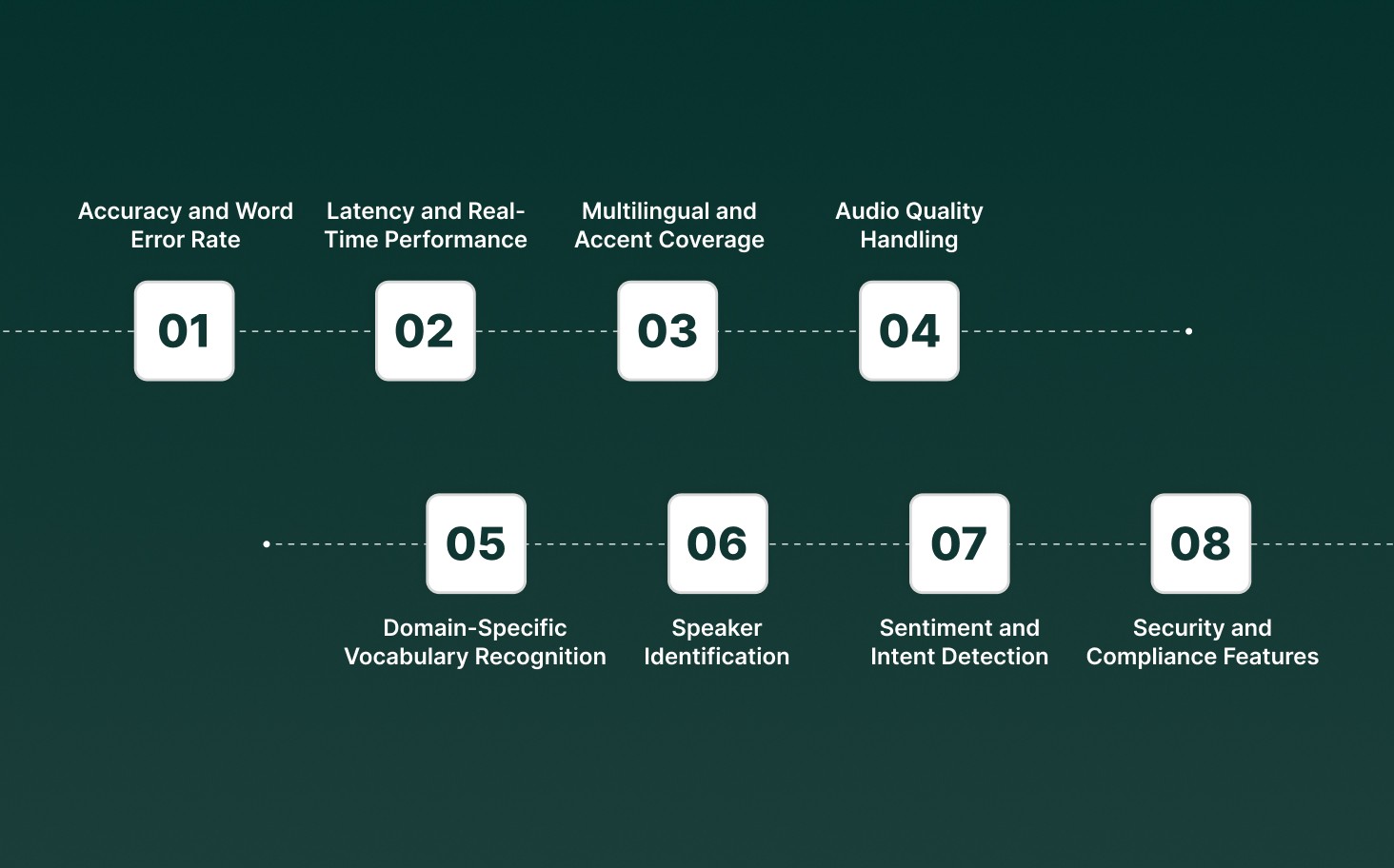

Automatic Speech Recognition (ASR) does more than just turn speech-to-text. It drives voice-based workflows, powers AI agents, and enables real-time insights. Here are the main metrics and features every strong ASR system should have:

Accuracy and Word Error Rate (WER): ASR should transcribe speech with minimal errors. Correctly recognizing “account number 4521” during a customer support call prevents transaction errors.

Latency and Real-Time Performance: The system should process speech in real time, supporting live conversations without noticeable delay. Virtual assistants in banking apps can respond within sub-second times to maintain smooth interactions.

Multilingual and Accent Coverage: Effective ASR can recognize multiple languages and regional accents. An AI agent in India accurately handles both Hindi-accented and Tamil-accented English.

Noise Robustness and Audio Quality Handling: ASR must maintain accuracy in noisy environments or with low-quality microphones. Transcription in a busy call center remains precise even with background chatter.

Domain-Specific Vocabulary Recognition: The system should accurately handle technical terms, jargon, and numbers. Healthcare AI agents capture drug names like “Amoxicillin” and medical codes without errors.

Speaker Identification and Diarization: ASR distinguishes between multiple speakers in a conversation, enabling accurate attribution of statements during conference calls.

Sentiment and Intent Detection: ASR can analyze emotion and intent to guide responses. Detecting frustration in a customer’s voice triggers escalation to a human agent.

Security and Compliance Features: Enterprise ASR encrypts sensitive voice data and complies with regulations such as HIPAA and GDPR, ensuring patient consultations in telemedicine platforms are protected.

With these features in mind, the next step is to understand how ASR differs from older speech recognition methods.

Traditional vs Automatic Speech Recognition (ASR): Key Differences

Understanding the differences between traditional speech recognition and modern ASR helps businesses choose the right technology for their needs. While both systems convert speech into text, ASR goes further by capturing context, intent, and real-time outbound interactions.

The table below highlights the main distinctions between the two approaches.

Feature | Traditional Speech Recognition | Automatic Speech Recognition (ASR) |

Accuracy | Works well with clear, slow speech but struggles with accents or background noise | Handles multiple accents, noisy environments, and varied speech patterns effectively |

Context Understanding | Limited – focuses only on converting words to text | Strong – understands intent, context, and can support follow-up actions |

Real-Time Use | Often offline or batch processing | Supports live transcription and immediate response for AI agents and IVRs |

Adaptability | Fixed rules and dictionaries; updates require manual intervention | Continuously improves with training data and user feedback |

Applications | Simple voice-to-text apps, dictation software | AI-powered call centers, virtual assistants, customer support automation |

Integration | Basic – limited connection to other systems | Advanced – connects with AI workflows, CRMs, and enterprise tools |

This comparison shows why ASR is better suited for modern applications, enabling smarter, faster, and more reliable voice-driven workflows. Next, let’s see how ASR processes speech step by step.

How ASR Processes Speech: 7 Essential Steps and Core Components

To achieve accurate and reliable results, each stage of speech processing plays a critical role. From capturing the audio to refining the final text, these steps ensure voice-driven systems perform efficiently across applications

Audio Input Capture: The pipeline begins by recording clear, high-quality audio from microphones, phones, or other sources. Capturing sound accurately sets the foundation for the entire recognition process, as poor input can significantly affect downstream performance.

Preprocessing: Once audio is captured, it is cleaned to remove background noise, echoes, and other inconsistencies. This step ensures that the system analyzes only the meaningful parts of speech, improving recognition accuracy.

Feature Extraction: Clean audio is then converted into measurable features such as frequency, pitch, and spectral patterns. These features provide the system with a structured representation of speech, enabling it to detect subtle differences in sounds.

Acoustic Modeling: Using the extracted features, the acoustic model identifies phonemes, the smallest units of sound in speech. This allows the system to distinguish similar-sounding words and recognize speech even in challenging environments.

Language Modeling: Acoustic data alone is often not enough for accurate transcription. The language model adds context by analyzing grammar, word probabilities, and common phrases. This helps the system predict the most likely sequence of words, thereby reducing transcription errors.

Decoding: The decoder combines outputs from the acoustic and language models to generate the final transcription. This step balances phonetic accuracy with contextual understanding to produce the most reliable text.

Postprocessing: The final stage refines the text by adding punctuation, formatting, and domain-specific corrections. Postprocessing ensures the output is readable, professional, and ready for use in applications such as voice assistants, AI agents, and analytics platforms.

And here’s how enterprises can use Pulse, Smallest.ai's speech-to-text engine, to transcribe live calls in real time, monitor customer sentiment, and feed insights directly into dashboards or CRMs. Pulse supports multiple languages and accents, making it ideal for fast, accurate, and actionable voice workflows.

Types of Automatic Speech Recognition Algorithms

Speech recognition algorithms form the core of Automatic Speech Recognition (ASR), converting spoken words into text for real-time applications and actionable insights. These algorithms can be implemented using traditional statistical methods or advanced deep learning models.

Traditional Statistical Algorithm

Traditional ASR relies on techniques such as Hidden Markov Models (HMM) and Dynamic Time Warping (DTW):

Hidden Markov Models (HMM): Trained on transcribed audio, HMMs predict word sequences by adjusting parameters to maximize the likelihood of observed speech.

Dynamic Time Warping (DTW): Uses dynamic programming to compare unknown speech with known word sequences, identifying the closest match based on timing and pronunciation.

These methods perform well under controlled conditions but often struggle with accents, background noise, and natural conversational speech.

Deep Learning Algorithm for Modern ASR

Deep learning approaches have revolutionized speech recognition, delivering higher accuracy and greater adaptability. Neural network-based models can handle dialects, multiple languages, and noisy environments, producing reliable transcription even in complex scenarios.

Popular acoustic models include Quartznet, Citrinet, and Conformer, each suited to different performance and application requirements.

Deep learning ASR goes beyond transcription; it can understand context, detect intent, and manage multi-turn conversations, making it ideal for virtual assistants, customer support AI, and enterprise automation.

Now that we understand the pipeline and algorithms, let’s look at the technologies powering ASR.

Key Technologies Behind Automatic Speech Recognition

Behind every accurate transcription and intelligent voice interaction lies a stack of advanced technologies. Each layer of this stack works together to clean audio, extract meaningful features, recognize patterns, and interpret intent, ensuring high accuracy and actionable output across industries.

Here’s a breakdown of the key technologies that drive ASR systems and how they contribute to performance:

Technology Layer | Function | How It Works | Why It Matters |

Signal Processing | Prepares audio | Removes noise, normalizes volume, and separates speech from silence | Provides cleaner input for accurate recognition |

Feature Engineering | Converts audio to data | Extracts frequency, pitch, energy, MFCC features | Transforms sound into structured AI-ready inputs |

Machine & Deep Learning | Learns patterns | Uses neural networks to map features to words | Improves adaptability and precision |

Neural Network Architectures | Handles time-series speech | CNNs, RNNs, and Transformers detect phonemes & word sequences | Enables natural, continuous recognition |

Hidden Markov Models | Predicts sequences | Probability-based modeling reduces word errors | Improves sentence-level accuracy |

Language Models | Context application | Scores likely word combinations | Ensures grammatically coherent output |

Natural Language Understanding (NLU) | Extracts meaning | Identifies intent, entities, and context | Supports actionable responses |

With technology in place, now it’s time to explore the types and variants of ASR systems.

Also Read: The Ultimate Guide to Contact Center Automation.

Major Types and Variants of Automatic Speech Recognition (ASR)

Automatic Speech Recognition (ASR) systems come in multiple types and variants, each designed to meet specific needs, environments, and user interactions. The types and variations given below determine how the system processes speech, handles multiple speakers, adapts to different languages, and integrates into real-world applications.

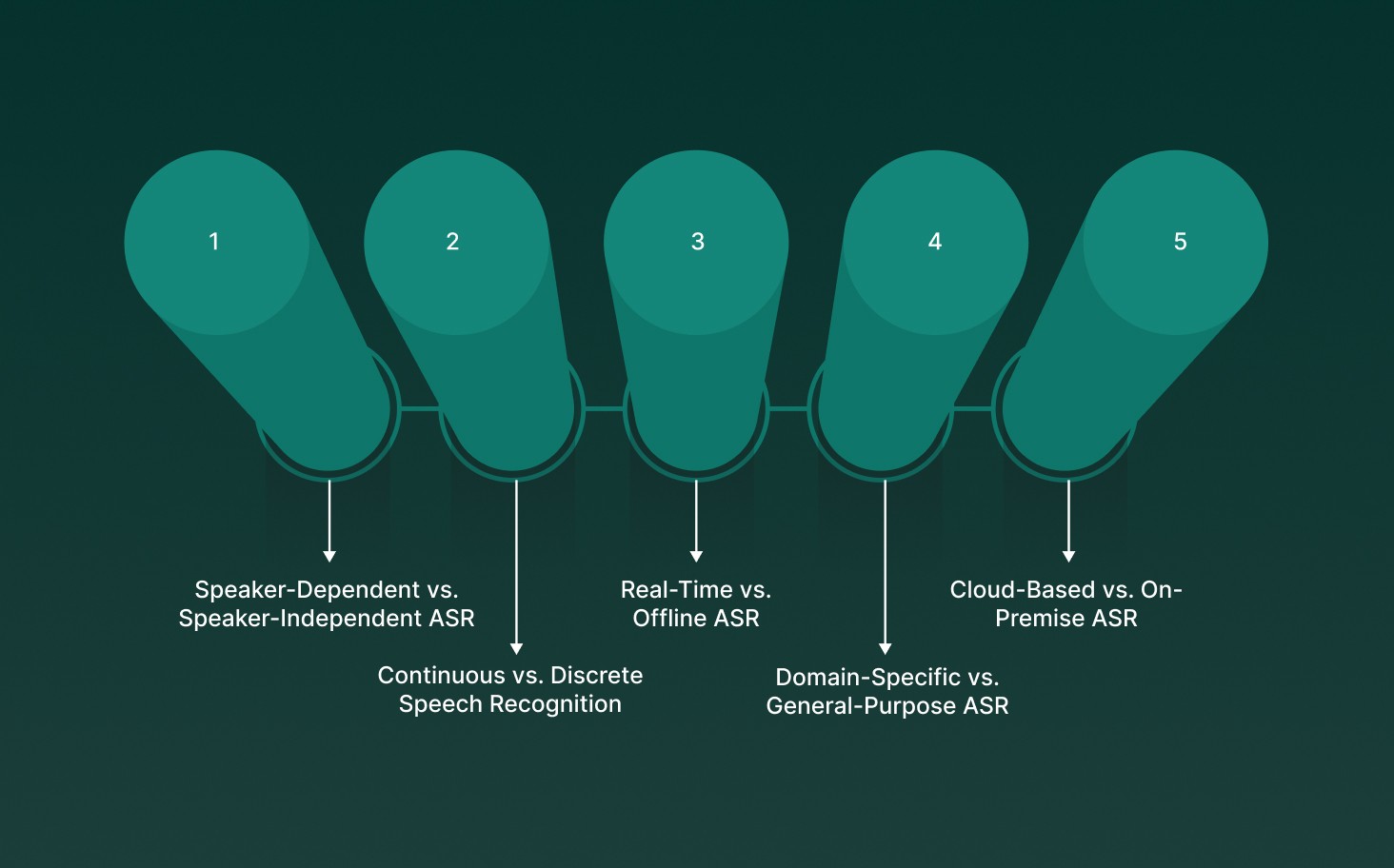

1. Speaker-Dependent vs. Speaker-Independent ASR

Speaker-Dependent: Models are trained on a specific speaker’s voice (voice Cloning), capturing nuances like accent, pitch, and speaking style. This yields near-perfect recognition but requires initial calibration. Commonly used in dictation software and personalized voice assistants.

Speaker-Independent: Designed to handle any speaker without prior training. These systems rely on massive, diverse datasets to generalize across accents, dialects, and age groups. Critical for call centers, AI-driven customer support, and multilingual environments.

Why it matters: Choosing the right approach affects both deployment complexity and recognition accuracy across diverse user populations.

2. Continuous vs. Discrete Speech Recognition

Continuous ASR: Processes natural, flowing speech in real time without requiring pauses. It uses advanced language models to predict word sequences and maintain context, making it ideal for live transcription, virtual assistants, and multi-turn conversations.

Discrete ASR: Handles one word or phrase at a time, requiring deliberate pauses between commands. Typically used in voice-controlled devices, legacy systems, or environments where error tolerance must be minimized.

Why it matters: Continuous ASR is preferred for human-like interaction, while discrete systems excel in command-based precision applications.

3. Real-Time vs. Offline ASR

Real-Time ASR: Converts spoken words into text as they are spoken, with latency measured in milliseconds. Supports instant insights like sentiment detection, intent recognition, and task automation in live customer interactions.

Offline ASR: Processes pre-recorded audio files, enabling batch transcription and analytics without network dependency. Often used in compliance monitoring, research, or situations where live processing is unnecessary.

Why it matters: Real-time ASR enables proactive decision-making, while offline ASR ensures flexibility, security, and resource efficiency.

4. Domain-Specific vs. General-Purpose ASR

Domain-Specific ASR: Optimized for specialized vocabulary, acronyms, and jargon. For example, clinical terms in healthcare or legal terminology in law firms. Accuracy improves significantly when combined with context-aware language models.

General-Purpose ASR: Trained on broad datasets covering everyday speech patterns. Suitable for consumer devices, virtual assistants, and cross-industry applications.

Why it matters: Domain adaptation reduces transcription errors and improves operational efficiency in specialized workflows.

5. Cloud-Based vs. On-Premise ASR

Cloud-Based ASR: Hosted remotely and scalable to thousands of simultaneous users, offering real-time updates, multi-language support, and AI model improvements. It suits high-volume environments like enterprise call centers.

On-Premise ASR: Deployed locally within organizational infrastructure, providing full control over data, compliance, and latency. Essential for highly regulated industries like banking, healthcare, and government services.

Why it matters: Deployment choice impacts security, compliance, cost, and system flexibility.

Also Read: How AI Chatbots Drive Customer Service: 7 Key Use Cases

While ASR has advanced, real-world deployment presents challenges.

4 Key Challenges in Automatic Speech Recognition Systems and Practical Solutions

Automatic Speech Recognition (ASR) has advanced significantly, but deploying it in real-world environments comes with challenges. Accuracy, reliability, and compliance depend on how well systems manage variation, noise, sensitive data, and evolving language.

Below are the key challenges and recommended best practices to overcome them.

1. Accent and Dialect Variability

Challenge: Speech differs across regions, cultures, and languages. AI models may misinterpret regional pronunciations, mixed-language speech, or local dialects.

Best Practices:

Train models on diverse datasets representing multiple accents and dialects.

Continuously update training data with new regional speech samples.

Use adaptive learning techniques to fine-tune models for specific user populations.

2. Background Noise and Audio Quality

Challenge: Environmental noise, overlapping speakers, or low-quality microphones can distort audio and reduce recognition accuracy.

Best Practices:

Apply noise reduction, echo cancellation, and speech enhancement during preprocessing.

Use high-quality microphones and standardized audio capture practices.

Implement real-time audio quality monitoring to detect and handle low-quality inputs.

3. Numeric and Domain-Specific Terms

Challenge: Phone numbers, account IDs, medical terms, legal jargon, or technical vocabulary are prone to misrecognition.

Best Practices:

Include domain-specific dictionaries and custom vocabulary in ASR models.

Use context-aware models to understand surrounding words for accurate transcription.

Periodically review transcripts to identify and correct recurring errors.

4. Data Privacy and Regulatory Compliance

Challenge: Voice recordings often contain sensitive personal, financial, or health-related information, creating privacy risks.

Best Practices:

Encrypt audio data both in transit and at rest.

Implement strict access controls, anonymization, and detailed audit logs.

Ensure compliance with HIPAA, PCI, SOC 2, GDPR, or other relevant regulations.

By addressing these challenges with structured best practices, ASR systems can maintain high accuracy, reliability, and security, ensuring smooth performance in complex, real-world environments.

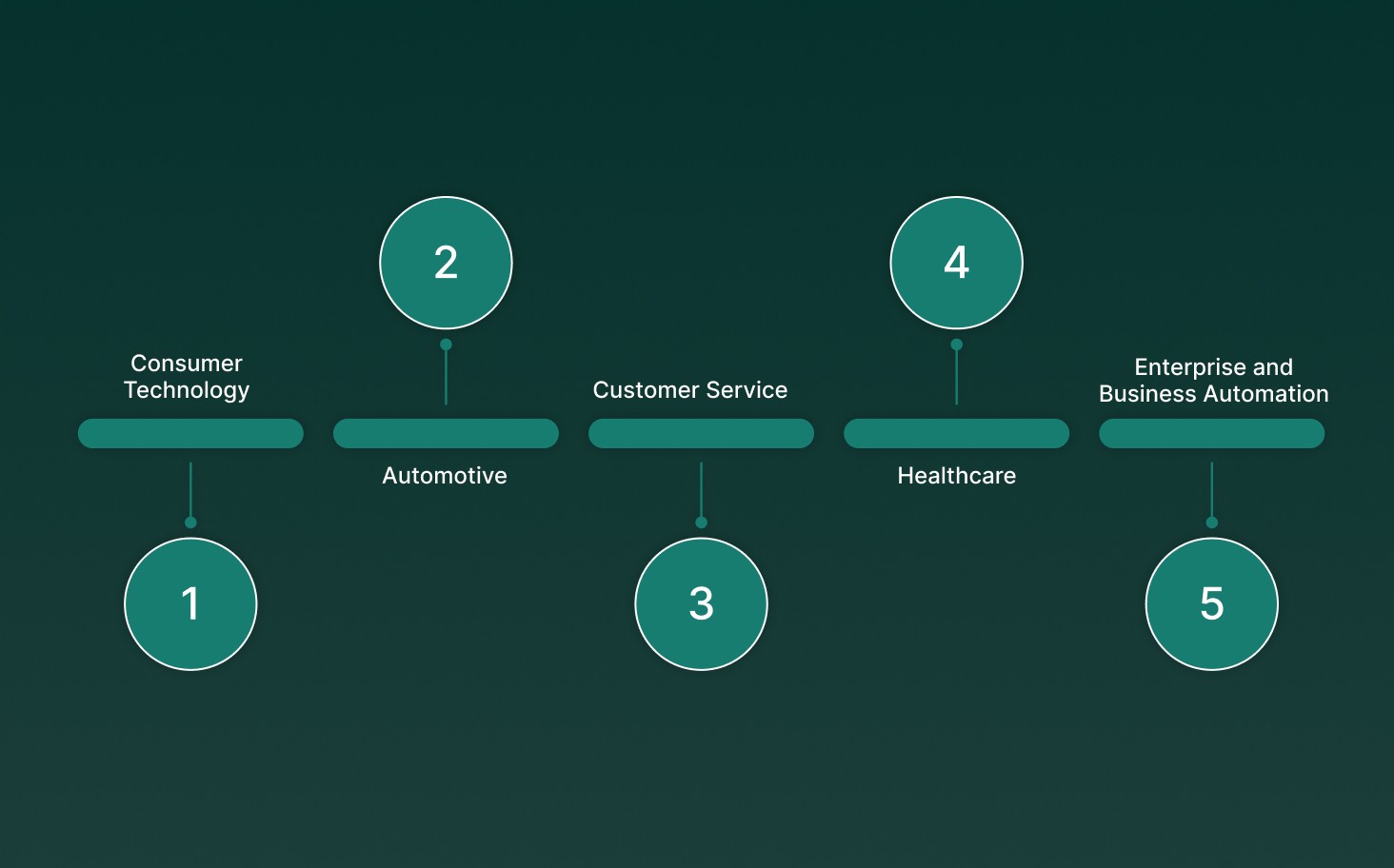

5 Real-World Applications of Automatic Speech Recognition (ASR)

Across industries, ASR is being used to improve efficiency, enhance customer experiences, and enable hands-free control. It helps businesses and individuals save time, reduce errors, and automate routine processes.

Here’s how ASR is being used across major industries today.

1. Consumer Technology

In everyday devices, ASR makes interactions faster and hands-free, improving convenience and accessibility.

Smartphones, tablets, and wearable devices respond to voice commands, allowing users to perform tasks without touching the screen.

Smart home systems use voice recognition to control lights, appliances, and security devices easily.

Accessibility tools help people with disabilities operate devices using spoken instructions.

Voice search and app navigation let users find information or complete tasks without typing.

2. Automotive

In vehicles, ASR improves safety and convenience by reducing manual controls.

Drivers can operate navigation, media, and phone calls hands-free, keeping focus on the road.

In-car voice assistants provide guidance, reminders, and support while driving.

Voice commands allow drivers to control vehicle systems without distraction.

Spoken alerts and confirmations help ensure critical instructions are heard immediately.

3. Customer Service

ASR enhances customer support by automating interactions and helping agents work efficiently.

Interactive voice response (IVR) systems can understand and respond to spoken queries.

AI-powered voice agents handle call routing, answer questions, and manage requests automatically.

Calls can be transcribed live to assist agents or supervisors in real-time.

Speech analytics helps monitor call quality, detect trends, and maintain compliance.

For example, many companies now use ASR to automatically answer common customer queries, freeing agents to handle more complex issues.

4. Healthcare

ASR is helping healthcare professionals save time and reduce the burden of documentation.

Clinical notes can be transcribed in real-time during patient consultations, freeing up time for care.

Patient intake and information collection are simplified through voice-driven forms and workflows.

Medical staff can interact with digital tools using speech, improving workflow efficiency.

Remote patient monitoring systems use voice commands for easy interaction and data capture.

5. Enterprise and Business Automation

ASR connects voice input directly with internal workflows, helping businesses automate tasks and improve efficiency.

Voice agents can handle bookings, reminders, and confirmations automatically.

Speech analytics interprets intent and sentiment to guide next actions.

Sales and support teams can use voice-driven processes to save time and reduce errors.

ASR can trigger workflows in enterprise systems, ensuring tasks are completed without manual intervention.

Overall, ASR has changed from a simple transcription tool into a powerful technology that drives efficiency, accuracy, and automation across industries.

What’s Next in Automatic Speech Recognition: 5 Future Prospects

With voice interactions growing across support, sales, and automation, ASR systems are being built to handle scale, speed, and real-world complexity. Automatic speech recognition is moving fast from basic transcription to real-time decision support for businesses.

Here are the key trends shaping the future of ASR:

Stronger multilingual and accent support: Global customer calls often involve non-native accents, and newer ASR models trained on diverse regional data are reducing recognition errors across markets such as India and Southeast Asia.

Emotion and sentiment detection in live calls: Modern ASR can detect stress or frustration in real time, helping contact centers resolve issues faster by triggering the right response early.

Context memory for multi-step conversations: Advanced systems track intent across longer interactions, allowing tasks like verification, rescheduling, and follow-ups to happen in one continuous flow.

Stronger privacy and secure deployment models: Enterprises are adopting on-device and private cloud ASR to reduce data exposure and meet compliance needs in sectors such as banking, healthcare, and telecom.

Deeper integration with AI agents: ASR now feeds real-time speech data into AI agents that can update CRMs, trigger workflows, and complete actions during the call.

Expansion into voice-first enterprise tools: Voice input is becoming common in enterprise software, field operations, and AR and VR systems, with many enterprise apps expected to support voice interfaces in the coming years.

As these trends develop, ASR will play a central role in voice automation, customer service, and AI-driven operations, acting as the foundation for faster, smarter, and more reliable voice systems.

How Does Pulse Speech to Text Improve Automatic Speech Recognition?

Automatic speech recognition is the foundation of real-time voice systems. Smallest.ai builds enterprise-ready voice AI by combining fast speech-to-text with accuracy, scale, and security.

Here is how Smallest.ai makes ASR work reliably in real business environments:

Ultra Low Latency Transcription: Speech is converted to text in under 70 milliseconds, keeping calls and AI agents responsive without delays.

High Multilingual Accuracy: Supports more than 30+ languages and accents, helping teams manage regional speech patterns with low error rates.

Reliable Capture of Critical Information: Important details such as account numbers, OTPs, and compliance phrases are recognised clearly, even in noisy calls.

Industry-Leading Real-Time Recognition Speed: Pulse STT delivers sub-70 ms time-to-first-transcript, allowing systems to respond as speech happens rather than after audio processing.

Live Speech Intelligence: The system detects intent, emotional cues, and escalation risk while the conversation is still in progress, using products such as text-to-speech (Lightning) or speech -to-speech (Hydra) conversations.

Natural Two-Way Conversations: Handles speaker turns and overlaps smoothly, allowing conversations to flow without losing context.

Full-Duplex Conversation Processing with Hydra: Hydra’s architecture preserves overlapping speech and natural conversational timing, ensuring AI voice recognition captures multi-speaker interactions without losing context.

Enterprise Scale Performance: Processes thousands of voice streams at the same time without slowing down or losing accuracy.

By pairing fast, accurate ASR with workflow-ready AI agents and real-time voice output, Smallest.ai enables voice systems that don’t just listen, but act reliably at scale.

Conclusion

Automatic speech recognition helps voice systems listen, respond, and act in real time. It turns spoken words into clear, usable data that powers AI agents, automated support, and live voice interactions. For this to work well, ASR must be fast, accurate, and able to follow context during conversations.

Smallest.ai offers ASR built for real business use. It supports multiple languages, handles high call volumes, and works with low delay, making voice automation reliable at scale.

If you want to improve voice-based automation and customer interactions, try real-time ASR with Smallest.ai. Book a demo to see how it works.

Answer to all your questions

Have more questions? Contact our sales team to get the answer you’re looking for