Learn how voice AI personalization works in customer service, where contact centers lose context, and how enterprises deploy it securely at scale.

Hamees Sayed

Updated on February 23, 2026 at 9:23 AM

A customer calls and says, “I’m following up on the return I initiated yesterday.” The system responds, “Please explain the issue.” That short exchange exposes the problem. The call is live, but the context is not. Identity, history, and intent exist somewhere in the stack, yet none of them surfaces inside the conversation. This gap is why enterprises are actively searching for voice AI personalization in customer service. The goal is not to automate calls, but to preserve continuity while conversations are unfolding.

That shift is accelerating. The voice AI agents market is forecast to grow by USD 10.96 billion between 2024 and 2029, at a CAGR of 37.2%, as enterprises replace static IVRs with systems that adapt in real time. Today, voice AI personalization in customer service determines whether a follow-up call feels informed or redundant, especially at scale.

In this guide, we break down how personalization works inside live voice interactions, where traditional contact centers lose context, and what enterprises need to deploy it reliably across operations.

Key Takeaways

Personalization Occurs During the Call: Voice AI adapts in real time using intent, context, and emotion while the conversation is live.

Legacy Systems Lose Context: IVRs and agent handoffs reset conversations and force repetition.

Enterprise Personalization Needs Orchestration: Reliable personalization depends on coordinating data, memory, and responses in real time.

Global Scale Requires Language Intelligence: Multilingual personalization relies on live language detection and regional execution.

Smallest.ai Runs Personalization at Scale: Real-time voice infrastructure keeps behavior consistent under high call volumes.

What Voice AI Personalization Means in Live Customer Conversations

Voice AI personalization in live calls refers to how the system adapts in real time based on who the caller is, what they are saying, and how the conversation is evolving second by second. This personalization happens during the call, not before or after it.

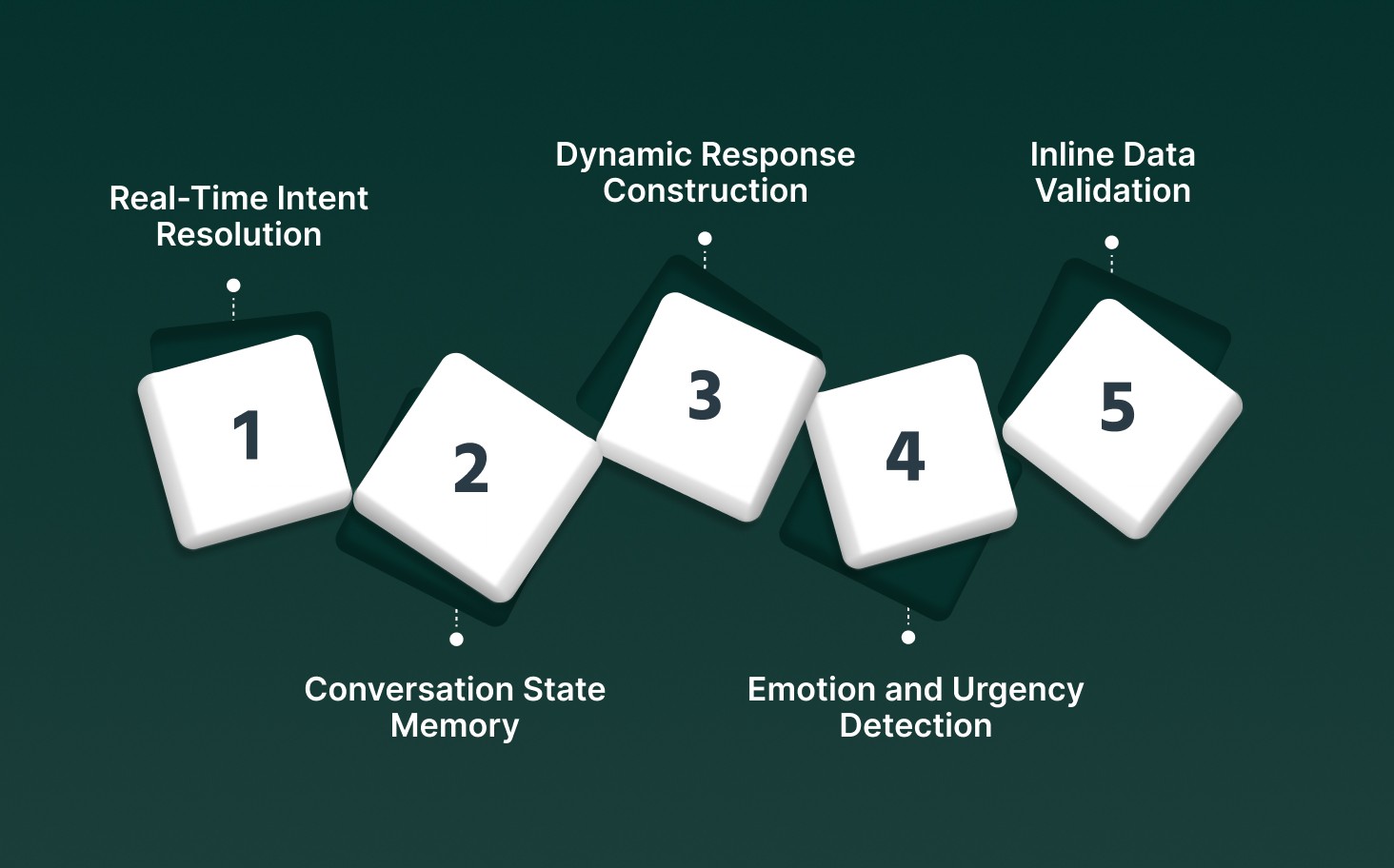

Real-Time Intent Resolution: The agent identifies caller intent continuously, adjusting responses as the user shifts topics instead of locking into a single predefined flow.

Conversation State Memory: Context such as prior questions, confirmations, and partial actions persists across turns, preventing repeated prompts or resets mid-call.

Dynamic Response Construction: Spoken responses are assembled at runtime using live data sources, not pre-recorded scripts, allowing precise answers tied to the caller’s situation.

Emotion and Urgency Detection: Changes in pacing, tone, and interruptions are detected and reflected in response timing and phrasing during the same interaction.

Inline Data Validation: Sensitive inputs like account numbers or dates are verified and re-read with controlled cadence to reduce errors without breaking conversational flow.

Live voice AI personalization operates inside the call loop itself, combining intent tracking, memory, and speech control to maintain continuity and accuracy throughout the conversation.
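To make two of these mechanisms concrete, conversation state memory and inline read-back of numeric inputs, here is a minimal Python sketch. The names `SessionState`, `confirm`, `needs_prompt`, and `readback` are hypothetical, and reading digits back in groups of three is an assumed convention, not a documented product behavior. The read-back output is plain text that a text-to-speech engine would then speak.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SessionState:
    """Hypothetical per-call memory that persists across conversational turns."""
    intent: Optional[str] = None
    confirmed: dict[str, str] = field(default_factory=dict)

    def confirm(self, slot: str, value: str) -> None:
        # Store a value once the caller has explicitly confirmed it.
        self.confirmed[slot] = value

    def needs_prompt(self, slot: str) -> bool:
        # Ask only for values that were not already confirmed in this call.
        return slot not in self.confirmed


def readback(raw: str, group: int = 3) -> str:
    """Turn a numeric input into a grouped string for spoken confirmation."""
    digits = "".join(ch for ch in raw if ch.isdigit())
    if not digits:
        raise ValueError("no digits to read back")
    chunks = [digits[i:i + group] for i in range(0, len(digits), group)]
    # Spaces separate single digits; commas between groups usually make TTS pause briefly.
    return ", ".join(" ".join(chunk) for chunk in chunks)


state = SessionState(intent="return_status")
state.confirm("order_number", "48217739")
print(state.needs_prompt("order_number"))  # False: do not ask again
print(readback("4821-7739"))  # 4 8 2, 1 7 7, 3 9
```

Because confirmed values live in session state rather than in a scripted flow, a topic change mid-call does not force the caller to repeat them.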

If you are planning to deploy voice AI within existing contact center systems and workflows, start with Customer Service Voice Bots: Enterprise Integration Guide.

Where Traditional Call Centers Break Personalization

Traditional call centers lose personalization because their systems are optimized for routing and throughput, not for maintaining conversational context. The breakdown happens inside the call flow itself, not at the customer experience layer.

Session Context Fragmentation: Context resets when calls move between IVR, queue, and agent desktops, forcing customers to restate intent and details.

Static Script Dependency: Agents and IVRs rely on fixed scripts that cannot adapt when a caller changes direction mid-conversation.

Delayed Data Availability: Customer data loads after call pickup or only on agent request, preventing early personalization during the first seconds of the call.

Manual Emotional Interpretation: Tone and urgency are assessed subjectively by agents, leading to inconsistent handling across shifts and regions.

Language Routing Constraints: Language and accent handling depend on queue assignment rather than live detection, causing misroutes and slow resolution.

Personalization fails in traditional call centers because conversational context, data access, and emotional signals remain disconnected throughout the call lifecycle.

5 Voice AI Personalization Use Cases at Enterprise Scale

At enterprise scale, voice AI personalization operates as a real-time orchestration layer that continuously evaluates conversational context, customer state, emotional signals, and enterprise data while the call is live. The system makes micro-decisions every few hundred milliseconds, determining what to say, how to say it, and whether to continue, redirect, or escalate the interaction.

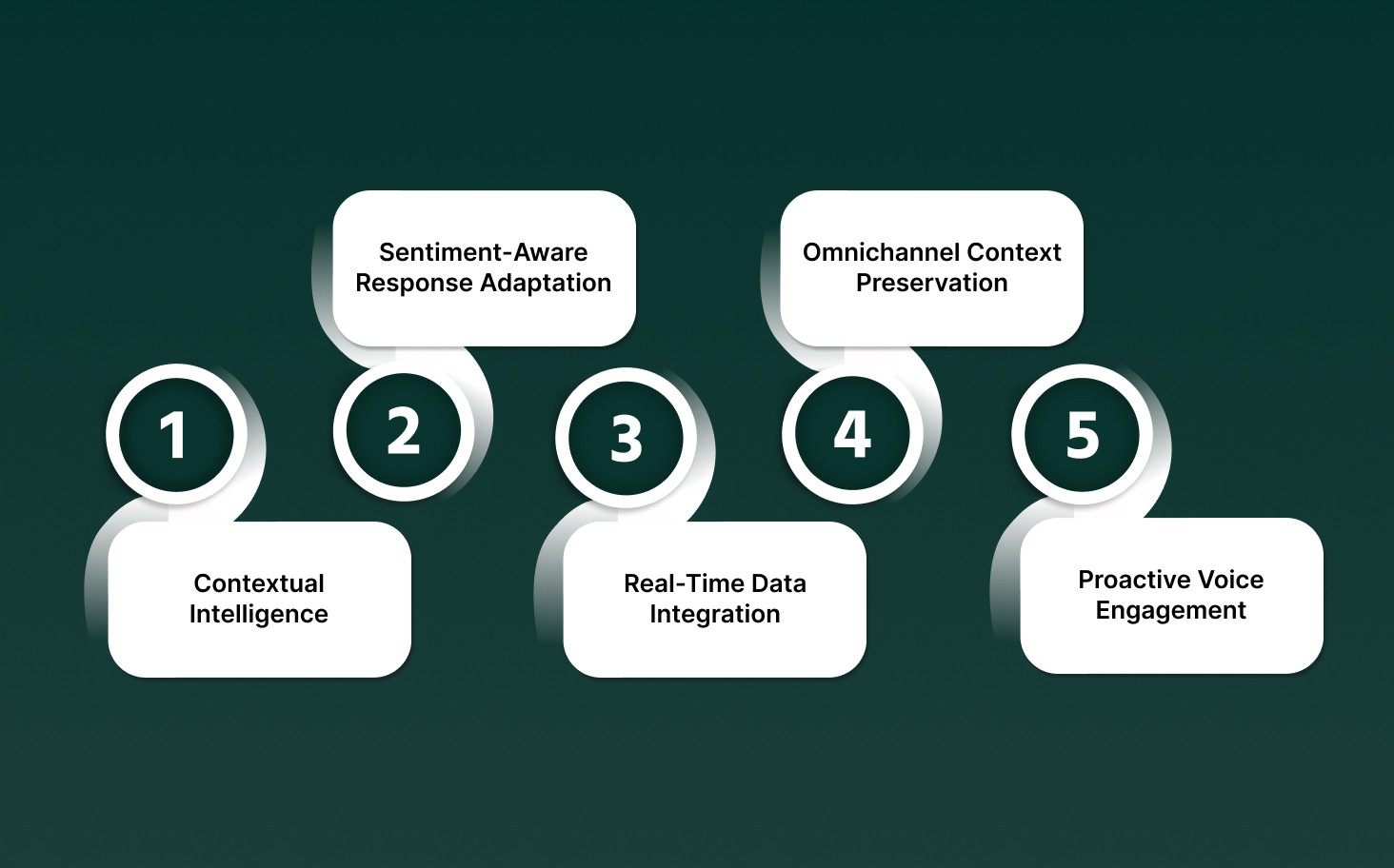

1. Contextual Intelligence and Memory-Driven Conversations

This use case addresses the most visible failure of legacy systems: loss of context across turns, calls, and channels. Enterprise voice AI replaces linear call flows with persistent conversational memory.

Key Details

Layered Memory Architecture: Short-term memory stores active session variables such as current intent, partial confirmations, error states, and conversational forks, while long-term memory retains historical interactions, prior resolutions, preferred authentication methods, and behavioral patterns.

Context Rehydration on Call Start: The system reconstructs conversational state within the first seconds of the call by correlating caller identity, device metadata, recent tickets, and unresolved actions.

Progressive Verification Logic: Identity confidence increases incrementally using voice biometrics, call origin, historical behavior, and inline confirmations, allowing the system to skip full verification once confidence exceeds the threshold set for the risk level of the requested action.
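One way to sketch progressive verification, assuming each signal yields an independent confidence score in [0, 1], is a noisy-OR combination: confidence = 1 - prod(1 - s_i), compared against a threshold tied to the risk of the requested action. The signal names, scores, and thresholds below are hypothetical, and independence is a simplifying assumption; correlated signals would make this formula overstate confidence.

```python
from __future__ import annotations

# Hypothetical thresholds: riskier actions demand more identity confidence.
RISK_THRESHOLDS = {"low": 0.80, "medium": 0.90, "high": 0.98}


def identity_confidence(signals: dict[str, float]) -> float:
    """Noisy-OR: probability that at least one independent signal confirms identity."""
    miss = 1.0
    for name, score in signals.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{name} score out of range: {score}")
        miss *= 1.0 - score  # chance that this signal fails to confirm
    return 1.0 - miss


def can_skip_full_verification(signals: dict[str, float], risk: str) -> bool:
    return identity_confidence(signals) >= RISK_THRESHOLDS[risk]


signals = {"voice_biometrics": 0.7, "call_origin": 0.5}
print(round(identity_confidence(signals), 2))         # 0.85
print(can_skip_full_verification(signals, "low"))     # True
print(can_skip_full_verification(signals, "medium"))  # False

signals["inline_confirmation"] = 0.6  # caller confirms a detail mid-call
print(round(identity_confidence(signals), 2))         # 0.94
print(can_skip_full_verification(signals, "medium"))  # True
```

Each new signal can only raise confidence, which matches the "incremental" framing: the call proceeds with lighter checks for low-risk requests and keeps verifying for high-risk ones.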

2. Emotional and Sentiment-Aware Response Adaptation

This use case lets voice AI respond to the caller's emotional trajectory rather than a static intent classification, so the system can intervene before escalation or abandonment occurs.

Key Details

Acoustic Feature Extraction: Real-time analysis of pitch variance, speech tempo, interruption frequency, silence duration, and volume fluctuation is used to infer emotional state continuously.

Emotion-State Transitions: The system tracks emotional drift across the call, detecting escalation patterns such as frustration compounding after repeated clarifications or de-escalation after resolution acknowledgment.

Adaptive Interaction Control: Response length, confirmation frequency, and escalation thresholds dynamically adjust based on emotional intensity, shifting from explanatory to directive modes when urgency rises.
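The emotion-state tracking and adaptive control described above can be sketched with an exponential moving average over per-turn acoustic scores, which smooths one-off spikes but follows sustained drift. This sketch assumes feature extraction happens upstream and yields values already normalized to [0, 1]; the class names, weights, smoothing factor, and mode thresholds are illustrative, not tuned values.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TurnFeatures:
    """Hypothetical per-turn acoustic features, each pre-normalized to [0, 1]."""
    pitch_variance: float
    tempo: float
    interruptions: float
    silence: float


# Illustrative weights; they sum to 1.0 so the blended score stays in [0, 1].
WEIGHTS = {"pitch_variance": 0.3, "tempo": 0.2, "interruptions": 0.4, "silence": 0.1}


class EmotionTracker:
    def __init__(self, alpha: float = 0.5, escalate_at: float = 0.7,
                 directive_at: float = 0.4) -> None:
        self.alpha = alpha  # weight of the newest turn in the moving average
        self.escalate_at = escalate_at
        self.directive_at = directive_at
        self.level = 0.0
        self.history: list[float] = []

    def update(self, turn: TurnFeatures) -> str:
        instant = sum(w * getattr(turn, name) for name, w in WEIGHTS.items())
        # Exponential moving average: smooths one-off spikes, follows sustained drift.
        self.level = self.alpha * instant + (1 - self.alpha) * self.level
        self.history.append(self.level)
        return self.mode()

    def rising(self) -> bool:
        # Level increased on each of the last two turns: an escalation pattern.
        h = self.history
        return len(h) >= 3 and h[-1] > h[-2] > h[-3]

    def mode(self) -> str:
        if self.level >= self.escalate_at:
            return "escalate"     # hand off to a human agent
        if self.level >= self.directive_at or self.rising():
            return "directive"    # shorter answers, fewer confirmations
        return "explanatory"


tracker = EmotionTracker()
for x in (0.1, 0.5, 0.6, 1.0):
    print(tracker.update(TurnFeatures(x, x, x, x)))
# explanatory, explanatory, directive, escalate
```

Tracking the trend as well as the level lets the agent switch to a more directive style while frustration is still building, before it crosses the escalation threshold.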

3. Real-Time Data Integration and Behavioral Segmentation

This use case connects live voice interactions directly to enterprise systems, so decisions reflect the customer's current situation rather than stale segmentation.

Key Details

Low-Latency Data Retrieval: CRM, billing, inventory, and case-management systems are queried via cached replicas or read-optimized endpoints to meet sub-second response requirements.

Behavioral State Modeling: Customers are classified in-session using recent actions such as failed payments, repeated contact attempts, incomplete transactions, or policy exceptions.

Decision-Time Personalization: Offers, resolutions, and routing decisions are generated conditionally based on behavioral state rather than fixed customer tiers or demographic segments.
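A rule-based sketch of in-session behavioral states and decision-time personalization follows: recent activity maps to a state in priority order, and that state, not a fixed tier, selects the next action. The field names, thresholds, and action labels are hypothetical, and the low-latency CRM and billing reads that would populate `RecentActivity` are assumed and not shown. A production system might replace these rules with a trained classifier.

```python
from dataclasses import dataclass


@dataclass
class RecentActivity:
    """Hypothetical in-session signals pulled from billing and case systems."""
    failed_payments_30d: int = 0
    contacts_7d: int = 0
    open_transaction: bool = False
    policy_exception: bool = False


def behavioral_state(a: RecentActivity) -> str:
    """Classify the caller from recent behavior, checked in priority order."""
    if a.policy_exception:
        return "needs_review"
    if a.failed_payments_30d >= 2:
        return "payment_distress"
    if a.contacts_7d >= 3:
        return "repeat_contact"
    if a.open_transaction:
        return "incomplete_journey"
    return "routine"


# Hypothetical mapping from behavioral state to the next best action.
ACTIONS = {
    "needs_review": "route_to_specialist",
    "payment_distress": "offer_payment_plan",
    "repeat_contact": "skip_triage_and_resume_case",
    "incomplete_journey": "resume_transaction",
    "routine": "self_service",
}


def decide(a: RecentActivity) -> str:
    return ACTIONS[behavioral_state(a)]


print(decide(RecentActivity(failed_payments_30d=2)))                # offer_payment_plan
print(decide(RecentActivity(contacts_7d=4, open_transaction=True)))  # skip_triage_and_resume_case
print(decide(RecentActivity()))                                      # self_service
```

Two customers in the same tier can therefore receive different handling on the same day, because the decision is driven by what just happened on each account.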

4. Omnichannel Context Preservation

This use case keeps personalization intact across time and channels, preventing fragmentation when customers interact through multiple touchpoints.

Key Details

Unified Interaction Ledger: Every conversational event is logged into a shared state store accessible across voice, chat, email, and messaging channels.

Channel-Aware Continuity Rules: The system preserves progress markers such as completed steps, pending actions, and verification status when switching channels mid-journey.

Preference and Constraint Persistence: Language choice, escalation tolerance, communication pace, and accessibility needs are consistently applied across all subsequent interactions.
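The unified interaction ledger can be sketched as an append-only event log in the event-sourcing style: every channel appends events, and any channel rebuilds the customer's progress by replaying them. In this sketch the in-memory list stands in for a shared, durable store, and the event kinds and class names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Event:
    customer_id: str
    channel: str  # "voice", "chat", "email", "sms", ...
    kind: str     # hypothetical kinds, handled in state_for()
    data: dict = field(default_factory=dict)


@dataclass
class JourneyState:
    completed: list[str] = field(default_factory=list)
    pending: set[str] = field(default_factory=set)
    verified: bool = False
    preferences: dict = field(default_factory=dict)


class InteractionLedger:
    """Append-only event log shared by all channels (held in memory here)."""

    def __init__(self) -> None:
        self._events: list[Event] = []

    def append(self, event: Event) -> None:
        self._events.append(event)

    def state_for(self, customer_id: str) -> JourneyState:
        # Rebuild current progress by replaying this customer's events in order.
        state = JourneyState()
        for e in self._events:
            if e.customer_id != customer_id:
                continue
            if e.kind == "step_completed":
                state.completed.append(e.data["step"])
            elif e.kind == "action_pending":
                state.pending.add(e.data["action"])
            elif e.kind == "action_done":
                state.pending.discard(e.data["action"])
            elif e.kind == "verified":
                state.verified = True
            elif e.kind == "preference":
                state.preferences.update(e.data)
        return state


ledger = InteractionLedger()
ledger.append(Event("c1", "chat", "verified"))
ledger.append(Event("c1", "chat", "step_completed", {"step": "return_initiated"}))
ledger.append(Event("c1", "chat", "action_pending", {"action": "refund"}))
ledger.append(Event("c1", "email", "preference", {"language": "es"}))

# Next day the customer calls: the voice channel resumes from the shared state.
print(ledger.state_for("c1"))
```

In the return scenario from the opening of this article, the voice agent could read `completed` and `pending` and pick up at the refund step instead of asking the caller to explain the issue.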

5. Predictive and Proactive Voice Engagement

This use case shifts enterprise voice AI from reactive handling to anticipatory engagement driven by behavioral signals and temporal patterns.

Key Details

Event-Based Triggering: Outbound engagement is initiated based on signals such as approaching payment deadlines, abnormal usage patterns, renewal windows, or clinical risk thresholds.

Propensity and Risk Scoring: Predictive models estimate churn likelihood, default probability, or upsell readiness using historical behavior and cohort-level trends.

Outcome-Oriented Outreach Logic: Call scripts, offer structures, and escalation paths are dynamically selected to maximize resolution probability while minimizing customer effort.
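Event-based triggering can be sketched as a set of rules evaluated against account signals on a schedule. The `Account` fields, window sizes, and the 3x usage-spike factor below are hypothetical; propensity and risk scores would typically come from a separately trained model and are not shown here.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Account:
    account_id: str
    payment_due: Optional[date]
    renewal_date: Optional[date]
    usage_today: float
    usage_baseline: float  # e.g. a trailing daily average (assumption)


def _within(target: Optional[date], today: date, days: int) -> bool:
    # True if target falls between today and `days` days from now, inclusive.
    return target is not None and 0 <= (target - today).days <= days


def outbound_triggers(acct: Account, today: date, due_window: int = 3,
                      renewal_window: int = 30, spike_factor: float = 3.0) -> list[str]:
    triggers = []
    if _within(acct.payment_due, today, due_window):
        triggers.append("payment_reminder")
    if _within(acct.renewal_date, today, renewal_window):
        triggers.append("renewal_outreach")
    if acct.usage_baseline > 0 and acct.usage_today >= spike_factor * acct.usage_baseline:
        triggers.append("usage_anomaly_check")
    return triggers


today = date(2026, 2, 23)
print(outbound_triggers(Account("a1", date(2026, 2, 25), date(2026, 3, 10), 40.0, 10.0), today))
# ['payment_reminder', 'renewal_outreach', 'usage_anomaly_check']
print(outbound_triggers(Account("a2", date(2026, 3, 20), None, 5.0, 10.0), today))
# []
```

Keeping triggers as explicit, testable rules makes it straightforward to audit why a given customer received an outbound call.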

At enterprise scale, voice AI personalization functions as a continuously adaptive system that synchronizes memory, emotion, live data, and prediction to control conversations in real time, producing measurable gains in resolution speed, customer trust, and operational stability.

If you are evaluating voice AI for production use, Smallest.ai allows real-time, multilingual voice agents with low-latency execution, enterprise deployment control, and consistent performance under high call volumes.

Voice AI Personalization Across Global and Multilingual Operations

Global voice AI personalization requires maintaining consistent conversational quality while adapting to language, region, and cultural context in real time. At scale, this depends on language-aware models, region-specific logic, and low-latency execution across geographies.

Language Detection at Call Start: Spoken language is identified within the first utterance using phoneme and acoustic pattern analysis, eliminating IVR-based language selection.

Accent-Aware Speech Recognition: Region-specific acoustic models handle pronunciation variance, code-switching, and localized vocabulary without degrading transcription accuracy.

Localized Response Generation: Responses incorporate region-appropriate phrasing, honorifics, and numeric formats such as dates, currency, and phone numbers.

Mid-Call Language Switching: Active language context updates instantly when callers switch languages during a conversation, preserving intent and session state.

Geography-Aware Routing Logic: Escalation paths, compliance checks, and data access rules adapt dynamically based on caller location and regulatory boundaries.
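To illustrate localized response generation, here is a deliberately small, hand-written table of formatting conventions for three locales. It is only a sketch: the `LOCALES` table is an assumption, not a complete specification, and production systems would normally draw on CLDR locale data (for example through the Babel library) rather than hard-coded patterns.

```python
from datetime import date

# Hypothetical subset of locale conventions for dates, separators, and currency.
LOCALES = {
    "en-US": {"date": "{m:02d}/{d:02d}/{y}", "group": ",", "decimal": ".", "currency": "${amount}"},
    "en-GB": {"date": "{d:02d}/{m:02d}/{y}", "group": ",", "decimal": ".", "currency": "£{amount}"},
    "de-DE": {"date": "{d:02d}.{m:02d}.{y}", "group": ".", "decimal": ",", "currency": "{amount} €"},
}


def format_date(value: date, locale: str) -> str:
    return LOCALES[locale]["date"].format(d=value.day, m=value.month, y=value.year)


def format_amount(value: float, locale: str) -> str:
    conv = LOCALES[locale]
    raw = f"{value:,.2f}"  # e.g. "1,234.50" using Python's default separators
    # Swap separators via a placeholder so "," and "." do not overwrite each other.
    localized = (raw.replace(",", "\x00")
                    .replace(".", conv["decimal"])
                    .replace("\x00", conv["group"]))
    return conv["currency"].format(amount=localized)


for loc in LOCALES:
    print(loc, format_date(date(2026, 2, 23), loc), format_amount(1234.5, loc))
# en-US 02/23/2026 $1,234.50
# en-GB 23/02/2026 £1,234.50
# de-DE 23.02.2026 1.234,50 €
```

The same underlying values (a due date, a refund amount) are rendered differently per caller, which is what keeps a response like "your refund of 1.234,50 € posts on 23.02.2026" natural for a German-speaking caller.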

Multilingual voice AI personalization succeeds when language, region, and compliance logic run inside the live conversation rather than as pre-call configuration.

For teams focused on reducing handle time and improving first-call resolution without adding headcount, explore How Voice AI Automation Can Speed Up Resolution Times.

How Personalized Voice AI Integrates Into Existing Contact Centers

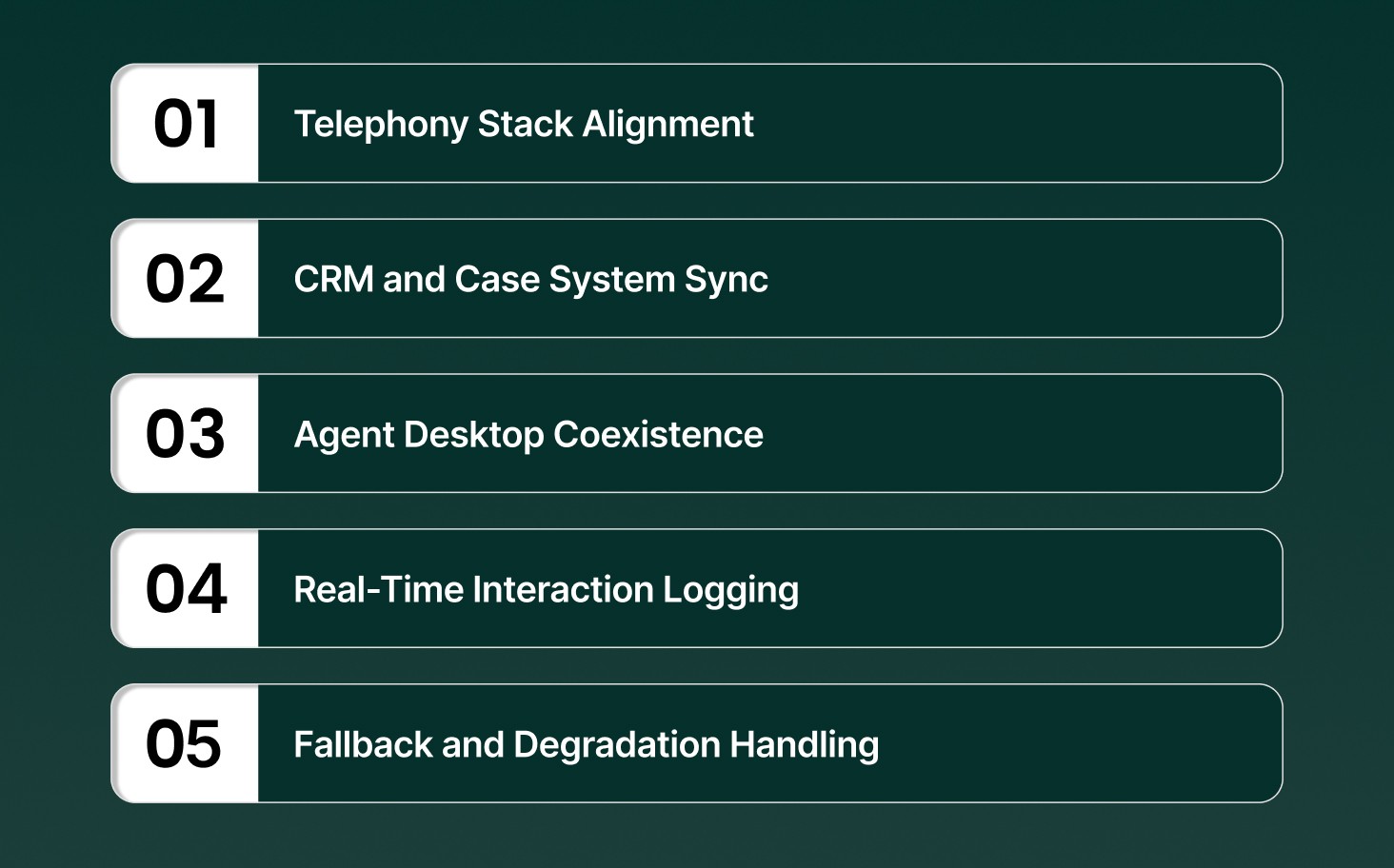

Personalized voice AI integrates into contact centers as a real-time orchestration layer, operating alongside existing telephony, agent desktops, and backend systems without replacing core infrastructure. Integration focuses on low-latency data flow, controlled handoffs, and operational observability.

Telephony Stack Alignment: Voice AI connects directly with SIP, PSTN, and cloud telephony platforms, managing call initiation, hold states, transfers, and termination without altering carrier workflows.

CRM and Case System Sync: Customer records, open tickets, and resolution history are read and updated during live calls through secured APIs to preserve context continuity.

Agent Desktop Coexistence: When escalation occurs, the agent receives a structured conversation summary, detected intent, emotional state, and completed steps before speaking to the caller.

Real-Time Interaction Logging: Transcripts, timestamps, decision paths, and system actions are streamed into analytics and compliance systems as the call unfolds.

Fallback and Degradation Handling: Predefined failover logic reroutes calls to human agents or alternative workflows when downstream systems or integrations become unavailable.
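Fallback handling is often implemented with a circuit breaker; it is shown here as one common option, not as the specific method this article describes. After a set number of consecutive failures, the breaker stops calling the unavailable dependency for a cool-down period and goes straight to the fallback, such as routing to a human agent. Once the cool-down expires, the next call is let through as a trial. The class, its parameters, and the injectable clock are hypothetical, and this single-threaded sketch ignores concurrency.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Skip a failing dependency for a cool-down period and use a fallback instead."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.cooldown_s:
            return fallback()  # open: do not touch the dependency
        try:
            result = fn()  # closed, or a trial call after the cool-down
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            return fallback()
        self.failures = 0
        self.opened_at = None
        return result


def fetch_crm_record() -> str:
    raise ConnectionError("CRM unavailable")  # simulated outage


def route_to_human() -> str:
    return "transfer_to_agent_queue"


breaker = CircuitBreaker(max_failures=2, cooldown_s=10.0)
for _ in range(3):
    print(breaker.call(fetch_crm_record, route_to_human))  # transfer_to_agent_queue
```

Failing fast while the breaker is open keeps callers from waiting on timeouts from a dependency that is already known to be down.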

Effective integration places voice AI inside existing contact center workflows, coordinating systems, agents, and data in real time without introducing operational fragility.

Implementing Voice AI Personalization Without Disrupting Operations

Deploying personalized voice AI in live contact centers requires a controlled rollout strategy that isolates risk, preserves service continuity, and validates impact before scale. The focus is on parallel operation, measurable checkpoints, and reversible changes.

Use Case Isolation: Initial deployment targets high-volume, low-variance call types such as status checks or appointment scheduling to limit operational risk.

Shadow Mode Validation: Voice AI runs in parallel with human agents, observing and scoring interactions without being exposed to customers, so its accuracy and decision quality can be benchmarked against live handling.

Progressive Traffic Ramping: Call routing expands incrementally based on predefined performance thresholds for latency, resolution accuracy, escalation rate, and system stability.

Agent Enablement Workflow: Support teams are trained to interpret AI summaries, emotional flags, and escalation triggers before taking live calls.

Operational Telemetry Monitoring: Real-time dashboards track call outcomes, system health, fallback frequency, and integration latency to detect issues before customer impact.
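Progressive traffic ramping can be sketched as a stage ladder gated on the metrics listed above, plus a deterministic hash so a given call ID always lands in the same routing bucket. The stage percentages and gate values are hypothetical placeholders; real gates would come from shadow-mode baselines. As a design choice in this sketch, an error-rate breach drops traffic to zero, returning every call to human agents, while other misses step back one stage.

```python
import hashlib
from dataclasses import dataclass

STAGES = [0, 1, 5, 25, 50, 100]  # % of eligible calls sent to voice AI (illustrative)

# Hypothetical gates; real values would come from shadow-mode baselines.
MAX_P95_LATENCY_MS = 800
MIN_RESOLUTION_ACCURACY = 0.90
MAX_ESCALATION_RATE = 0.20
MAX_ERROR_RATE = 0.01


@dataclass
class Metrics:
    p95_latency_ms: float
    resolution_accuracy: float  # 0..1
    escalation_rate: float      # 0..1
    error_rate: float           # 0..1, integration or system failures


def next_stage(current: int, m: Metrics) -> int:
    i = STAGES.index(current)
    if m.error_rate > MAX_ERROR_RATE:
        return 0  # instability: send every call back to human agents
    healthy = (m.p95_latency_ms <= MAX_P95_LATENCY_MS
               and m.resolution_accuracy >= MIN_RESOLUTION_ACCURACY
               and m.escalation_rate <= MAX_ESCALATION_RATE)
    return STAGES[min(i + 1, len(STAGES) - 1)] if healthy else STAGES[max(i - 1, 0)]


def routed_to_ai(call_id: str, percent: int) -> bool:
    # Stable hash: the same ID always maps to the same bucket in 0..99.
    bucket = int(hashlib.sha256(call_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


good = Metrics(450, 0.93, 0.12, 0.002)
print(next_stage(5, good))                                  # 25
print(next_stage(25, Metrics(1200, 0.93, 0.12, 0.002)))     # 5
print(next_stage(50, Metrics(450, 0.93, 0.12, 0.05)))       # 0
```

Because each step is a single table lookup, rolling back is as cheap as rolling forward, which is what makes incremental exposure reversible in practice.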

Non-disruptive deployment depends on incremental exposure, continuous monitoring, and the ability to reverse decisions instantly while maintaining service stability.

How Smallest.ai Delivers Voice AI Personalization at Scale

Smallest.ai delivers voice AI personalization by controlling the entire real-time voice stack, from speech understanding to response generation and execution. Personalization is enforced at the infrastructure level, not added as a surface feature, allowing consistent behavior under high concurrency and live call conditions.

End-to-End Real-Time Voice Stack: Lightning Voice AI handles transcription, intent detection, response generation, and speech synthesis within a single low-latency pipeline, preventing context loss between components.

Electron Intelligence Models: In-house Electron SLMs trained on millions of real conversations retain conversational state, handle domain-specific language, and adapt responses during multi-turn calls.

Live Call Orchestration Engine: Voice agents manage interruptions, confirmations, number-heavy inputs, and topic shifts inline, preserving conversational flow without reverting to scripts.

Enterprise-Grade Deployment Control: Models run in cloud, on-prem, or hybrid environments, allowing enterprises to own inference, meet data residency requirements, and maintain predictable latency.

Operational Visibility and Evaluation: Call logs, transcripts, fallback events, and agent handoffs are captured in real time and exported to enterprise analytics systems for continuous performance review.

Smallest.ai delivers personalization by embedding intelligence directly into live voice execution, maintaining context, accuracy, and control even when thousands of calls run in parallel.

Conclusion

Personalization in voice support has become an execution problem, not a conceptual one. The difference between fragmented conversations and consistent customer experiences now comes down to how well voice systems maintain context, adapt in real time, and operate under production load. Enterprises that treat voice AI as core infrastructure rather than surface automation gain predictability in customer interactions, operational stability during peak volumes, and clearer control over how conversations unfold at scale.

Smallest.ai is built for that execution layer. Its real-time voice stack, multilingual models, and enterprise deployment controls allow teams to run personalized voice conversations reliably across regions, use cases, and call volumes.

If you are evaluating how to move from scripted automation to live, context-aware voice interactions, talk to our team to see how Smallest.ai can support your production rollout.
