Discover how pre-trained multilingual voice agents work, the models behind them, and their key features and benefits. Explore the possibilities for your AI voice assistant today!

Akshat Mandloi

Updated on December 26, 2025 at 11:28 AM

Talking to technology feels natural today, thanks to voice agents that turn speech into text, understand meaning, and reply with a lifelike voice.

The real challenge is language. People expect to speak in their own tongue, with their own accent, and still be understood. OpenAI’s Whisper was trained on about 680,000 hours of speech and supports 99 languages, which makes it robust to accents and noisy audio.

Meta’s XLS-R goes even broader. It learned from recordings in over 100 languages, which helps it perform well even where little training data exists. This kind of reach opens the door to multilingual voice agents.

This guide explores what makes a voice agent truly multilingual, the pre-trained models behind such agents, and the key features that help them handle real conversations.

Key Takeaways:

Multilingual voice agents turn speech into text, understand meaning, and respond naturally, supporting multiple languages and accents.

Pre-trained models like Whisper, XLS-R, and Meta’s MMS give developers a head start on speech recognition (MMS also covers speech synthesis), and end-to-end frameworks combine ASR, dialogue, and TTS for faster deployment.

Core features include wide language coverage, accent-aware speech recognition, natural voice synthesis, code-switching, context retention, and domain-specific fine-tuning.

Benefits and limits: pre-trained models speed up deployment and reduce costs, but low-resource language support, bias, and advanced customization may require extra work.

What Makes a Voice Agent “Multilingual”?

A voice agent is multilingual when it can listen, understand, and speak in more than one language. It typically combines speech recognition to hear, language identification to determine which language is being spoken, translation when it must bridge languages, and voice synthesis to reply naturally.

English-only models handle just one language, while multilingual ones can communicate in dozens of languages and accents.
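The four stages described above can be wired together as a simple turn handler. The sketch below is a minimal illustration, not any vendor's architecture: every stage is a hypothetical plain Python function, the dialogue logic is assumed to work in a single pivot language (English here), and language identification runs on the transcript for simplicity, whereas some ASR models, such as Whisper, detect the language from the audio itself.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class MultilingualPipeline:
    """Hypothetical voice-agent turn handler; each field is a pluggable stage."""
    transcribe: Callable[[bytes], str]          # ASR: audio -> text
    identify_language: Callable[[str], str]     # language ID: text -> language code
    translate: Callable[[str, str, str], str]   # (text, source, target) -> text
    synthesize: Callable[[str, str], bytes]     # TTS: (text, lang) -> audio
    respond: Callable[[str], str]               # dialogue logic in the pivot language
    pivot_lang: str = "en"

    def handle_turn(self, audio: bytes) -> tuple[str, bytes]:
        text = self.transcribe(audio)
        user_lang = self.identify_language(text)
        needs_translation = user_lang != self.pivot_lang
        if needs_translation:  # only translate when the user is not speaking the pivot language
            text = self.translate(text, user_lang, self.pivot_lang)
        reply = self.respond(text)
        if needs_translation:
            reply = self.translate(reply, self.pivot_lang, user_lang)
        # Answer in the language the user spoke.
        return user_lang, self.synthesize(reply, user_lang)


# Toy stand-ins so the sketch runs end to end (all hypothetical).
pipeline = MultilingualPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    identify_language=lambda text: "es" if "hola" in text.lower() else "en",
    translate=lambda text, src, tgt: f"[{src}->{tgt}] {text}",
    synthesize=lambda text, lang: f"<{lang}> {text}".encode("utf-8"),
    respond=lambda text: f"You said: {text}",
)

lang, audio_out = pipeline.handle_turn(b"Hola, necesito ayuda")
```

Making translation conditional means callers who already speak the pivot language skip two translation hops; a design built on a dialogue model that works natively in many languages could drop the translate stage altogether.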

With these abilities in mind, the next question is whether developers can start with models that already have this multilingual knowledge built in.

Are There Pre-Trained Models for English and Multilingual Voice Agents?

Yes. Developers can start with models that already know multiple languages, which speeds up building an AI voice assistant. These models fall into three main types, each handling a key part of a conversational system.

They come with pre-learned patterns for pronunciation, intonation, and sentence structure, which make adapting them to specific industries or customer scenarios easier.

Here’s a clear breakdown of how these models are organized:

| Type | Purpose | Language Support |
|---|---|---|
| ASR (Speech Recognition) | Converts spoken words into text | English-only or 90+ languages |
| TTS (Voice Synthesis) | Produces natural speech from text | Single or multiple languages |
| End-to-End Frameworks | Integrate recognition, dialogue, and speech | Often multilingual with extensions |
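As a concrete instance of the ASR row above, OpenAI's open-source Whisper release ships English-only checkpoints (`tiny.en`, `base.en`, `small.en`, `medium.en`) alongside multilingual ones, and its README notes that the `.en` variants tend to perform better for English. The helper below is a hypothetical routing rule, not part of Whisper: it only builds the checkpoint name you might pass to `whisper.load_model`, and it assumes the caller's language is known before the conversation starts.

```python
from __future__ import annotations

# Whisper sizes that have an English-only ".en" checkpoint; larger models are multilingual only.
SIZES_WITH_ENGLISH_ONLY_VARIANT = {"tiny", "base", "small", "medium"}


def choose_whisper_checkpoint(size: str, expected_lang: str | None) -> str:
    """Hypothetical rule: prefer the English-only variant when the caller is known to speak English."""
    if expected_lang == "en" and size in SIZES_WITH_ENGLISH_ONLY_VARIANT:
        return f"{size}.en"
    # Unknown or non-English speakers need a multilingual checkpoint.
    return size


print(choose_whisper_checkpoint("base", "en"))  # base.en
```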

Using pre-trained models also reduces the effort of collecting and labeling audio for every language. They maintain consistent voice quality and responsiveness, making conversations smooth and reliable across languages.


With a clear understanding of the types of pre-trained models, it helps to look at what specific features make them effective for building multilingual voice agents.

Core Features of Pre-Trained Multilingual Voice Agent Models

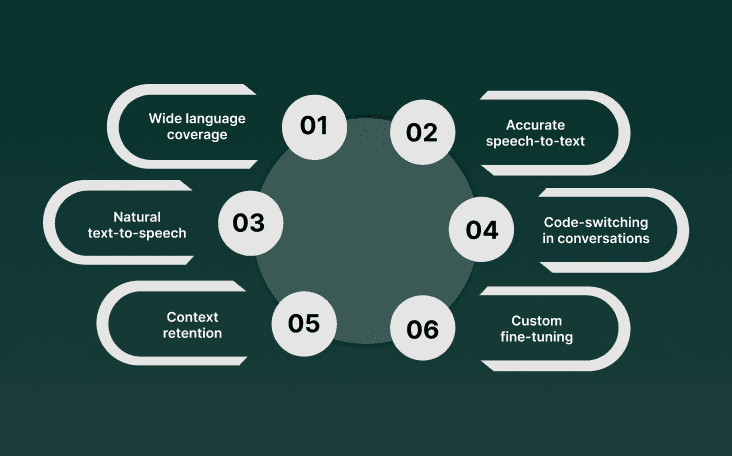

Alt text: Core Features of Pre-Trained Multilingual Voice Agent Models

A powerful multilingual voice agent goes beyond just knowing languages. It listens carefully, understands the meaning behind words, replies in a voice that feels alive, and remembers the flow of the conversation.

It requires a specific blend of skills to effectively handle real-time conversations with real people. Here’s what makes it work:

Wide language coverage: A multilingual voice agent can interact across regions without retraining. Research models like Meta’s MMS support over 1,000 languages, while enterprise-focused solutions such as Smallest.ai provide 16 languages optimized for real-world business use.

Accurate speech-to-text across accents: Voice agents can recognize different pronunciations and intonation patterns, and they transcribe speech accurately even with background noise or regional variations.

Natural text-to-speech: TTS models generate voices that sound real. They can match local accents and speech patterns so users feel like they are talking to a person, not a machine.

Code-switching in conversations: Users often switch languages mid-sentence, and a capable agent keeps up. For example, a user can mix English and Spanish in one query, and the agent still responds correctly without losing context.

Context retention and understanding intent: The agent remembers what was said earlier. It tracks the conversation and responds appropriately over multiple turns.

Custom fine-tuning for industry-specific words: Businesses can teach the agent product names, jargon, or slang. This ensures interactions feel relevant and professional.
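At its simplest, context retention is a data structure the agent consults on every turn. The sketch below is hypothetical and not taken from any platform: it keeps a bounded window of recent turns (each tagged with its language, so code-switching stays visible) plus a dictionary of remembered facts that outlive the window, and it renders both into a text block that a dialogue model could receive as context.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConversationState:
    """Hypothetical dialogue memory: recent turns plus long-lived facts."""
    max_turns: int = 6
    facts: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # A deque with maxlen drops the oldest turn automatically once full.
        self.turns = deque(maxlen=self.max_turns)

    def add_turn(self, speaker: str, lang: str, text: str) -> None:
        self.turns.append((speaker, lang, text))

    def remember(self, key: str, value: str) -> None:
        # Facts persist even after the turn that mentioned them leaves the window.
        self.facts[key] = value

    def context_for_model(self) -> str:
        known = "; ".join(f"{k}={v}" for k, v in sorted(self.facts.items()))
        history = "\n".join(f"{who} ({lang}): {text}" for who, lang, text in self.turns)
        return f"Known facts: {known}\n{history}"


state = ConversationState(max_turns=2)
state.add_turn("user", "es", "Quiero cambiar mi pedido 1234")
state.remember("order_id", "1234")
state.add_turn("agent", "es", "Claro, ¿qué desea cambiar?")
state.add_turn("user", "en", "The shipping address, please")  # user code-switches to English
print(state.context_for_model())
```

The first user turn has scrolled out of the two-turn window, yet the order number survives as a fact, which is the behavior the feature description calls for.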

With these capabilities in mind, the next step is to look at the pre-trained models and platforms that make them possible.

Popular Pre-Trained Models and Platforms

When it comes to building multilingual voice agents, several pre-trained models and platforms provide a ready starting point. Each brings unique strengths, from wide language coverage to flexible customization.

Here’s a closer look at some of the popular options.

1. Whisper (OpenAI)

Whisper is built to understand multiple languages. It was trained on 680,000 hours of speech and can handle different accents and noisy backgrounds.

It can even translate spoken words into English. It’s open-source, so anyone can use it to add multilingual speech recognition to their apps.

2. Google Speech-to-Text and Dialogflow CX

Google’s tools cover many languages. Speech-to-Text can transcribe audio in real time using streaming recognition, and Dialogflow CX adds the ability to build full conversational agents that talk to users in different languages. Together, they make it simple to create multilingual voice experiences.

3. Amazon Lex and Polly

Amazon Lex listens to and understands voice input. Polly speaks back in natural voices across many languages. Using them together lets businesses create apps that both understand and talk to users in different languages. Because both run within AWS, they are easy to scale.

4. Microsoft Azure Cognitive Services

Azure covers speech recognition, translation, and text-to-speech. It supports a wide range of languages, which suits global applications. You can transcribe, translate, and speak in multiple languages within one system.

5. Meta’s MMS (Massively Multilingual Speech)

Meta's MMS models are designed to identify over 4,000 spoken languages and support speech-to-text and text-to-speech for more than 1,100 languages. This extensive language coverage makes it suitable for applications requiring broad linguistic support.

6. Rasa with Plugins

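Rasa is an open-source, highly customizable conversational AI framework. You can extend it with plugins and custom components to support multiple languages, and pair it with separate speech recognition and synthesis services for voice. This makes it easy to build voice agents for different regions or industries without starting from scratch.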


Understanding these platforms gives a clear overview of what’s possible. Next, let’s look at the benefits they bring and the limitations to keep in mind.

Benefits and Limitations of Using Pre-Trained Models

Pre-trained voice models make life easier for businesses. You can get an agent up and running fast, support many languages out of the box, and save money because you don’t have to train large models yourself.

However, there are limits. Training data can include biases, which may affect the recognition of certain accents or dialects. Coverage for low-resource languages like Yoruba or Nepali is weaker. Customizing the agent for industry-specific terms may require additional fine-tuning.
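The accent and dialect bias mentioned above can be measured before launch by computing word error rate (WER) separately for each speaker group on a labeled test set. WER is the word-level edit distance between the reference transcript and the model's output, divided by the number of reference words. The sketch below assumes you already have (group, reference, hypothesis) triples; the group labels and sentences are made-up placeholders, and text normalization is limited to lowercasing and whitespace splitting.

```python
from __future__ import annotations

from collections import defaultdict


def word_edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Levenshtein distance over words (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1]


def wer_by_group(samples: list[tuple[str, str, str]]) -> dict[str, float]:
    """Corpus-level WER per group: total word errors / total reference words."""
    errors: dict[str, int] = defaultdict(int)
    words: dict[str, int] = defaultdict(int)
    for group, reference, hypothesis in samples:
        ref, hyp = reference.lower().split(), hypothesis.lower().split()
        errors[group] += word_edit_distance(ref, hyp)
        words[group] += len(ref)
    return {group: errors[group] / max(words[group], 1) for group in words}


samples = [
    ("accent_a", "please check my account balance", "please check my account balance"),
    ("accent_b", "please check my account balance", "please check my count balance"),
    ("accent_b", "transfer fifty dollars", "transfer fifteen dollars"),
]
print(wer_by_group(samples))  # {'accent_a': 0.0, 'accent_b': 0.25}
```

A persistent gap between groups on a realistic test set is the signal to collect more data or fine-tune for the underperforming accent.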

Here’s a clear look at the benefits and limitations of pre-trained voice models:

| Aspect | Benefits | Limitations |
|---|---|---|
| Deployment | Fast setup without training from scratch | Requires some additional configuration for specific needs |
| Language Support | Handles dozens of languages and accents | Coverage may be weaker for low-resource languages |
| Cost | Reduces the high cost of training large models | Customization can increase costs |
| Accuracy | Proven transcription and speech generation | Biases in training data can affect certain accents or dialects |
| Customization | Supports basic adjustments | Limited domain-specific tuning without extra work |


Pre-trained models offer speed, accuracy, and broad language support, but they have limits in customization and low-resource coverage. Smallest.ai provides a platform to put these models to work effectively across multiple languages.

How Smallest.ai Supports Multilingual Voice Deployments

Scaling multilingual voice agents is not simple. Accuracy across languages, the ability to manage thousands of calls daily, and strict compliance requirements often stretch standard solutions.

Smallest.ai takes on these challenges with enterprise-ready AI voice agents that are built for real-world use. This approach makes it possible to:

Support conversations in 16 global languages, from English and Hindi to Spanish, French, Arabic, and German.

Manage thousands of calls in parallel while staying reliable, even in complex workflows like onboarding or debt collection.

Handle sensitive details, such as credit cards or phone numbers, with flawless accuracy.

Deploy on either cloud or on-premises infrastructure, keeping enterprises in control of their own inference and data.

Meet strict compliance standards, including SOC 2 Type II, HIPAA, and PCI.

Integrate smoothly through Python and Node.js SDKs or REST APIs.

The result is a multilingual voice platform that helps enterprises deliver consistent, natural conversations at scale, without compromising security or performance.

Conclusion

Multilingual voice agents are changing how people connect with technology. Success comes from choosing tools that fit real conversations, not just running models. The real value lies in meeting users where they are, understanding accents, languages, and code-switching naturally.

Looking ahead, these agents will keep evolving, helping organizations reach more people and create smoother, more human interactions across languages.

Enterprise-ready platforms like Smallest.ai bring these capabilities to life, offering multilingual AI voice agents that scale reliably and integrate seamlessly into business workflows.

Explore Smallest.ai today and see how enterprise AI voice agents handle multilingual interactions seamlessly.

FAQs

1. How do multilingual voice agents handle privacy and data security?

Enterprise platforms like Smallest.ai store voice data on secure cloud or on-premises infrastructure. They comply with SOC 2 Type II, HIPAA, and PCI standards to protect sensitive information during conversations.
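One common safeguard, independent of any particular platform, is to redact payment card numbers from transcripts before they are stored or logged. The sketch below is a hypothetical illustration, not Smallest.ai's implementation: it looks for runs of 13 to 19 digits (optionally separated by spaces or hyphens), keeps only those that pass the Luhn checksum used by payment card numbers, and masks all but the last four digits.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_card_numbers(text: str) -> str:
    def mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group(0))
        if not luhn_valid(digits):
            return match.group(0)  # leave order IDs and other digit runs untouched
        return "*" * (len(digits) - 4) + digits[-4:]

    return CANDIDATE.sub(mask, text)


print(redact_card_numbers("My card is 4111 1111 1111 1111, thanks"))
# My card is ************1111, thanks
```

The Luhn check reduces false positives on long order or account numbers, but it is not proof that a number is a real card, so production systems typically combine it with other controls.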

2. Can multilingual voice agents work offline?

Yes, as long as every component runs locally. Open models like OpenAI Whisper (speech recognition) and VITS (speech synthesis) can be deployed on local servers for offline use. Offline deployment keeps the agent working in locations without internet access while keeping data fully on-premises.

3. How do voice agents adapt to different industries?

Through custom integrations and plugins, agents can follow industry-specific workflows, whether it’s banking, healthcare, or e-commerce, without needing to retrain core models.
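A lightweight way to adapt speech recognition output to an industry without retraining the core model is to post-correct transcripts against a domain vocabulary. This is a different and much cruder technique than fine-tuning: the sketch below uses Python's standard `difflib.get_close_matches` to snap near-miss words to known terms, and the vocabulary, the cutoff value, and the sample sentence are assumptions for illustration.

```python
from __future__ import annotations

import difflib
import re

# Hypothetical banking vocabulary an operator might maintain.
DOMAIN_TERMS = ["overdraft", "escrow", "KYC", "autopay", "refinance"]


def correct_transcript(text: str, vocabulary: list[str], cutoff: float = 0.8) -> str:
    """Replace words that closely resemble a domain term with that term's canonical spelling."""
    canonical = {term.lower(): term for term in vocabulary}

    def fix(match: re.Match) -> str:
        word = match.group(0)
        close = difflib.get_close_matches(word.lower(), list(canonical), n=1, cutoff=cutoff)
        return canonical[close[0]] if close else word  # ordinary words pass through

    return re.sub(r"[A-Za-z]+", fix, text)


print(correct_transcript("What is my overdraf limit and is the escro account kyc verified", DOMAIN_TERMS))
# What is my overdraft limit and is the escrow account KYC verified
```

The cutoff is the main knob: set it too low and ordinary words get rewritten into jargon, too high and real misrecognitions slip through, so it should be tuned on real call transcripts.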

4. What role does voice agent analytics play in business decisions?

Analytics track usage patterns, call duration, user satisfaction, and topic trends. This data helps businesses improve customer experience and optimize conversational flows.
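Once each call is logged as a structured record, the metrics listed above reduce to simple aggregation. The record fields below (language, duration, an optional 1 to 5 satisfaction rating, and a topic label) are a hypothetical schema chosen for illustration; real platforms expose their own.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class CallRecord:
    """Hypothetical per-call log entry."""
    language: str
    duration_s: float
    csat: Optional[int]  # 1-5 post-call rating; None if the caller skipped the survey
    topic: str


def summarize(calls: list[CallRecord]) -> dict:
    rated = [c.csat for c in calls if c.csat is not None]
    return {
        "calls": len(calls),
        "avg_duration_s": mean(c.duration_s for c in calls),
        "avg_csat": mean(rated) if rated else None,  # unrated calls are excluded
        "calls_by_language": dict(Counter(c.language for c in calls)),
        "top_topics": Counter(c.topic for c in calls).most_common(2),
    }


calls = [
    CallRecord("en", 120.0, 5, "billing"),
    CallRecord("hi", 300.0, 3, "billing"),
    CallRecord("es", 180.0, None, "shipping"),
    CallRecord("en", 60.0, 4, "password reset"),
]
report = summarize(calls)
print(report["avg_duration_s"], report["calls_by_language"])
```

Breaking these numbers down per language is what reveals whether, say, Hindi callers have longer or less satisfying calls than English callers.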

5. Are multilingual voice agents suitable for real-time live support?

Yes, many agents can handle real-time voice interactions at scale, managing thousands of concurrent conversations while maintaining accuracy and responsiveness.
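Serving many calls at once is largely a concurrency problem: each call spends much of its time waiting on audio and model responses, so an asynchronous server can interleave many of them while capping how many run expensive inference at the same moment. The sketch below uses Python's standard `asyncio.Semaphore` for that cap; the `CallRouter` class, the slot count, and the `asyncio.sleep` standing in for ASR, dialogue, and TTS work are all hypothetical.

```python
from __future__ import annotations

import asyncio


class CallRouter:
    """Hypothetical dispatcher that caps concurrent inference on one worker."""

    def __init__(self, max_concurrent: int) -> None:
        self._slots = asyncio.Semaphore(max_concurrent)
        self.active = 0
        self.peak = 0  # highest number of calls observed in flight at once

    async def handle_call(self, call_id: int) -> str:
        async with self._slots:  # extra calls queue here until a slot frees up
            self.active += 1
            self.peak = max(self.peak, self.active)
            try:
                await asyncio.sleep(0.01)  # stand-in for ASR -> dialogue -> TTS latency
                return f"call-{call_id}: done"
            finally:
                self.active -= 1


async def run_calls(n_calls: int, max_concurrent: int) -> tuple[list[str], int]:
    router = CallRouter(max_concurrent)
    results = await asyncio.gather(*(router.handle_call(i) for i in range(n_calls)))
    return list(results), router.peak


if __name__ == "__main__":
    results, peak = asyncio.run(run_calls(n_calls=20, max_concurrent=5))
    print(len(results), peak)
```

Scaling to thousands of concurrent conversations would add more workers behind a load balancer, with a per-worker cap like this one protecting each worker's inference capacity.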