Explore the top speech technology trends for 2026, including real-time voice solutions and AI-powered customer support, transforming industries worldwide.

Akshat Mandloi

Updated on February 9, 2026 at 12:58 PM

Speech technology is rapidly becoming a cornerstone of customer interaction, but many businesses still struggle to provide seamless, natural experiences. Traditional systems often fail to deliver the level of engagement and personalization that today’s consumers expect, leading to frustration and a disconnect between brands and their audiences.

This gap not only impacts customer satisfaction but also results in missed opportunities and increased operational costs. As businesses strive to keep up with the growing demand for high-quality, real-time voice interactions, the need for more sophisticated and human-like solutions is becoming critical.

In this blog, we’ll explore how advanced speech technology is making a difference. We’ll look at how text-to-speech and speech recognition help businesses deliver personalized and efficient voice solutions. These technologies enable scalable interactions that enhance customer satisfaction and improve operational efficiency.

TL;DR

Speech technology bridges the gap between human communication and AI, combining speech recognition and text-to-speech for real-time, personalized interactions.

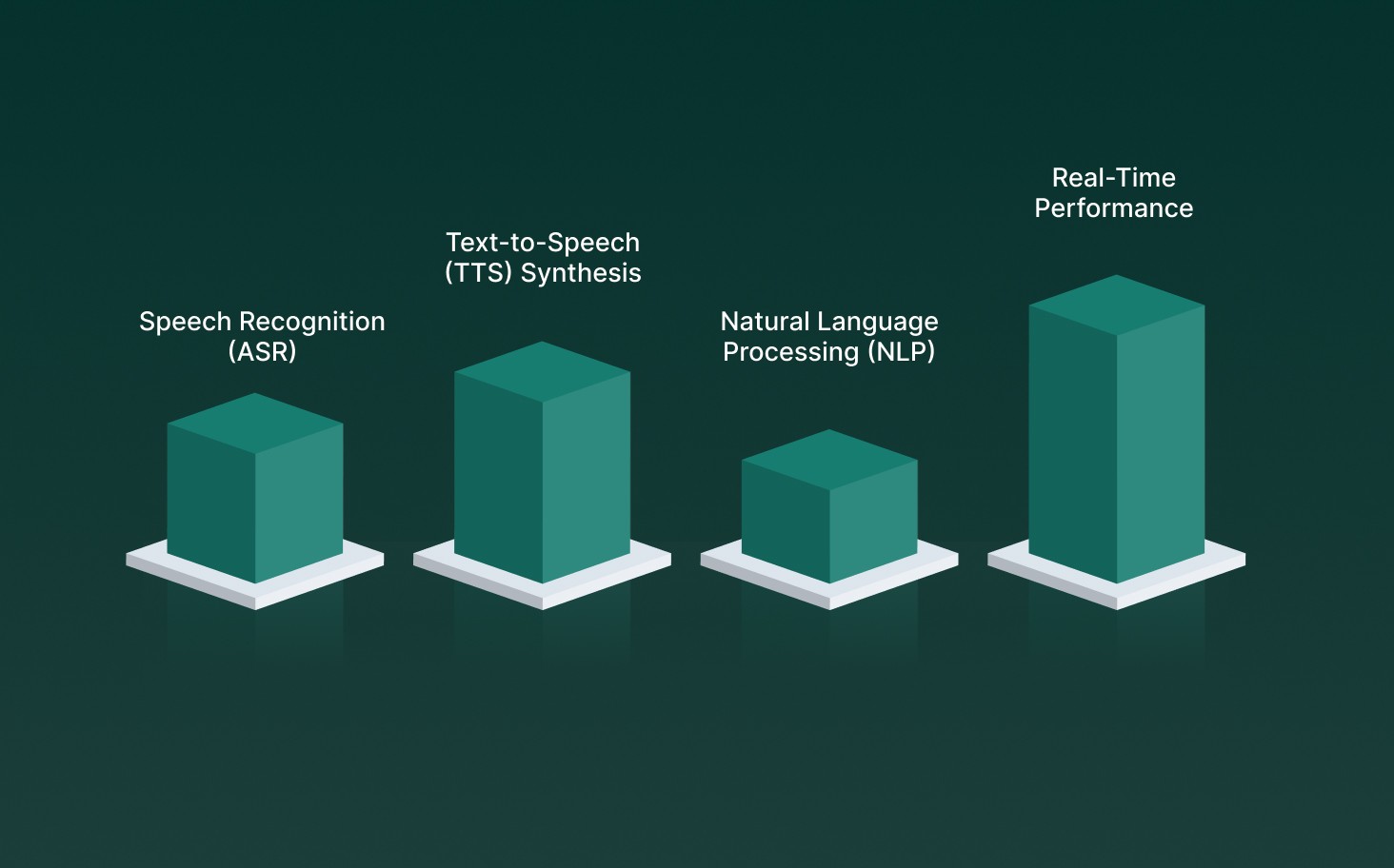

Key components include Speech Recognition, Text-to-Speech synthesis, and Natural Language Processing (NLP) for enhanced conversation flow.

Popular use cases: customer support automation, content creation, accessibility, and healthcare appointment scheduling.

Benefits: better customer experience, higher operational efficiency, greater scalability, lower costs, and actionable data insights.

Future trends: real-time low-latency systems, personalized voices, multi-language support, and voice biometrics for enhanced security.

What is Speech Technology?

Speech technology enables machines to interact with humans using spoken language, bridging the gap between human communication and artificial intelligence. It relies on two key components: Speech Recognition, which converts spoken words into text, and Text-to-Speech (TTS), which generates human-like speech from text.

Beyond basic conversion, this technology creates natural, responsive, and personalized interactions. With advances in natural language processing (NLP) and machine learning, speech technology is evolving to support more complex, emotional, and multilingual conversations.

How Does Speech Technology Work?

Speech technology combines multiple components to create seamless, real-time voice interactions. Here's a breakdown of the process:

1. Audio Input and Feature Extraction

The process begins by capturing raw sound from a microphone. The audio is then cleaned up (noise reduction, volume normalization) and split into short, overlapping frames. These frames are transformed into feature representations such as Mel-Frequency Cepstral Coefficients (MFCCs), which machine learning models use to interpret the audio.
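
To make the framing step concrete, here is a minimal Python sketch using only the standard library. It assumes common settings (16 kHz audio, 25 ms frames with a 10 ms hop, a Hamming window) and stops short of a full MFCC pipeline, which would continue with an FFT, a mel filterbank, a logarithm, and a discrete cosine transform. The helper names are illustrative, not taken from any particular library.

```python
import math

# Assumed, commonly used settings: 16 kHz audio, 25 ms frames, 10 ms hop.
SAMPLE_RATE = 16_000
FRAME_MS = 25
HOP_MS = 10


def frame_signal(samples: list[float], sample_rate: int = SAMPLE_RATE,
                 frame_ms: int = FRAME_MS, hop_ms: int = HOP_MS) -> list[list[float]]:
    """Split a waveform into overlapping, Hamming-windowed frames."""
    frame_len = sample_rate * frame_ms // 1000   # 400 samples at 16 kHz
    hop_len = sample_rate * hop_ms // 1000       # 160 samples at 16 kHz
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        chunk = samples[start:start + frame_len]
        frames.append([s * w for s, w in zip(chunk, window)])
    return frames


def hz_to_mel(hz: float) -> float:
    """HTK-style mel scale, used to space the centres of MFCC filterbanks."""
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def log_energy(frame: list[float]) -> float:
    """Per-frame log energy, often appended to MFCC feature vectors."""
    return math.log(sum(s * s for s in frame) + 1e-10)


# One second of a 440 Hz tone as stand-in microphone input.
tone = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
frames = frame_signal(tone)
print(len(frames))               # 98 frames
print(round(hz_to_mel(1000.0)))  # 1000
```

The mel scale is roughly linear below 1 kHz and logarithmic above it, which is why 1000 Hz maps to about 1000 mel.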

2. Automatic Speech Recognition (ASR)

ASR converts spoken words into text. Modern ASR systems use deep neural networks, trained on large speech datasets, to recognize speech patterns.

Components:

Acoustic Model: Maps audio features to phonetic units, using models like CNNs, RNNs, or Transformer-based architectures.

Language Model (LM): Helps resolve ambiguities by assigning probabilities to word sequences, ensuring the text makes sense in context.

Decoder: Combines the acoustic and language model outputs to select the most likely transcription.

End-to-End Models: Many recent ASR systems are end-to-end, folding these components into a single neural network, which simplifies training and often improves accuracy.
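
The decoder's job of weighing acoustic evidence against language-model plausibility can be illustrated by rescoring a short list of candidate transcriptions. This is a toy sketch: the scores, the bigram table, and the `lm_weight` value are made up, and real decoders run beam search over partial hypotheses rather than comparing two finished sentences.

```python
import math

# Hypothetical acoustic-model log-probabilities for two hypotheses
# that sound almost identical.
acoustic_scores = {
    "recognize speech": math.log(0.40),
    "wreck a nice beach": math.log(0.45),
}

# Toy bigram language model: P(word | previous word). Values are invented.
bigram = {
    ("<s>", "recognize"): 0.02, ("recognize", "speech"): 0.30,
    ("<s>", "wreck"): 0.001, ("wreck", "a"): 0.05,
    ("a", "nice"): 0.01, ("nice", "beach"): 0.02,
}
UNSEEN = 1e-6  # fallback probability for bigrams missing from the table


def lm_log_prob(sentence: str) -> float:
    """Sum of bigram log-probabilities, starting from a sentence-start token."""
    words = ["<s>"] + sentence.split()
    return sum(math.log(bigram.get(pair, UNSEEN)) for pair in zip(words, words[1:]))


def decode(candidates: dict[str, float], lm_weight: float = 0.5) -> str:
    """Pick the hypothesis maximising log P_AM + lm_weight * log P_LM."""
    return max(candidates, key=lambda h: candidates[h] + lm_weight * lm_log_prob(h))


print(decode(acoustic_scores, lm_weight=0.0))  # wreck a nice beach
print(decode(acoustic_scores, lm_weight=0.5))  # recognize speech
```

With the language model switched off, the acoustically slightly stronger but nonsensical "wreck a nice beach" wins; adding the language-model term lets the plausible "recognize speech" take over.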

3. Natural Language Processing (NLP)

Once raw text is produced by ASR, NLP processes it to derive meaning, identify intent, and manage conversation flow.

Core NLP Tasks

Tokenization: Splitting the text into meaningful chunks (words or phrases).

Named Entity Recognition (NER): Identifying specific items in the text, such as names, places, or dates.

Dependency Parsing: Understanding the grammatical structure and relationships between words.

Sentiment Analysis: Understanding the tone or emotional content of the text.

Intent Classification: Determining the underlying action or request in the conversation (e.g., "book a flight" or "play music").
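
As a rough illustration of tokenization, intent classification, and entity extraction working together, the sketch below uses keyword overlap and a regular expression. The intents, keywords, and helper names are hypothetical; production systems generally rely on trained models, and this version only shows the shape of the inputs and outputs.

```python
import re

# Hypothetical intents and trigger keywords; real systems typically use
# trained classifiers rather than keyword lists.
INTENT_KEYWORDS = {
    "book_flight": {"flight", "fly", "plane"},
    "play_music": {"play", "song", "music"},
    "book_appointment": {"appointment", "doctor", "schedule"},
}

WEEKDAYS = r"\b(monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b"


def tokenize(text: str) -> list[str]:
    """Lowercase word tokenization (a stand-in for a real tokenizer)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def classify_intent(tokens: list[str]) -> str:
    """Return the intent whose keywords overlap the tokens most, or 'unknown'."""
    scores = {intent: len(kw & set(tokens)) for intent, kw in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def extract_dates(text: str) -> list[str]:
    """A very small NER stand-in that only picks out weekday mentions."""
    return re.findall(WEEKDAYS, text.lower())


utterance = "I need to book a flight to Boston on Friday"
print(classify_intent(tokenize(utterance)))  # book_flight
print(extract_dates(utterance))              # ['friday']
```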

NLP models are also commonly applied to raw ASR output to improve transcript quality. They can restore punctuation, correct grammatical errors introduced by misrecognition, and adjust formatting, so that the final text is syntactically and semantically coherent.

4. Text-to-Speech (TTS) Synthesis

TTS converts written text back into speech. This involves predicting how the spoken version of the text should sound based on context, intonation, and emotion.

Text Processing: First, the input text is processed and converted into phonetic representations, often broken down into linguistic units like phonemes or syllables.

Acoustic Modeling: Neural networks predict acoustic features from the processed text. Models such as Tacotron or FastSpeech generate a detailed representation of speech (typically a mel spectrogram) that captures characteristics such as pitch, speed, and tone.

Vocoder: A vocoder converts the predicted acoustic features into a waveform that can be played as audio. Modern systems use neural vocoders such as WaveNet or WaveGlow to produce high-quality, natural-sounding speech.
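
The text-processing step can be sketched as normalization followed by a pronunciation lookup. In this hypothetical example, the abbreviation and number tables and the four-word lexicon are illustrative assumptions (pronunciations follow the ARPAbet convention used by the CMU Pronouncing Dictionary). Words missing from the lexicon are marked as out-of-vocabulary, where a real system would fall back to a learned grapheme-to-phoneme model.

```python
# Tiny hypothetical normalization tables; real front ends cover far more cases
# (dates, currencies, and context-dependent abbreviations such as "Dr." = drive).
ABBREVIATIONS = {"dr.": "doctor", "st.": "street"}
NUMBERS = {"1": "one", "2": "two", "10": "ten"}

# ARPAbet pronunciations in the style of the CMU Pronouncing Dictionary.
LEXICON = {
    "doctor": ["D", "AA1", "K", "T", "ER0"],
    "smith": ["S", "M", "IH1", "TH"],
    "at": ["AE1", "T"],
    "ten": ["T", "EH1", "N"],
}


def normalize(text: str) -> list[str]:
    """Expand abbreviations and digits into speakable words."""
    words = []
    for raw in text.lower().split():
        if raw in ABBREVIATIONS:
            words.append(ABBREVIATIONS[raw])
        else:
            token = raw.strip(".,!?")
            words.append(NUMBERS.get(token, token))
    return words


def to_phonemes(words: list[str]) -> list[str]:
    """Look up each word; unknown words are flagged for a G2P model."""
    phonemes = []
    for word in words:
        phonemes.extend(LEXICON.get(word, [f"<oov:{word}>"]))
    return phonemes


words = normalize("Dr. Smith at 10.")
print(words)  # ['doctor', 'smith', 'at', 'ten']
print(to_phonemes(words))
```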

TTS technology has evolved from earlier rule-based synthesis to modern neural models that can adjust speech dynamics, such as pitch, rate, and emotional tone, to sound more human-like.
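
Many TTS engines let applications request these adjustments through SSML, the W3C Speech Synthesis Markup Language. The sketch below builds an SSML document with Python's `xml.etree.ElementTree`. Which `<prosody>` values a given engine honors varies by vendor, and emotional tone usually requires vendor-specific extensions, so treat this as an assumption-laden example rather than any specific product's API.

```python
import xml.etree.ElementTree as ET


def build_ssml(text: str, rate: str = "medium", pitch: str = "default") -> str:
    """Wrap text in an SSML <prosody> element that sets speaking rate and pitch."""
    speak = ET.Element("speak", {
        "version": "1.1",
        "xmlns": "http://www.w3.org/2001/10/synthesis",
        "xml:lang": "en-US",  # assumed language for the example
    })
    prosody = ET.SubElement(speak, "prosody", {"rate": rate, "pitch": pitch})
    prosody.text = text
    return ET.tostring(speak, encoding="unicode")


print(build_ssml("Your appointment is confirmed.", rate="slow", pitch="+10%"))
```

Building the markup with ElementTree rather than string concatenation means characters such as `&` in the text are escaped automatically, so the output stays valid XML.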

5. Real-Time Performance and Latency Optimization

Real-time speech systems, such as voice assistants or IVR systems, need to process input and generate output quickly enough that the conversation feels natural, with no noticeable pause before each reply.

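Latency Requirements: Every stage must add as little delay as possible. Targets of around 100 milliseconds are common for individual components, such as the time a TTS model takes to produce its first audio, so that the full cycle of recognition, processing, and synthesis does not leave noticeable gaps in the conversation.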

Parallel Processing: Speech systems are designed to handle many audio streams simultaneously, which is particularly important for high-volume deployments like customer service lines or automated phone systems.

Edge vs Cloud Processing: For low-latency applications, edge computing processes speech data locally on the device. However, larger ASR and TTS models may need to run in the cloud, which adds network round-trip delay.
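
A simple latency budget helps show why each stage's speed matters and how edge versus cloud placement changes the total. All numbers here are hypothetical, and charging one network round trip per remote stage is a deliberate simplification.

```python
# Hypothetical per-stage latencies (ms) for one conversational turn, measured
# from the end of the caller's speech to the first audio of the reply.
STAGE_MS = {"asr": 150, "nlp": 250, "tts": 100}

# Assumed extra delay for each stage that runs in the cloud instead of on the
# device. Treating every remote stage as one full round trip is a simplification.
NETWORK_ROUND_TRIP_MS = 60


def turn_latency(stages: dict[str, int], cloud_stages: set[str]) -> int:
    """Total time before the caller hears a reply, in milliseconds."""
    remote = cloud_stages & set(stages)
    return sum(stages.values()) + NETWORK_ROUND_TRIP_MS * len(remote)


all_cloud = set(STAGE_MS)
asr_on_edge = all_cloud - {"asr"}
print(turn_latency(STAGE_MS, all_cloud))    # 680
print(turn_latency(STAGE_MS, asr_on_edge))  # 620
```

Even with a 100 ms TTS stage, the full turn is several times longer, which is why latency has to be managed across the whole pipeline. The sketch also assumes on-device ASR runs as fast as the cloud version, which is often not the case on constrained hardware.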

These components work together to deliver human-like interactions in real-time, enabling enterprises to engage with customers more effectively.

Popular Use Cases for Speech Technology

Speech technology is revolutionizing how businesses and industries interact with customers and users. Below are some of the most impactful use cases:

1. Customer Service & Support

Voice Bots & IVR Systems: Automate routine inquiries, reduce wait times, and lower operational costs by providing 24/7 support.

Real-Time Assistance: AI-driven systems offer instant responses, ensuring improved customer satisfaction and quicker issue resolution.

2. Content Creation

Audiobooks & Podcasts: Generate lifelike, expressive narrations at scale, reducing the time and cost of manual voice recording.

Video Voiceovers: Create professional-quality voiceovers for marketing videos, training materials, and promotional content.

3. Accessibility & Inclusion

Speech-to-Text Transcriptions: Provide real-time captions for individuals with hearing impairments, enabling them to access audio content.

Voice-Activated Assistants: Offer hands-free control for users with physical disabilities or those needing accessibility support.

4. Smart Devices & Consumer Tech

Virtual Assistants: Power smart speakers, home automation, and mobile devices with intuitive voice interaction.

Voice Commands: Simplify user interaction with appliances, vehicles, and other consumer tech through hands-free control.

5. Healthcare & Professional Services

Appointment Scheduling: Automate patient bookings and inquiries, streamlining the scheduling process for healthcare providers.

Telemedicine: Enable real-time voice interaction between patients and healthcare providers, minimizing the need for in-person visits and increasing convenience.

By automating tasks, enhancing accessibility, and offering more natural interactions, speech technology is reshaping the way industries deliver services and engage with customers.

Benefits and Business Impact of Speech Technology

Speech technology offers a wide range of advantages that drive significant business outcomes. Here are the key benefits:

24/7 Availability: AI-powered voice systems provide round-the-clock support, reducing wait times and ensuring customers can get help whenever they need it.

Enhanced Customer Experience: Real-time, natural-sounding voice interactions make conversations feel more human and less transactional, increasing customer satisfaction and engagement.

Improved Operational Efficiency: Routine queries and repetitive tasks can be handled automatically, allowing human agents to focus on complex or high-value interactions.

Cost Reduction: Automating large volumes of voice interactions lowers the need for extensive support teams and reduces operational overhead.

Increased Scalability: Speech systems can manage thousands of simultaneous interactions without compromising quality, supporting business growth without proportional staffing increases.

Global Reach: Multi-language and accent support allows businesses to serve diverse customer bases consistently across regions.

Actionable Data Insights: Voice interactions generate valuable data on customer behavior, intent, and pain points, helping organizations refine services and improve decision-making.

Stronger Competitive Advantage: Businesses that adopt advanced speech technology demonstrate innovation, deliver faster service, and build stronger brand loyalty compared to competitors relying on traditional systems.

What’s Next for Speech Technology? Key Trends to Watch

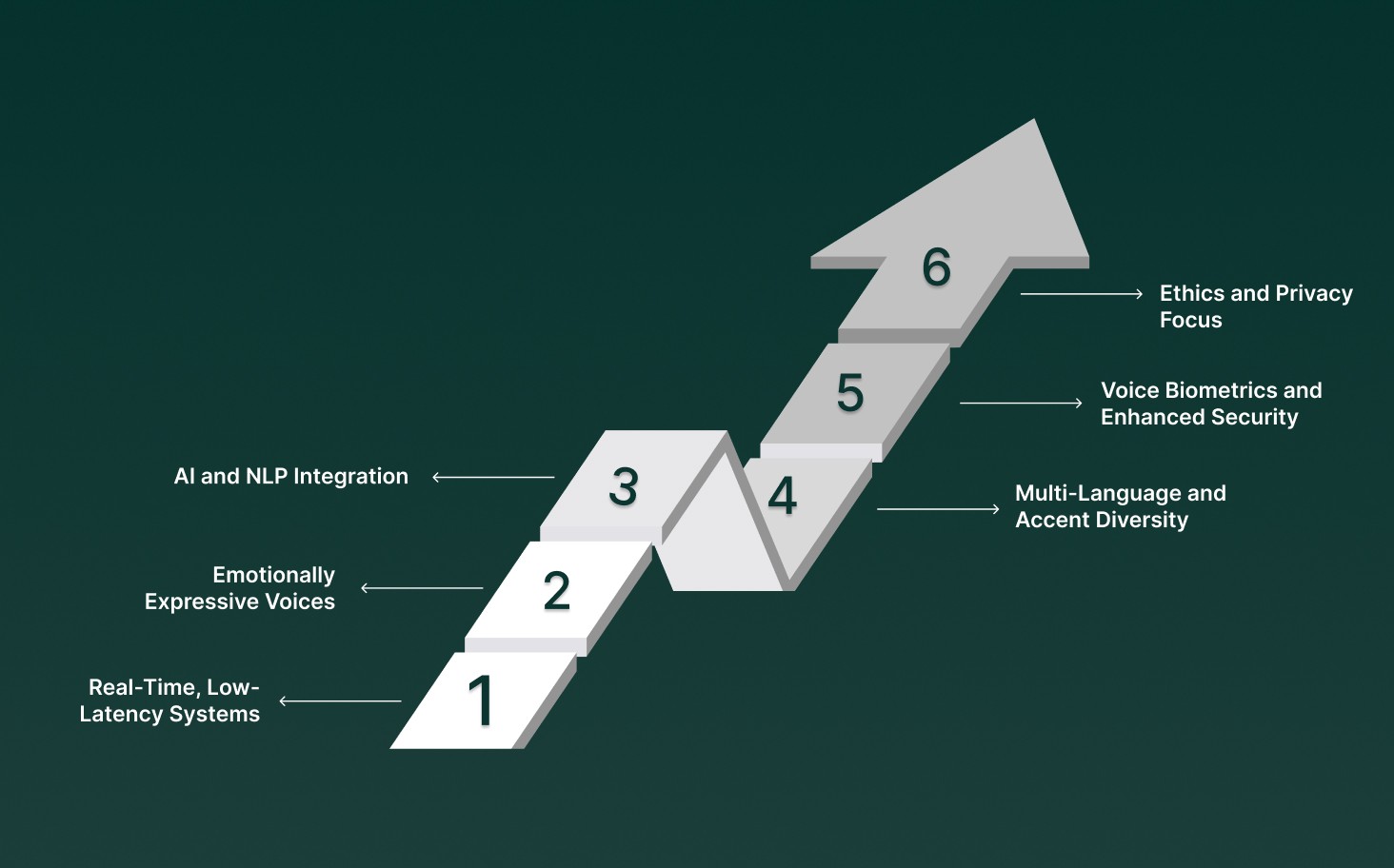

Speech technology is evolving at a rapid pace, opening new possibilities across industries. As we look ahead, here are the key trends transforming the landscape:

Real-Time, Low-Latency Systems: With sub-100ms latency increasingly common for individual components such as TTS, businesses can deliver near-instant voice interactions, improving real-time customer service and voice command responses.

Personalized and Emotionally Expressive Voices: The ability to inject emotion, tone, and personalization into voice interactions is making AI voices feel more human-like, driving richer, more engaging experiences.

AI and NLP Integration: Combining speech technology with AI and natural language processing enables more intelligent, context-aware conversations, allowing systems to understand nuanced intent and complex queries.

Multi-Language and Accent Diversity: As businesses expand globally, speech systems are evolving to support multiple languages and regional accents, making them more inclusive and effective in a wide range of markets.

Voice Biometrics and Enhanced Security: Speaker recognition is becoming a key part of security, adding layers of protection through voice authentication and fraud detection to make transactions more secure.

Ethics and Privacy Focus: With the rise of AI-generated voices, there’s a growing emphasis on protecting user data and ensuring ethical use, pushing forward regulations that govern the safe use of speech technology.
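
Voice authentication is commonly framed as comparing a speaker embedding captured at enrollment with one extracted from the live call. The sketch below shows only that comparison step, using cosine similarity. The embeddings and the 0.8 threshold are made-up assumptions, and a real system would also need anti-spoofing checks against recorded or synthetic audio.

```python
import math

# Hypothetical speaker embeddings; in practice these come from a speaker-
# verification model and have hundreds of dimensions.
enrolled = [0.12, 0.85, -0.33, 0.40]
same_caller = [0.10, 0.80, -0.30, 0.45]
other_caller = [-0.60, 0.10, 0.70, -0.20]

THRESHOLD = 0.8  # assumed; tuned on real data to balance false accepts and rejects


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(reference: list[float], probe: list[float], threshold: float = THRESHOLD) -> bool:
    """Accept the caller if their embedding is close enough to the enrolled one."""
    return cosine_similarity(reference, probe) >= threshold


print(verify(enrolled, same_caller))   # True
print(verify(enrolled, other_caller))  # False
```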

Challenges in Implementing Speech Technology

Implementing speech technology comes with its own set of obstacles that organizations must address to ensure smooth deployment and reliable performance. Below are some of the key risks, along with strategies to mitigate them.

1. Accuracy and Error Handling

Risk: Speech recognition systems may misinterpret accents, slang, fast speech, or background noise, leading to incorrect transcriptions and flawed responses.

Mitigation: Train models on diverse, real-world datasets and continuously retrain them to improve performance across edge cases. Add fallback mechanisms such as confidence scoring and human handoff when the system detects uncertainty.
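
Confidence scoring with human handoff can be expressed as a small decision policy. This is a sketch under stated assumptions: the thresholds, the retry limit, and the `AsrResult` type are hypothetical, since the confidence scale and its reliability depend on the ASR engine in use.

```python
from dataclasses import dataclass

# Assumed thresholds; real deployments tune these per use case.
CONFIRM_BELOW = 0.85
HANDOFF_BELOW = 0.60
MAX_RETRIES = 2


@dataclass
class AsrResult:
    transcript: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical ASR engine


def next_action(result: AsrResult, retries: int) -> str:
    """Decide whether to proceed, confirm with the caller, re-prompt, or hand off."""
    if result.confidence >= CONFIRM_BELOW:
        return "proceed"
    if result.confidence >= HANDOFF_BELOW:
        return "confirm"  # e.g. "Did you say ...?"
    if retries < MAX_RETRIES:
        return "reprompt"  # ask the caller to repeat
    return "handoff_to_agent"


print(next_action(AsrResult("cancel my order", 0.93), retries=0))   # proceed
print(next_action(AsrResult("cancel my border", 0.72), retries=0))  # confirm
print(next_action(AsrResult("???", 0.30), retries=2))               # handoff_to_agent
```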

2. Language and Accent Diversity

Risk: Systems often struggle with multilingual environments, regional accents, and code-switching between languages.

Mitigation: Use multilingual models trained on globally diverse speech datasets and continuously fine-tune them for regional variations. Design systems that can dynamically adapt language models based on user location or interaction history.
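
One way to adapt language models dynamically is to route each call to a model based on interaction history and location. The model identifiers and the precedence order below (most recent language first, then locale, then any model for the same base language, then a multilingual fallback) are assumptions chosen for illustration.

```python
from typing import Optional

# Hypothetical model identifiers; substitute the models your ASR provider offers.
MODELS = {
    "en-US": "asr-en-us",
    "en-IN": "asr-en-in",
    "hi-IN": "asr-hi-in",
    "es-MX": "asr-es-latam",
}
MULTILINGUAL_FALLBACK = "asr-multilingual"  # also a reasonable choice for code-switching


def pick_asr_model(caller_locale: Optional[str], history: list[str]) -> str:
    """Choose a model from the caller's latest language, then their locale,
    then any model sharing the base language, then a multilingual fallback."""
    candidates = history[-1:] + ([caller_locale] if caller_locale else [])
    for locale in candidates:
        if locale in MODELS:
            return MODELS[locale]
        base = locale.split("-")[0]
        for known, model in MODELS.items():
            if known.split("-")[0] == base:
                return model
    return MULTILINGUAL_FALLBACK


print(pick_asr_model("en-GB", []))         # asr-en-us
print(pick_asr_model("en-US", ["hi-IN"]))  # asr-hi-in
print(pick_asr_model("fr-FR", []))         # asr-multilingual
```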

3. Privacy and Data Security

Risk: Voice interactions frequently include sensitive personal or financial information, increasing the risk of breaches and regulatory violations.

Mitigation: Encrypt voice data in transit and at rest while enforcing strict access controls and audit logging. Ensure compliance with data protection rules such as GDPR, HIPAA, or CCPA depending on the industry.
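
Access control and audit logging can be combined so that every attempt to retrieve a recording is logged, whether or not it succeeds. The roles and the in-memory `RecordingStore` below are hypothetical. Encryption at rest is left to the storage layer, since Python's standard library does not include a symmetric cipher.

```python
import json
import time
from dataclasses import dataclass, field

# Hypothetical roles allowed to play back raw call recordings.
ALLOWED_ROLES = {"compliance_officer", "qa_reviewer"}


@dataclass
class RecordingStore:
    recordings: dict[str, bytes]
    audit_log: list[str] = field(default_factory=list)

    def fetch(self, recording_id: str, user: str, role: str) -> bytes:
        """Return a recording only for permitted roles; log every attempt."""
        allowed = role in ALLOWED_ROLES
        self.audit_log.append(json.dumps({
            "ts": time.time(), "user": user, "role": role,
            "recording": recording_id, "granted": allowed,
        }))
        if not allowed:
            raise PermissionError(f"role {role!r} may not access recordings")
        return self.recordings[recording_id]


store = RecordingStore({"call-1": b"\x00\x01"})
store.fetch("call-1", "ana", "qa_reviewer")
try:
    store.fetch("call-1", "bob", "agent")
except PermissionError as err:
    print(err)  # role 'agent' may not access recordings
print(len(store.audit_log))  # 2
```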

4. Computational Power and Latency

Risk: Real-time ASR and TTS require significant processing power, which can introduce latency in high-volume or large-scale deployments.

Mitigation: Optimize models for low-latency inference using compression, quantization, or streaming architectures. Combine edge processing with scalable cloud infrastructure to balance speed and cost.
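
Quantization can be illustrated with symmetric per-tensor int8 quantization, which is one common scheme among several. In this minimal sketch, each weight is divided by a scale chosen so the largest magnitude maps to 127, then rounded. Storing int8 values instead of 32-bit floats cuts weight memory roughly fourfold, at the cost of a rounding error of at most half the scale per weight.

```python
# Symmetric per-tensor int8 quantization: q = round(w / scale),
# with scale chosen so that the largest |w| maps to 127.


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]


weights = [0.50, -1.27, 0.003, 0.90]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 90]
print(dequantize(q, scale))
```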

5. Ethical Concerns and Deepfakes

Risk: Voice cloning and synthetic speech technologies can be misused for impersonation, fraud, or misinformation.

Mitigation: Implement voice authentication, watermarking, or synthetic speech detection mechanisms to reduce misuse. Restrict voice cloning capabilities to verified users and enforce strict usage policies.

How Smallest.ai Enables Real-Time, Scalable, and Explainable Voice AI

Smallest.ai ensures explainability and scalability in live voice interactions, automating processes across industries while maintaining transparency and compliance with regulations.

Speech-to-Text Intelligence: Convert live conversations into text to detect sentiment, emotional cues, and intent in real time. Speaker diarization distinguishes between speakers, helping teams better understand interactions and respond more effectively.

Multilingual Reach: Engage customers across the globe with support for 16+ languages, enabling consistent communication in diverse markets.

Emotion-Aware AI Voice Agents: Deliver responsive, human-like interactions through AI agents designed to recognize tone and adapt conversations in real time.

Flexible Customization: Tailor voice agents to reflect your brand’s personality, including tone, accent, and speaking style for a more natural customer experience.

Seamless System Integration: Connect effortlessly with existing tools like CRMs, help desks, and enterprise workflows to ensure smooth operations.

Scalable for Any Business Size: Whether supporting a startup or a global enterprise, the platform handles high call volumes without compromising performance.

Enterprise-Grade Security: Maintain trust and compliance with robust data protection aligned with SOC 2 Type II, HIPAA, and PCI standards.

High-Fidelity Voice Cloning: Create studio-quality voice replicas from just seconds of audio, ideal for voiceovers, virtual assistants, and branded audio experiences.

Smallest.ai delivers explainable voice AI by ensuring real-time execution with transparent, audit-friendly processes.

Final Thought

As speech technology continues to evolve, the key to success isn’t just the sophistication of the AI, but how seamlessly it integrates into existing workflows and how effectively it enhances real-world interactions. With speech tech, the ability to deliver accurate, human-like responses in real-time, while maintaining full control over performance and outcomes, is what truly sets businesses apart.

This is where Smallest.ai excels. Its real-time, scalable solutions, such as Waves and Atoms, give businesses the power to automate interactions while ensuring that every speech-driven conversation remains transparent, measurable, and compliant.

If you’re ready to bring speech automation into your organization without sacrificing control, reach out to a Smallest.ai expert to see how it can work for you.