Learn how speech recognition in AI works, its top use cases, core components, challenges, and future advancements in real-time, multilingual automation.

Rishabh Dahale

Updated on

January 27, 2026 at 5:38 AM

Ever found yourself wishing your systems could just understand what people are saying without hours of manual intervention, especially when every second of audio matters? That’s exactly the moment teams start searching for speech recognition in AI. Whether it’s scaling up automation, handling nuanced conversations, or finally getting reliable transcripts on the first pass, speech recognition becomes a strategic priority when accuracy and productivity are on the line.

And the market is responding; the global speech and voice recognition market is projected to grow from USD 9.66 billion in 2025 to approximately USD 23.11 billion by 2030, at a compound annual growth rate (CAGR) of 19.1%, driven in large part by increased adoption of real-time transcription, voice interfaces, and voice-enabled automation solutions.

If you’re exploring speech recognition to improve speed, consistency, or operational resilience, you’re asking the right questions at the right time.

In this guide, we’ll walk through how speech recognition works, its core components, real-world applications, and what to look for when adopting it in production.

Key Takeaways

Neural Architectures Drive ASR Accuracy: Modern ASR uses CTC, RNN-T, and transformer models to balance latency with high-accuracy transcription across domains.

Real-Time ASR Responds Within Milliseconds: Streaming pipelines emit partial tokens every few milliseconds, allowing intent detection within 300 ms.

Telephony Audio Creates Recognition Challenges: VoIP compression and background noise distort phonemes, increasing substitution and deletion errors in real interactions.

Deterministic Normalization Protects Data Integrity: Generic normalization breaks structured fields; ASR requires rule-based rewriting for dates, amounts, and alphanumerics.

ASR Is Moving Toward Sub-100 ms Latency: Future systems target ultra-low latency, multimodal signals, and on-device inference for speed and compliance.

What is Speech Recognition in AI?

Speech recognition in AI refers to systems that convert spoken language into machine-readable text with high accuracy across accents, noise conditions, and real-time environments. Modern architectures rely on neural acoustic models, large-scale language models, and streaming inference pipelines that support instant transcription for contact centers, voice automation, and enterprise workflows.

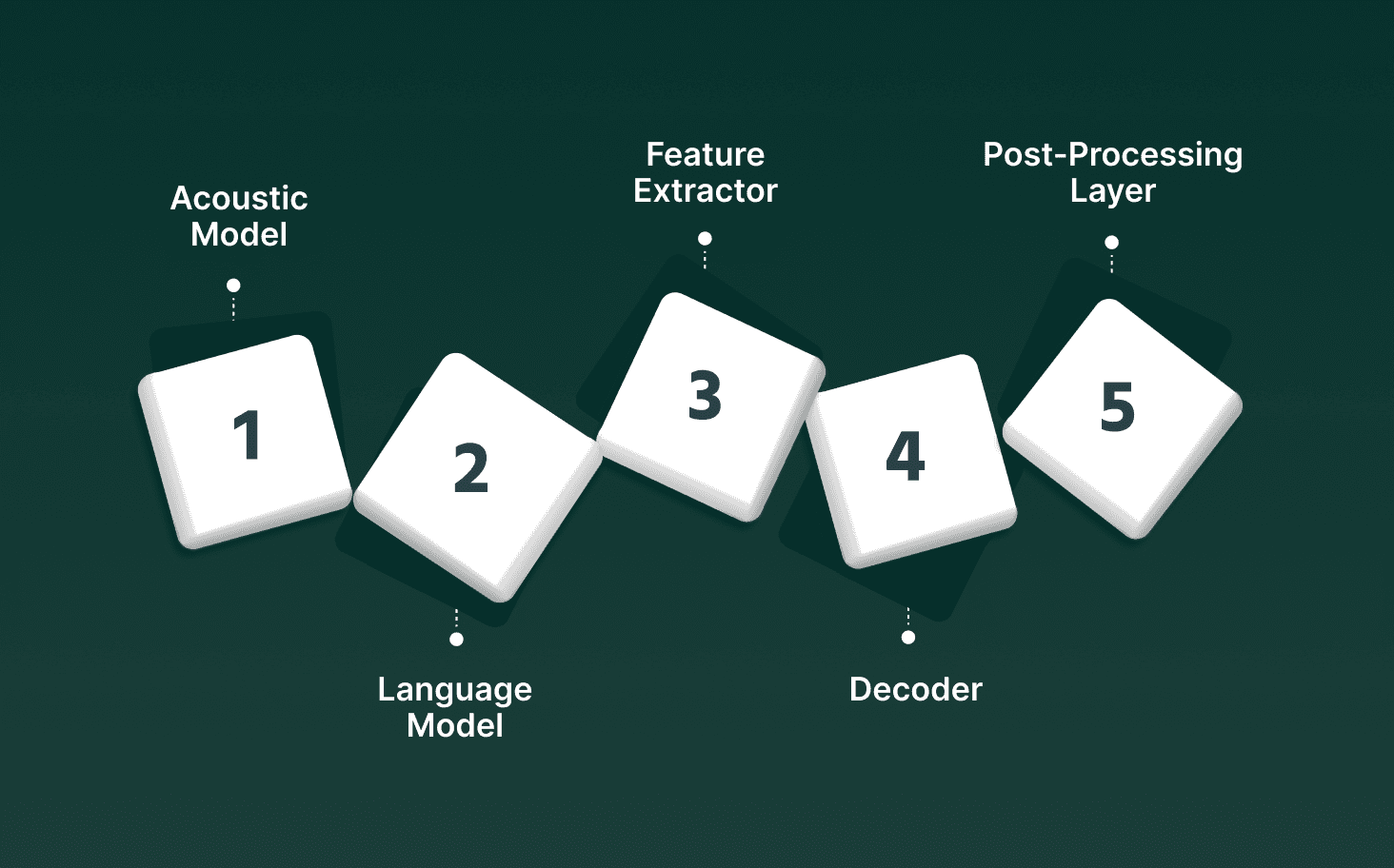

Core Components

Acoustic Model: Converts raw waveforms into probability distributions of phonemes using CNNs, RNN-T, or transformer encoders trained on large multilingual datasets.

Language Model: Predicts word sequences based on context to reduce substitution and insertion errors, often using transformer LMs optimized for domain-specific vocabulary.

Feature Extractor: Generates MFCCs, log-Mel spectrograms, or streaming embeddings that preserve temporal detail for accurate phoneme prediction.

Decoder: Aligns acoustic outputs with likely text sequences through CTC, RNN-T, or attention-based decoding, balancing latency with accuracy.

Post-Processing Layer: Applies punctuation, number formatting, profanity filters, diarization tags, and domain-specific replacements to produce production-ready text.

Speech recognition in AI operates as a precise, model-driven pipeline built to convert live or recorded speech into structured text with accuracy, speed, and domain awareness across enterprise environments.

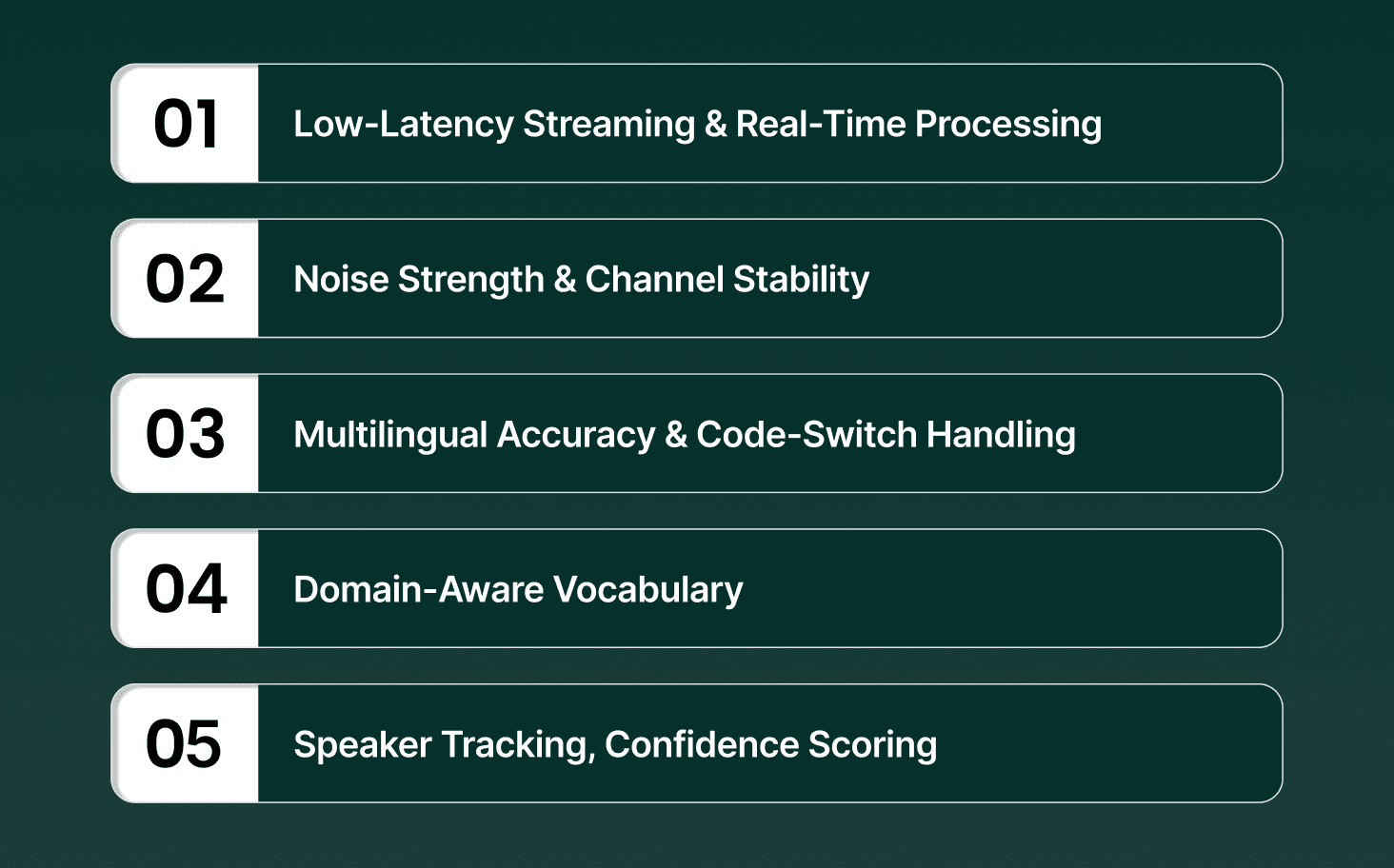

Key Features of Speech Recognition in AI

Speech recognition systems are built to process audio at scale with accuracy that holds under variable conditions such as background noise, accent shifts, channel distortion, and real-time latency requirements. Modern engines use neural encoders, domain-specific language models, and adaptive normalization pipelines to keep transcripts stable across enterprise workloads.

Low-Latency Streaming & Real-Time Processing: Supports transcription with partial hypotheses emitted every few milliseconds, allowing live routing, agent actions, and instant workflow triggers.

Noise Strength & Channel Stability: Maintains accuracy across VoIP compression, handset distortion, far-field mics, and background noise using augmentation techniques and noise-trained acoustic models.

Multilingual Accuracy & Code-Switch Handling: Processes quick language shifts within a single utterance through mixed-token vocabularies and joint acoustic modeling suitable for diverse speech patterns.

Domain-Aware Vocabulary & Structured Output Formatting: Recognizes domain-heavy terms (IDs, SKU codes, medication names) and applies consistent punctuation, dates, and numeric formatting for automation-ready text.

Speaker Tracking, Confidence Scoring & Scalable Architecture: Separates speakers through diarization, assigns token-level confidence scores for verification logic, and supports high-concurrency workloads via GPU or mixed-precision inference.

Speech recognition in AI centers on precise, latency-aware processing that converts speech into structured text reliably across domains, languages, and real-world acoustic conditions.

To see how speech recognition connects with automated calling systems, explore What Are AI Phone Agents and How They Work.

Top Use Cases of Speech Recognition in AI

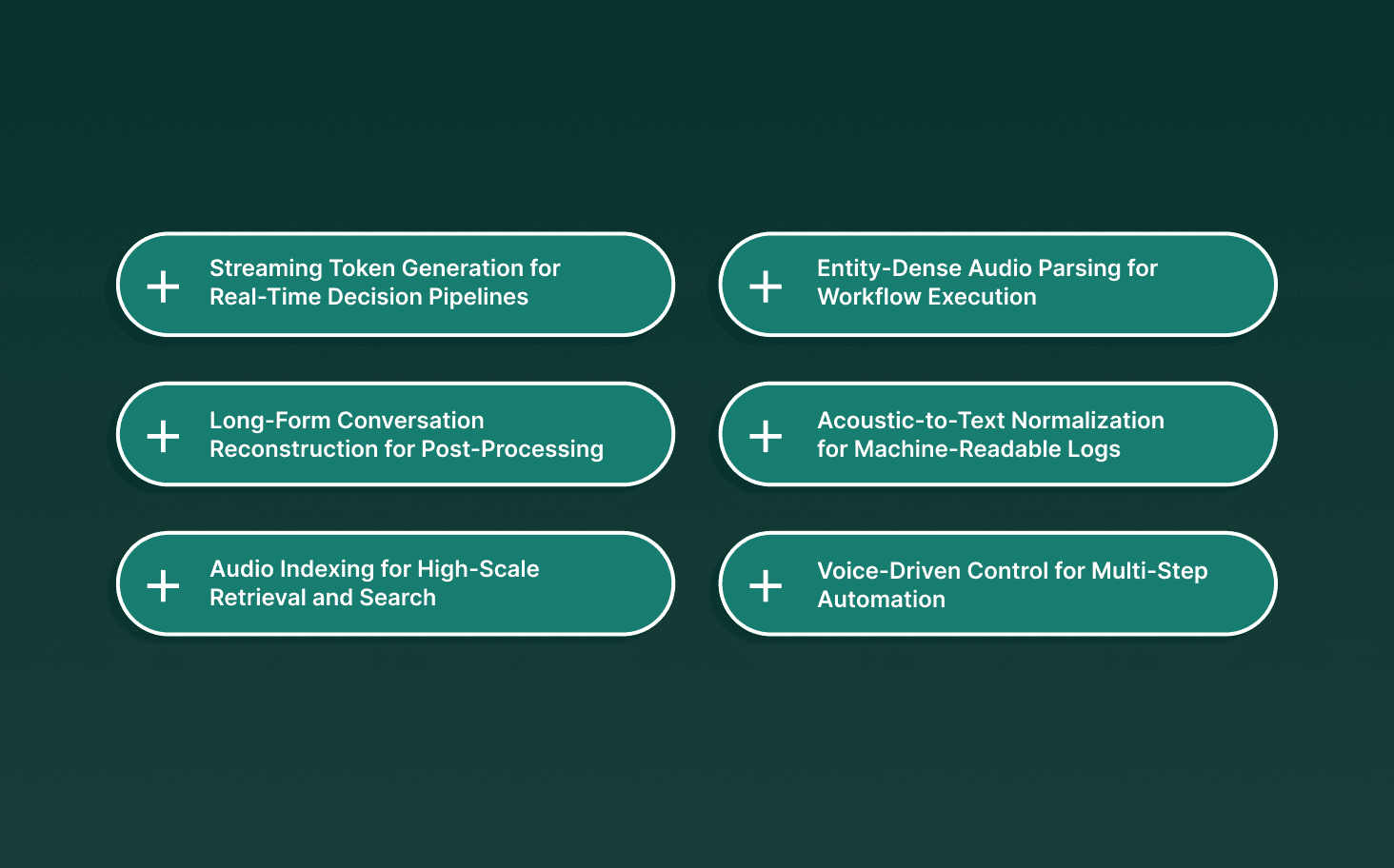

Speech recognition supports systems that depend on time-aligned token streams, stable entity extraction, deterministic formatting, and long-context reconstruction. These use cases reflect how ASR functions inside automation engines, LLM pipelines, and audio-processing infrastructures.

1. Streaming Token Generation for Real-Time Decision Pipelines

ASR emits partial hypotheses every few milliseconds so downstream engines can act before an utterance finishes. This allows mid-sentence decision updates and prevents context lag in real-time systems.

Benefits

Early Intent Signals: Supplies incremental text that updates routing or dialog logic while the user is still speaking.

Low-Latency Conditioning: Feeds LLMs a continuous context to prevent misalignment or hallucination during live interactions.

Stable Alignment: Maintains monotonic token order required for reliable real-time inference.

Example: A transducer model emits “transfer me to…” within the first 300 ms of speech, allowing the system to preselect a routing path before the sentence completes.

2. Entity-Dense Audio Parsing for Workflow Execution

ASR extracts structured elements such as long digit sequences, alphanumeric identifiers, and timestamp phrases without language-model drift that could corrupt downstream automation.

Benefits

Precision Slot Filling: Captures structured values like “A91–B3–77” without autocorrection.

Canonical Output: Converts spoken variants of numbers or codes into machine-readable forms.

Ambiguity Resolution: Differentiates acoustically similar segments using specialized scoring and rewrite rules.

Example: ASR transcribes “four zero zero dash nine delta” as “400-9D” instead of an LM-biased guess like “400ID.”

3. Long-Form Conversation Reconstruction for Post-Processing

ASR produces time-stamped, speaker-consistent transcripts required for summarization, topic extraction, reasoning tracking, and multi-step context retention in longer interactions.

Benefits

Turn Accuracy: Preserves speaker separation even during overlap.

Context Stability: Minimizes drift across long segments to maintain reference continuity.

Precise Timestamping: Supports chronological reasoning and event detection.

Example: ASR aligns tokens to speaker turns in a 40-minute interaction, allowing an LLM to attribute commitments or questions to the correct speaker.

4. Acoustic-to-Text Normalization for Machine-Readable Logs

ASR outputs are normalized into deterministic formats, especially for digits, dates, and symbols, so automation systems can reliably ingest and transform them.

Benefits

Consistent Structure: Converts variants like “twenty three slash oh four” into “23/04.”

Deterministic Rewrites: Avoids LM-driven formatting deviations that break downstream processing.

Redaction Compatibility: Produces stable token boundaries for sensitive-data masking.

Example: Normalization maps “five hundred and six dollars” to “$506.00” using predefined rewrite rules instead of probabilistic inference.

5. Audio Indexing for High-Scale Retrieval and Search

ASR transforms long-form audio into searchable text streams with timestamp alignment, allowing phrase-level navigation and retrieval across large datasets.

Benefits

High-Recall Searchability: Supports exact and semantic phrase retrieval across hours of audio.

Timestamp Linking: Allows instant playback of the exact audio moment tied to any token.

Hybrid Embedding Use: Combines text tokens and acoustic vectors for strong matching.

Example: A retrieval system surfaces every occurrence of the phrase “threshold exceeded” and opens each exact moment in the audio using ASR timestamps.

6. Voice-Driven Control for Multi-Step Automation

ASR interprets multi-parameter spoken commands, corrections, and chained steps, allowing complex workflows rather than simple one-step actions.

Benefits

Parameter Extraction: Identifies multi-part instructions in a single utterance.

Disfluency Handling: Interprets corrections without misfiring on earlier segments.

Confidence Gating: Pauses execution when ASR certainty drops below thresholds.

Example: The command “create entry, tag urgent, add note: follow-up in two days” is parsed into three distinct system actions with parameter boundaries preserved.

These use cases show how speech recognition provides precise, time-aligned, and structurally reliable outputs that allow real-time decision systems, retrieval engines, automation workflows, and long-context AI reasoning.

See how Smallest.ai delivers precise multilingual recognition, flawless numeric handling, and large-scale voice automation built for real operations. Get a demo today.

What To Know About ASR Technology

Automatic Speech Recognition (ASR) technology relies on neural architectures that convert continuous audio signals into structured text with strict latency, accuracy, and scalability requirements. Modern ASR engines focus on handling unpredictable speech patterns, acoustic variability, and domain-specific language constraints to support enterprise workflows.

Core Model Architectures for Accuracy and Latency: ASR uses CTC, RNN-Transducer, and transformer encoder–decoder models, each optimized for different latency–accuracy trade-offs in streaming and offline transcription.

Data Diversity & Tokenization Shape Model Performance: Accuracy depends on large, diverse datasets (accents, speaking rates, devices, noise) and subword tokenization methods like BPE or unigram models that support multilingual scripts.

Streaming vs. Non-Streaming Inference Modes: Streaming ASR generates incremental transcripts in real time, while non-streaming ASR processes full audio segments for higher accuracy and long-context stability.

Normalization, Formatting & Domain Mapping: ASR pipelines rely on deterministic rules to convert spoken dates, numbers, currencies, and alphanumerics into structured forms required for downstream automation.

Reliability Constraints: Latency, Acoustic Variability & Evaluation Metrics

Models must operate under 300–500 ms end-to-end latency, handle distortions like codec compression or reverberation, and are measured using WER, CER, and entity-specific accuracy metrics.

ASR technology combines architecture design, dataset diversity, latency controls, and domain-aware processing to produce accurate, structured text outputs across real-world audio conditions.

Challenges With Speech Recognition in AI

Speech recognition systems operate under strict accuracy, latency, and strength requirements. The challenges often surface in real-world telephony conditions, mixed-language speech, domain-dense vocabulary, and environments where formatting precision is critical for downstream automation.

Challenge | What Makes It Hard | Impact in Real Deployments |

Telephony Compression & Noise | VoIP codecs (G.711, Opus) distort high-frequency cues; background noise masks phonemes. | Higher substitution and deletion errors during support calls or IVR routing. |

Accents, Dialects & Speaking Variability | Quick shifts in pronunciation, pace, and prosody require diverse acoustic coverage. | Models misinterpret domain entities like account numbers, item names, or medication terms. |

Code-Switching | Mixed-language utterances break monolingual token prediction patterns. | Partial transcripts or dropped words are common in multilingual regions. |

Domain-Heavy Terminology | Policy IDs, SKU lists, alphanumeric strings, and medical terms fall outside general corpora. | Incorrect rendering of identifiers, regulatory statements, and operational instructions. |

Over-Normalization | Generic normalization rules distort structured data (dates, charges, claim codes). | Downstream systems receive inconsistent or unusable text. |

Real-Time Latency Constraints | Streaming ASR must produce partial hypotheses within 200–500 ms. | Slow transcripts block live agent assist, call routing, or automated actions. |

Speech recognition faces challenges rooted in signal quality, linguistic variability, domain specificity, and latency pressures, all of which must be addressed for accurate, production-grade enterprise use.

If you're exploring how voice technologies fit into your workflow, take the next step by reading Understanding What Text-to-Speech Is and How It Works.

Industry Examples of Speech Recognition in AI

Speech recognition supports sectors that rely on high-volume audio streams, regulated communication, structured data capture, and real-time interaction. Adoption centers on telephony, compliance operations, healthcare documentation, authentication, and field environments where manual transcription is impractical.

Contact Centers: Transcribes live calls for real-time QA, routing, sentiment tracking, and automated summaries. Systems identify policy numbers, order IDs, account verification phrases, and interruption patterns with high accuracy in telephony audio.

Healthcare: Captures physician dictation, clinical notes, medication names, and procedure terms using domain-adapted vocabularies. Reduces time spent on manual charting and supports structured EMR entry.

Financial Services: Supports call logging for audits, keyword detection for compliance, and transcription of claims, payment instructions, and KYC data. ASR engines must handle strict accuracy requirements for numbers and regulatory terms.

Insurance: Converts adjuster notes, claims calls, and field reports into searchable text. Models focus on coverage terms, damage descriptions, and long alphanumeric sequences.

Logistics and Field Services: Allows technicians to create hands-free job notes, equipment readings, and inspection reports in noisy outdoor or warehouse conditions.

Retail and E-Commerce: Transcribes support calls, voice-based order tracking, and product inquiries across mixed languages and varied customer accents.

Government and Public Safety: Processes emergency calls, incident descriptions, and radio communications where clarity, timestamp accuracy, and speaker separation are critical.

Speech recognition appears across sectors that depend on precise, timestamped, and domain-aware transcription to support operations, compliance, and real-time decision systems.

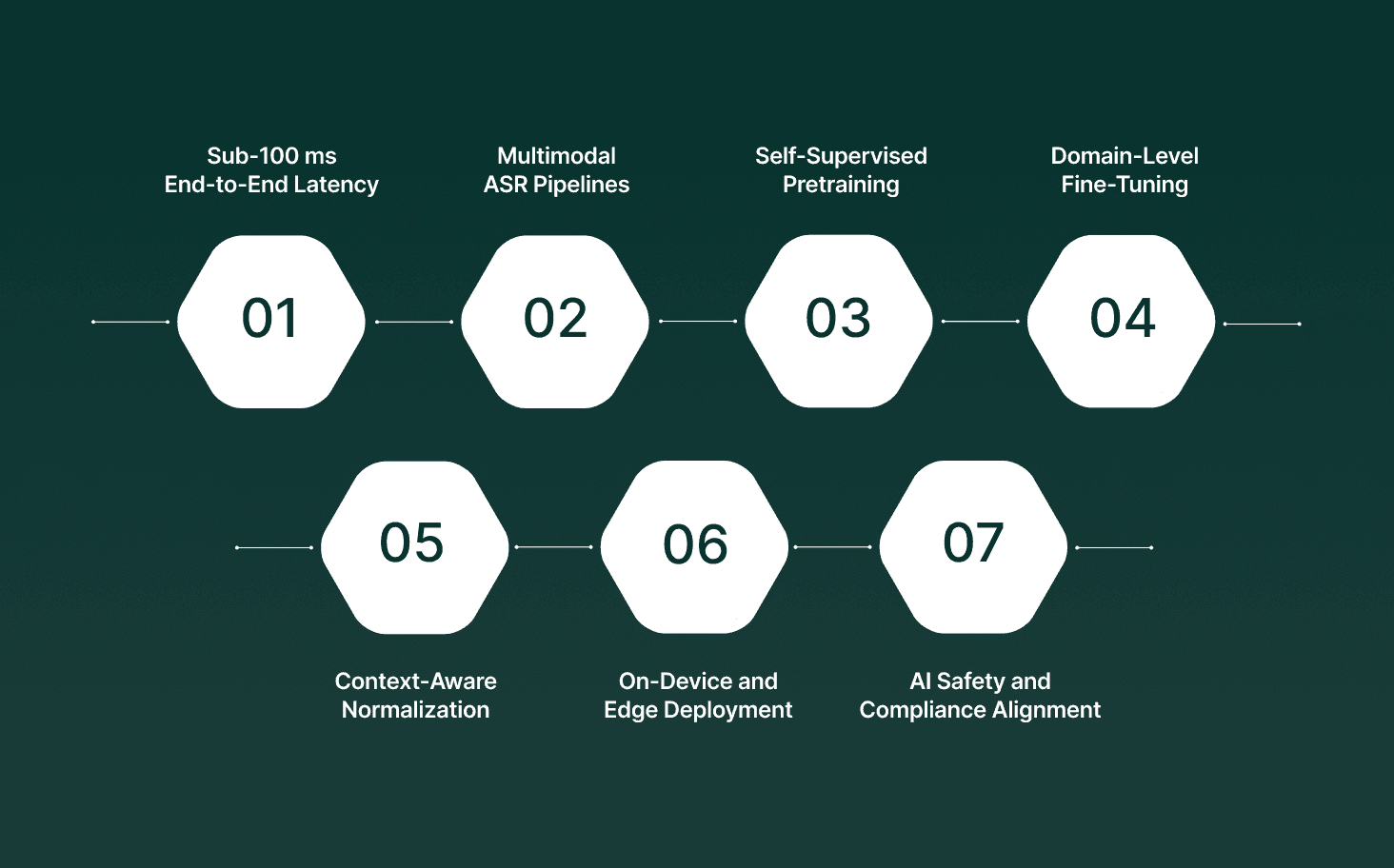

The Future of Speech Recognition in AI

The next stage of speech recognition centers on ultra-low latency models, domain-adaptive training pipelines, and multimodal systems that interpret speech alongside context signals such as sentiment, intent, and structured metadata.

Advances focus on accuracy under telephony noise, multilingual fluidity, compliance alignment, and tight integration with automation systems.

Sub-100 ms End-to-End Latency: Models will complete acoustic encoding, decoding, and formatting within tens of milliseconds, allowing real-time agent actions, dynamic call routing, and instantaneous voice-driven workflows.

Multimodal ASR Pipelines: Future engines will combine audio, text history, metadata, and domain graphs to reduce ambiguity, improving recognition of entity-heavy speech such as claim numbers, medication codes, or product SKUs.

Self-Supervised Pretraining at Scale: Large audio–text pretraining will allow ASR systems to generalize across unseen accents, low-resource languages, and spontaneous speech with fewer labeled datasets.

Domain-Level Fine-Tuning: Enterprises will train lightweight adapters rather than full models, allowing quick updates for new terminology, regulatory language, or seasonal vocabulary shifts.

Context-Aware Normalization: ASR outputs will automatically apply formatting rules based on industry context, such as replacing spoken tax IDs, policy sequences, or dosage instructions with standardized written forms.

On-Device and Edge Deployment: Smaller quantized models will run on telephony gateways, kiosks, or handheld devices where data locality is required for privacy, speed, or regulatory constraints.

AI Safety and Compliance Alignment: Systems will integrate redaction, bias checks, and automatic anomaly detection (e.g., misheard digits in financial or medical contexts) to meet audit and governance standards.

Speech recognition is moving toward latency-free inference, domain-aware accuracy, and multimodal intelligence that supports precise, compliant, and real-time automation across operational environments.

How Smallest.ai Strengthens Speech Recognition Capabilities

Smallest.ai strengthens speech recognition by supporting real-time, multilingual, entity-precise voice automation built for large-scale call handling, on-premise deployment, and enterprise security requirements. Its voice agents operate with high stability across complex workflows, numeric-heavy speech, and diverse accents, making ASR outputs more reliable for downstream automation.

Key Features

Custom Trained Voice Agents: Models are adapted to your data to improve recognition of domain-specific terms, numeric patterns, and frequently occurring phrasing, increasing reliability in specialized environments.

On-Premise Deployment: Supports running ASR and agent models on your hardware, allowing inference control, reduced latency, and strict alignment with compliance or data residency requirements.

High-Volume Concurrency: Built to manage thousands of simultaneous calls, maintaining transcript stability and timing accuracy across parallel audio streams.

Numeric Accuracy Guarantees: Voice models are engineered to handle digit sequences, credit cards, phone numbers, and multi-part codes with high precision, minimizing substitution or formatting errors.

Multilingual Recognition: Supports speech understanding across 16+ global languages, allowing accurate ASR for accents, mixed-language phrases, and regional pronunciation variations.

Complex Workflow Handling: Agents process hundreds of predefined corner cases, improving transcript continuity and reducing misinterpretation in long, branching conversational flows.

Analytics and Evaluation Layer: Provides detailed interaction logs and performance insights, allowing systematic review of ASR accuracy, entity stability, and turn-by-turn behavior.

Developer SDKs: Python, Node.js, and REST APIs integrate speech pipelines directly into telephony or backend systems without custom infrastructure overhead.

Smallest.ai strengthens speech recognition by delivering precise numeric handling, scalable real-time performance, multilingual accuracy, and customizable workflow behavior supported by enterprise-grade deployment and security controls.

Conclusion

Speech recognition in AI is becoming a foundational layer for teams that rely on accurate, real-time interpretation of unscripted speech. What matters most now is the stability of transcripts under real conditions, mixed accents, numeric sequences, noise, and high-volume workloads, rather than idealized accuracy in controlled settings.

If your team is exploring ways to strengthen reliability and automation quality, adopting speech recognition in AI through a platform engineered for operational depth can make a measurable difference. Smallest.ai provides voice agents and infrastructure built for precision, scale, and multilingual performance.

See how Smallest.ai can support your speech workflows. Get a demo today.

FAQs About Speech Recognition in AI

1. How does AI in speech recognition handle overlapping speakers?

Most systems use diarization models that create speaker embeddings, allowing AI in speech recognition to separate voices even when talking simultaneously.

2. Can AI in speech recognition detect uncertainty or hesitation in a user’s voice?

Yes. Modern models track acoustic cues like pauses, pitch changes, and elongated syllables, which help downstream systems interpret hesitation or uncertainty.

3. Does speech recognition AI example data include noisy or distorted audio during training?

High-quality datasets intentionally include background noise, reverberation, and codec artifacts so models perform reliably in real telephony conditions.

4. How does AI prevent misreading long numbers or codes in speech recognition tasks?

Specialized scoring rules, constrained decoding, and post-processing layers reduce autocorrection drift in digit-heavy utterances.

5. What is a real-world speech recognition AI example that doesn’t involve transcribing conversations?

A strong example is command parsing: AI interprets multi-step spoken instructions like “open file, rename it, then archive it,” triggering sequential automated actions.