A practical guide to generative AI in lending, covering execution, compliance, fraud risks, real-time voice, and on-prem deployment for modern credit teams.

Prithvi Bharadwaj

Updated on January 28, 2026 at 2:55 PM

Every lending team knows the moment. A borrower is on the line, confused or anxious, and the system pauses, routes, or asks for information that already exists. That silence costs trust. It is in these moments that generative AI in lending stops being a technology discussion and becomes an operational one, focused on timing, clarity, and control.

The urgency is reflected in the numbers. The generative AI in banking market is forecast to reach USD 24.86 billion by 2033, driven by lenders who need decisions to move at conversation speed. For leaders evaluating generative AI in lending, the real question is execution under regulation, not experimentation.

In this guide, we break down where it is applied across the lending lifecycle, the risks it introduces, and how real-time, compliant execution turns intelligence into borrower action.

Key Takeaways

Execution Drives Value: Generative AI in lending delivers results only when embedded into regulated credit workflows, not when used as a standalone model layer.

Unstructured Data Is the Constraint: The biggest gains come from turning documents and conversations into structured, action-ready credit signals.

Fraud Has Become Generative: Synthetic identities, deepfakes, and fabricated documents now defeat static KYC and rule-based controls.

Voice Reveals Critical Signals: Live conversations expose urgency, risk, and compliance cues that text-based systems cannot capture.

Architecture Is a Risk Decision: On-prem and hybrid deployments provide the control required for low latency, data residency, and audits.

What Generative AI Means for Modern Lending Operations

In lending, generative AI functions as an execution layer that interprets unstructured borrower signals, orchestrates workflow steps, and produces regulator-ready outputs across origination, underwriting, servicing, and collections. Its value comes from how tightly it is embedded into the lender's operational systems, not from model novelty.

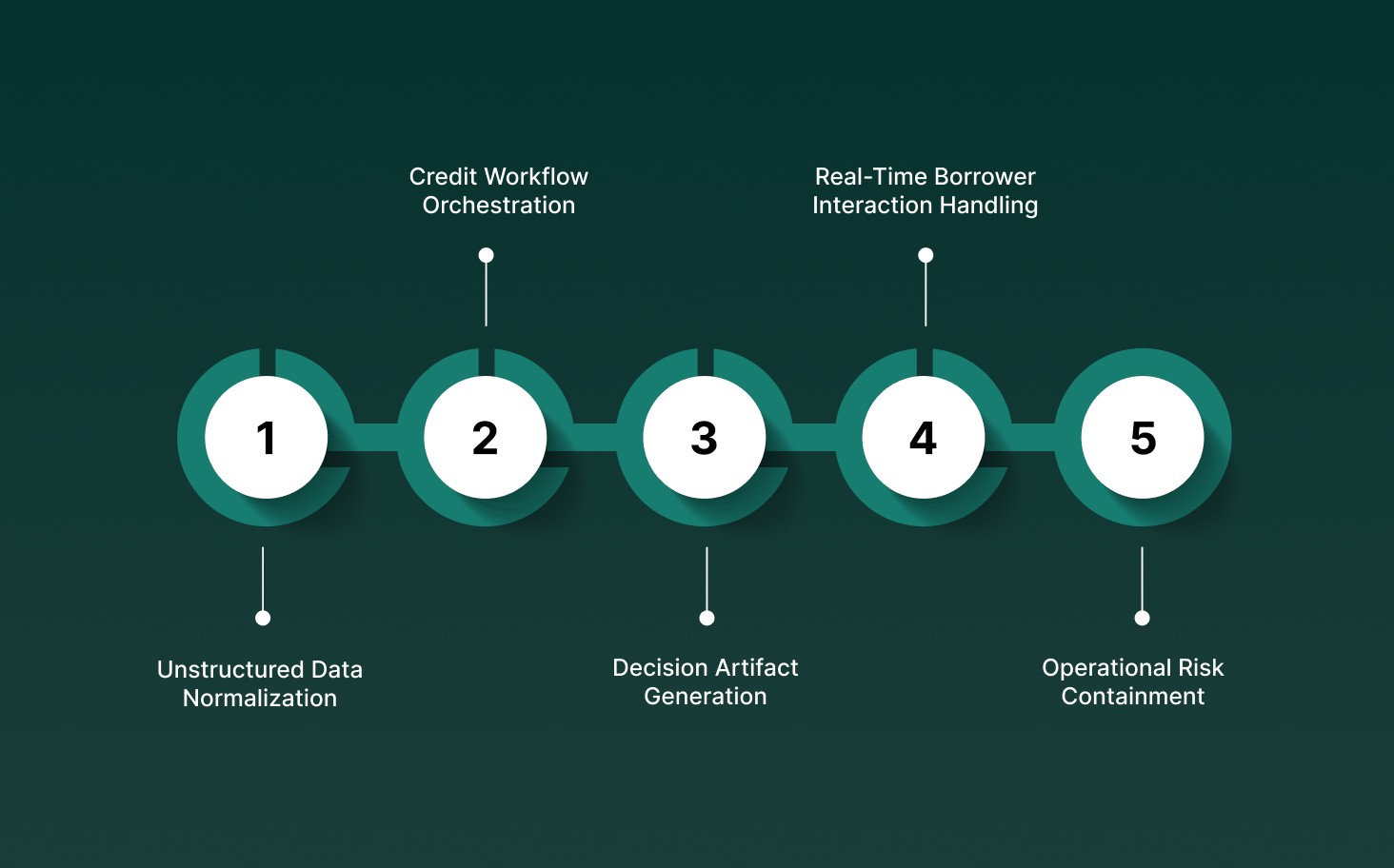

Unstructured Data Normalization: Annual reports, bank statements, call transcripts, emails, and KYC documents are converted into structured lending artifacts such as credit summaries, risk flags, and decision inputs using retrieval-grounded generation and schema-bound outputs.

Credit Workflow Orchestration: Generative systems sequence tasks across intake, verification, underwriting, and servicing, triggering downstream actions such as clarification requests, analyst reviews, or borrower outreach based on confidence thresholds and policy rules.

Decision Artifact Generation: Credit memos, adverse action notices, compliance disclosures, and internal risk notes are produced with traceable source references, allowing auditability without manual document assembly.

Real-Time Borrower Interaction Handling: Lending operations rely on conversational channels for clarifications, follow-ups, and payment discussions. Generative AI interprets intent, detects ambiguity, and routes conversations based on risk tier, delinquency stage, or regulatory sensitivity.

Operational Risk Containment: Guardrails such as deterministic prompts, bounded response formats, and human review checkpoints limit hallucination, policy drift, and inconsistent borrower communication during high-stakes credit interactions.
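To make "schema-bound outputs" and "bounded response formats" concrete, here is a minimal Python sketch of a validator that accepts a model-generated credit summary only if it matches a fixed schema. The field names, the `CreditSummary` type, and the risk-flag vocabulary are hypothetical and chosen for illustration; they are not taken from any specific lending platform.

```python
import json
from dataclasses import dataclass

# Hypothetical flag vocabulary; a real lender would load this from policy config.
ALLOWED_RISK_FLAGS = {"income_mismatch", "missing_guarantor", "adverse_media"}
EXPECTED_FIELDS = {"borrower_id", "annual_income", "risk_flags", "source_refs"}


@dataclass(frozen=True)
class CreditSummary:
    borrower_id: str
    annual_income: float
    risk_flags: tuple
    source_refs: tuple


def parse_credit_summary(raw: str) -> CreditSummary:
    """Accept model output only if it matches the schema exactly."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc
    if not isinstance(data, dict) or set(data) != EXPECTED_FIELDS:
        raise ValueError("output does not match the expected field set")

    borrower_id = data["borrower_id"]
    if not isinstance(borrower_id, str) or not borrower_id:
        raise ValueError("borrower_id must be a non-empty string")

    income = data["annual_income"]
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(income, bool) or not isinstance(income, (int, float)) or income < 0:
        raise ValueError("annual_income must be a non-negative number")

    flags = data["risk_flags"]
    if not isinstance(flags, list) or any(
        not isinstance(f, str) or f not in ALLOWED_RISK_FLAGS for f in flags
    ):
        raise ValueError("risk_flags contains a value outside the allowed set")

    refs = data["source_refs"]
    if not isinstance(refs, list) or not refs or not all(isinstance(r, str) for r in refs):
        raise ValueError("at least one source reference is required")

    return CreditSummary(borrower_id, float(income), tuple(flags), tuple(refs))
```

Rejecting anything outside the schema, rather than trying to repair it, keeps free-form model text from reaching downstream credit systems. A rejected output would typically be retried or routed to a human reviewer.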

See how real-time, governed execution turns credit intelligence into borrower action in How AI Lending Is Transforming the End-to-End Borrower Experience.

Where Generative AI Is Applied Across the Lending Lifecycle

In production environments, generative AI in lending operates as a governed execution layer that interprets unstructured signals, enforces credit policy, and drives borrower actions across origination, underwriting, servicing, and recovery under deterministic controls.

1. Loan Origination and Application Intake

Generative AI in lending automates intake by transforming borrower-provided documents, narratives, and conversations into policy-aligned application states while enforcing completeness, consistency, and jurisdictional rules in near real time.

Multi-Modal Intake Normalization: Parses PDFs, scanned statements, tax filings, voice transcripts, and free-text explanations into structured application objects using retrieval-grounded generation with schema validation at ingestion time.

Real-Time Completeness and Consistency Checks: Cross-verifies income figures, entity names, ownership structures, and disclosures across inputs, blocking downstream progression until inconsistencies or missing artifacts are resolved.

Intent-Driven Routing and Product Matching: Interprets borrower intent and usage narratives to route applications into correct product variants, pricing bands, and risk workflows without manual triage.

Example: A commercial lender deploys generative AI to process uploaded financials and borrower voice clarifications during intake, resolving missing guarantor details before underwriting receives the file.
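The completeness and consistency checks described above can be sketched in a few lines of Python. This is an illustrative outline only: the required fields, the 5% tolerance, and the dictionary shapes are assumptions, not values drawn from a real credit policy.

```python
from dataclasses import dataclass, field

# Hypothetical required fields for a commercial application.
REQUIRED_FIELDS = ("legal_name", "annual_income", "guarantor")


@dataclass
class IntakeResult:
    can_proceed: bool
    issues: list = field(default_factory=list)


def check_intake(application: dict, documents: dict, tolerance: float = 0.05) -> IntakeResult:
    """Block progression until required fields exist and income figures agree."""
    issues = []

    # Completeness: every required field must be present and non-empty.
    for name in REQUIRED_FIELDS:
        if not application.get(name):
            issues.append(f"missing:{name}")

    # Consistency: income extracted from each document must be within
    # `tolerance` (as a fraction) of the income stated on the application.
    stated = application.get("annual_income")
    if stated:
        for doc_name, doc in documents.items():
            doc_income = doc.get("annual_income")
            if doc_income is None:
                continue
            if abs(doc_income - stated) > tolerance * stated:
                issues.append(f"income_mismatch:{doc_name}")

    return IntakeResult(can_proceed=not issues, issues=issues)
```

In this sketch an application with no guarantor, or with a tax return that disagrees with the stated income, returns `can_proceed=False` along with the specific issues, so a clarification request can be raised before underwriting sees the file.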

2. Underwriting and Credit Assessment

Generative AI in lending supports underwriting by producing review-ready credit artifacts from heterogeneous data sources while preserving explainability, confidence scoring, and analyst control.

Credit Memo Assembly With Source Traceability: Generates draft credit memo sections that reference audited financials, transaction histories, and external datasets, and embeds citations and confidence levels for analyst verification.

Cross-Source Risk Signal Correlation: Synthesizes financial ratios, cash flow volatility, industry benchmarks, adverse media, and behavioral indicators into consolidated risk summaries aligned with underwriting policy thresholds.

Deterministic Human-in-the-Loop Controls: Enforces mandatory analyst review when confidence scores fall below defined thresholds or when model outputs conflict with historical exposure patterns.

Example: An enterprise bank reduces underwriting preparation time by auto-drafting credit memos while requiring human approval for covenant structure and exposure sizing.
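A confidence gate of the kind described under "Deterministic Human-in-the-Loop Controls" can be expressed as a small, fully deterministic routing function. The sketch below is in Python; the 0.85 threshold and the `Route` names are placeholders, since real thresholds would come from the lender's underwriting policy.

```python
from enum import Enum


class Route(Enum):
    AUTO_DRAFT = "auto_draft"
    ANALYST_REVIEW = "analyst_review"


def route_memo_section(
    confidence: float,
    conflicts_with_history: bool,
    threshold: float = 0.85,  # illustrative value, not a recommended setting
) -> Route:
    """Send a generated memo section to an analyst unless it clears both checks."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if conflicts_with_history or confidence < threshold:
        return Route.ANALYST_REVIEW
    return Route.AUTO_DRAFT
```

Keeping the gate outside the model means the same inputs always produce the same routing decision, which is what makes the control auditable.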

3. Portfolio Monitoring and Early Warning

Generative AI in lending enables continuous credit surveillance by detecting weak signals across borrower behavior, communications, and external events that precede measurable credit deterioration.

Behavioral Drift Detection at Signal Level: Identifies deviations in payment cadence, transaction volume, and borrower communication tone that exceed historical baselines and risk tolerances.

Event-Driven Exposure Reassessment: Correlates earnings releases, litigation, sector disruptions, and macro signals to active portfolios, summarizing borrower-specific impact scenarios.

Actionable Intervention Orchestration: Produces prioritized intervention queues with recommended outreach, covenant reviews, or restructuring assessments tied to risk severity.

Example: A mid-market lender flags borrowers exposed to sector-specific shocks and initiates proactive engagement before contractual defaults occur.
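One simple way to operationalize "deviations that exceed historical baselines" is a z-score against the borrower's own history. The Python sketch below uses that technique as a stand-in; the article does not specify a detection method, and the limit of 3 standard deviations is an assumed risk tolerance.

```python
from statistics import mean, stdev


def drift_score(history, current):
    """Return how many standard deviations `current` sits from the baseline."""
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is treated as maximal drift.
        return 0.0 if current == history[0] else float("inf")
    return abs(current - mean(history)) / sigma


def flag_drift(history, current, max_z=3.0):
    """Flag the observation when it exceeds the configured tolerance."""
    return drift_score(history, current) > max_z
```

For example, `history` could hold the number of days late for each of the last six payments and `current` the latest payment. The same function applies to transaction volume or any other numeric signal with a stable baseline.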

4. Loan Servicing and Account Management

Generative AI in lending manages servicing interactions by resolving borrower requests, enforcing disclosure consistency, and maintaining auditable records of interactions across all communication channels.

Context-Preserving Interaction Resolution: Handles borrower inquiries using full account history, prior communications, and servicing status without forcing agents or borrowers to re-establish context.

Regulatory Disclosure Enforcement: Ensures required disclosures are delivered verbatim and at the correct interaction points during modifications, forbearance requests, or disputes.

Structured Interaction Logging: Generates reviewable summaries and transcripts aligned to internal audit, complaint handling, and regulatory review standards.

Example: A retail lender automates hardship requests while preserving regulator-ready servicing logs for post-interaction audits.
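Verbatim disclosure enforcement can be checked mechanically before a servicing workflow is allowed to advance. The Python sketch below assumes a hypothetical mapping from servicing events to required wording; the disclosure sentence shown is placeholder text, not actual regulatory language.

```python
# Placeholder wording for illustration only; real disclosures come from compliance.
REQUIRED_DISCLOSURES = {
    "forbearance_request": "Interest may continue to accrue during the forbearance period.",
}


def normalize(text):
    """Collapse whitespace so line breaks in a transcript do not cause false failures."""
    return " ".join(text.split())


def disclosure_delivered(event, agent_turns):
    """Return True if the required wording for `event` appears verbatim in any agent turn."""
    required = REQUIRED_DISCLOSURES.get(event)
    if required is None:
        return True  # no disclosure is configured for this event
    needle = normalize(required)
    return any(needle in normalize(turn) for turn in agent_turns)
```

Only whitespace is normalized here. Paraphrases deliberately fail the check, because the requirement is verbatim delivery.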

5. Collections and Recovery

Generative AI in lending executes compliant, stage-aware borrower engagement during delinquency while maintaining strict control over tone, disclosures, and escalation boundaries.

Delinquency-Stage Controlled Communication: Dynamically adjusts messaging, disclosures, and escalation paths based on delinquency age, jurisdiction, and borrower risk classification.

Policy-Bound Resolution Generation: Produces repayment plans and settlement options constrained by affordability models, internal policies, and regulatory requirements.

Fail-Safe Escalation and Consent Controls: Enforces call timing rules, opt-out preferences, and immediate human escalation for disputes, vulnerability indicators, or legal thresholds.

Example: A collections operation automates early-stage delinquency outreach while escalating complex cases to human agents with full interaction context.
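The fail-safe controls above reduce to a small decision function that runs before any automated outreach. The following Python sketch is illustrative: the 08:00 to 21:00 window in the usage note is an example configuration, permitted hours vary by jurisdiction, and the ordering of checks is an assumption rather than a stated rule.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class ContactPolicy:
    earliest: time  # first permitted contact time, borrower-local
    latest: time    # contact must start before this time, borrower-local


def outreach_decision(local_time, opted_out, dispute_open, vulnerable, policy):
    """Decide what an automated collections agent may do right now."""
    # Disputes and vulnerability indicators always go to a human agent.
    if dispute_open or vulnerable:
        return "escalate_to_human"
    # Opt-out preferences suppress automated contact entirely.
    if opted_out:
        return "suppress"
    # Outside the permitted window, defer rather than contact.
    if not (policy.earliest <= local_time < policy.latest):
        return "defer"
    return "contact"
```

With `ContactPolicy(time(8, 0), time(21, 0))`, a call attempted at 22:00 borrower-local time is deferred, and an open dispute escalates regardless of the hour.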

With Smallest.ai, lenders operationalize generative AI at conversation speed, preserving deterministic workflows, regulatory discipline, and full interaction traceability.

Fraud, Deepfakes, and Synthetic Identities in AI-Driven Lending

As generative AI in lending expands borrower automation, it also enables high-fidelity impersonation, synthetic borrower creation, and document fabrication that bypass legacy KYC, AML, and fraud controls.

Synthetic Identity Construction: Fraud rings assemble statistically valid borrower profiles by combining real SSNs, fabricated employment histories, synthetic transaction trails, and AI-generated narratives that pass rule-based credit and KYC checks.

Voice Deepfake Account Takeover: Low-latency voice cloning allows attackers to impersonate borrowers during servicing or hardship calls, defeating knowledge-based authentication through natural conversational cues and consistent vocal patterns.

Document Fabrication at Scale: Generative models produce bank statements, tax returns, and pay stubs with internally consistent figures, metadata, and formatting that evade optical and template-based validation systems.

Behavioral Camouflage Attacks: Adversaries train models to mimic legitimate borrower communication cadence, sentiment, and repayment behavior during early account stages, delaying fraud detection until exposure increases.

Model Feedback Loop Exploitation: Attackers probe AI-driven lending systems iteratively, refining synthetic inputs based on acceptance signals, effectively reverse-engineering underwriting and verification thresholds.

In AI-driven lending, fraud prevention depends on layered identity verification, behavioral analysis, and real-time human escalation. Static controls fail against adaptive, generative adversaries.

Generative AI Without Real-Time Voice Creates Operational Blind Spots

In lending operations, generative AI systems that rely only on text miss time-sensitive borrower signals, escalation cues, and compliance risks that surface primarily during live voice interactions.

| Blind Spot Area | What Breaks Without Real-Time Voice | Operational Impact in Lending |
| --- | --- | --- |
| Latency Management | Text workflows operate asynchronously, delaying borrower clarifications, consent capture, and hardship identification. | Higher application drop-offs, missed regulatory timelines, and delayed risk resolution. |
| Risk Signal Detection | Tone, hesitation, stress, confusion, and coercion signals are not observable in text-only channels. | Early distress and fraud indicators go undetected until delinquency or default surfaces. |
| Identity Assurance | Static credentials replace live voice verification, allowing sustained impersonation and synthetic identity persistence. | Increased account takeovers and servicing-stage fraud exposure. |
| Compliance Enforcement | Disclosures, acknowledgements, and opt-outs cannot be enforced at precise conversational checkpoints. | Inconsistent compliance execution and weak audit defensibility. |
| Human Escalation Logic | Text systems lack urgency signals to trigger immediate agent intervention. | Delayed escalation during disputes, vulnerability disclosures, or legal threshold events. |

See how lenders operationalize AI-driven risk signals across underwriting and monitoring in How AI Credit Risk Assessment Is Transforming Risk Management.

Balancing Automation, Compliance, and Human Oversight

In lending, generative AI must operate within enforceable policy boundaries where automation accelerates execution, compliance remains deterministic, and human judgment governs irreversible credit outcomes.

Deterministic Policy Enforcement: Automated lending actions are constrained by rule-bound workflows that lock disclosures, eligibility logic, and escalation paths, preventing model drift during regulated borrower interactions.

Confidence-Gated Decisioning: Generative outputs include confidence scoring and contradiction detection, forcing human review when uncertainty, data conflicts, or exposure thresholds exceed predefined risk tolerances.

Jurisdiction-Aware Workflow Branching: Compliance logic dynamically adjusts disclosures, communication timing, and permissible actions based on borrower location, loan type, and applicable regulatory regimes.

Human-in-the-Loop Escalation Design: High-impact events such as disputes, hardship declarations, or covenant breaches trigger immediate agent takeover with full conversational and decision context preserved.

End-to-End Audit Traceability: Every automated action, generated artifact, and borrower interaction is logged with timestamps, source references, and policy justifications for regulatory examination.

Effective generative AI in lending balances speed with control. Automation executes defined paths, compliance enforces boundaries, and human oversight intervenes precisely where risk becomes irreversible.
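As a rough illustration of end-to-end audit traceability, the Python sketch below logs each automated action with a timestamp, source references, and a policy identifier. Chaining entries by hash for tamper evidence is an addition made here for illustration; the article does not prescribe it, and the field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(body):
    """Hash the entry body with a stable key order."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_audit_entry(log, action, source_refs, policy_id, timestamp=None):
    """Append an entry that commits to the previous entry's hash."""
    body = {
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "action": action,
        "source_refs": list(source_refs),
        "policy_id": policy_id,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log):
    """Return False if any entry was altered or reordered after being written."""
    prev = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True
```

Because each entry includes the hash of the one before it, editing an earlier record invalidates every later one, which gives reviewers a cheap integrity check during examination.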

Operational Readiness Checklist for Lenders Adopting Generative AI

Operational readiness for generative AI in lending depends on production-grade controls across data, workflows, infrastructure, and governance, not pilot success or model accuracy in isolation.

| Readiness Area | What Must Be In Place | Why It Matters |
| --- | --- | --- |
| Data Lineage & Grounding | Approved data sources, version control, freshness validation, and retrieval constraints for all generated outputs. | Prevents unsupported inferences and strengthens audit defensibility. |
| Workflow Boundaries | Explicit entry conditions, exit criteria, and no-action states for every AI-driven lending step. | Stops unauthorized progression across regulated credit stages. |
| Latency & Throughput | Defined response-time SLAs, peak-load testing, and fallback behavior when inference exceeds thresholds. | Protects borrower experience and time-bound compliance obligations. |
| Policy vs Model Separation | Credit rules, disclosures, and regulations are enforced outside the model layer. | Allows policy updates without retraining or redeployment risk. |
| Human Escalation Controls | Clear triggers, override authority, and accountability ownership for high-risk scenarios. | Ensures human judgment governs irreversible credit outcomes. |
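The "fallback behavior when inference exceeds thresholds" item in the checklist can be sketched with a timeout around the inference call. This Python example uses `asyncio.wait_for` from the standard library; the `infer` callable and the fallback text are hypothetical stand-ins for a real model client and an approved holding message.

```python
import asyncio


async def respond_with_fallback(infer, prompt, budget_s, fallback):
    """Return the model's answer, or a pre-approved fallback if the latency budget is exceeded."""
    try:
        # wait_for cancels the inference task once the budget elapses.
        return await asyncio.wait_for(infer(prompt), timeout=budget_s)
    except asyncio.TimeoutError:
        return fallback


async def slow_model(prompt):
    """Stand-in for an inference call that takes too long."""
    await asyncio.sleep(0.2)
    return "model answer"


if __name__ == "__main__":
    reply = asyncio.run(
        respond_with_fallback(slow_model, "What is my balance?", 0.05, "One moment, please.")
    )
    print(reply)
```

The fallback is a fixed string rather than a second model call, so the response under load stays predictable and reviewable.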

See how forward-looking risk signals support faster, more controlled credit execution in 9 Types of Predictive Analytics in Banking Used by Top Teams.

Why On-Prem and Hybrid Deployment Matter in Lending AI

In regulated lending environments, on-prem and hybrid deployment architectures provide deterministic control over data, latency, and compliance boundaries that cloud-only AI deployments cannot guarantee.

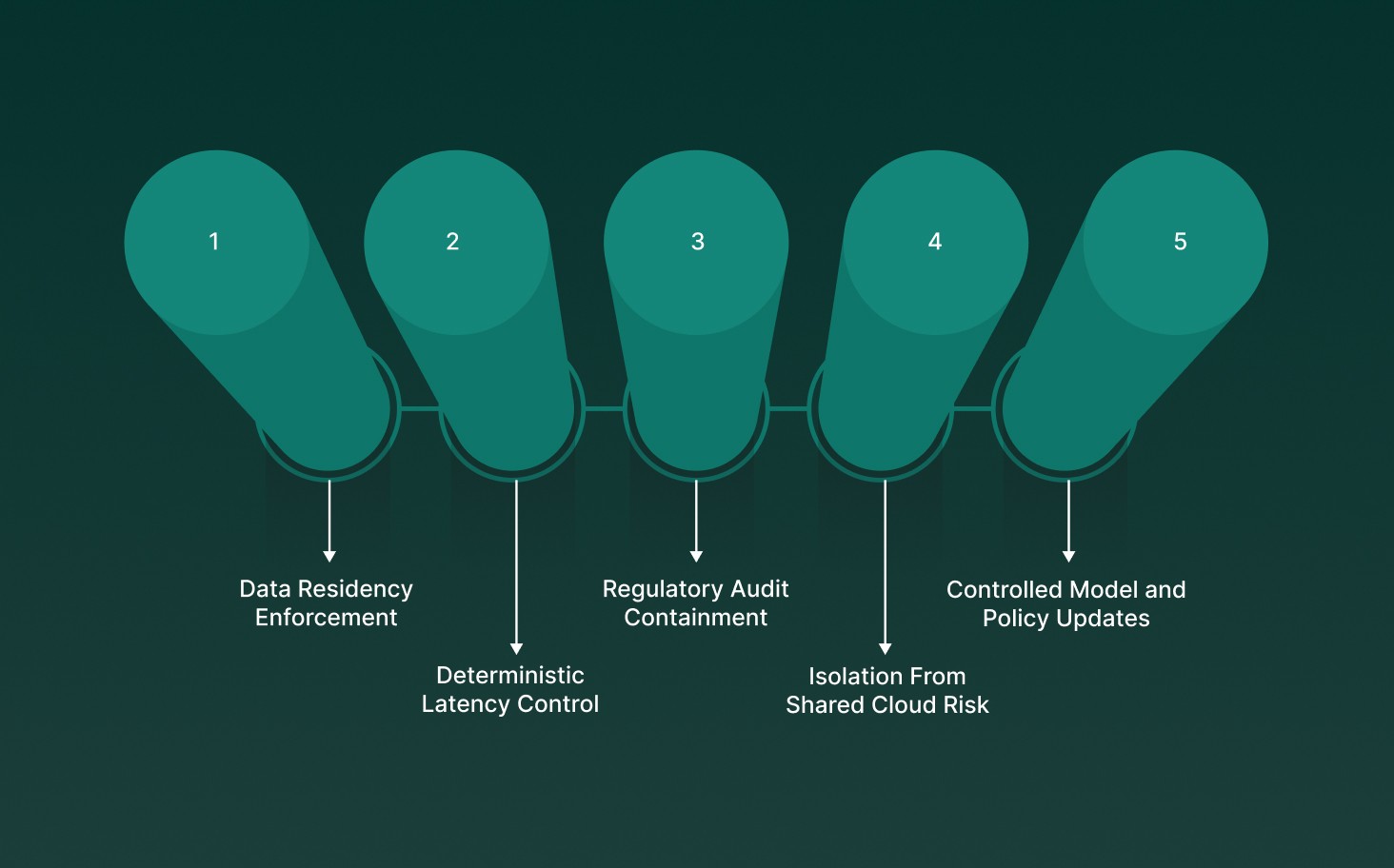

Data Residency Enforcement: Sensitive borrower data, call recordings, and credit artifacts remain within approved jurisdictions and security zones, satisfying regional banking, privacy, and supervisory data-localization requirements.

Deterministic Latency Control: Local inference removes the wide-area network hop and its variability, supporting the sub-100ms response times required for real-time borrower conversations, authentication, and consent checkpoints.

Regulatory Audit Containment: Logs, models, and interaction artifacts are retained within bank-controlled infrastructure, simplifying regulator access, forensic review, and chain-of-custody validation.

Isolation From Shared Cloud Risk: On-prem deployments remove exposure to cross-tenant leakage and shared-model contamination, and reduce dependency on third-party update cycles that may alter model behavior unexpectedly.

Controlled Model and Policy Updates: Hybrid architectures allow institutions to stage, validate, and roll out model or policy changes without disrupting live lending operations or breaching compliance controls.

For generative AI in lending, deployment architecture is a risk decision, not an IT preference. On-prem and hybrid models deliver the control required for regulated, real-time credit execution.

How Smallest.ai Fits Into Generative AI in Lending Execution

Smallest.ai operates as a real-time voice execution layer for generative AI in lending, translating credit intelligence into controlled borrower conversations under strict latency, compliance, and audit constraints.

Real-Time Voice Inference Layer: Sub-100ms speech generation and understanding support live borrower interactions across origination, servicing, and collections without conversational lag or state loss during credit-critical moments.

Policy-Bound Conversational Control: Voice agents operate within deterministic flow graphs in which disclosures, prompts, and escalation paths are bound to lending SOPs and jurisdiction-specific regulatory requirements.

Conversation-to-System Orchestration: Live calls trigger downstream system actions, such as CRM updates, case creation, payment plan initiation, or analyst review requests, via event-driven integrations.

Audit-Ready Interaction Artifacts: Every call produces structured transcripts, intent labels, timestamps, and action logs aligned with internal audit, complaint handling, and regulatory review needs.

Smallest.ai fits into generative AI in lending by executing decisions through real-time, governed voice interactions. It connects models to outcomes while preserving control, traceability, and regulatory discipline.

Final Thoughts!

Generative AI in lending has reached a point where outcomes matter more than ambition. The differentiator is not insight generation but the ability to act inside regulated workflows, at the speed borrowers expect, with controls that risk teams can defend. Lenders that treat AI as infrastructure, not experimentation, are the ones converting intelligence into durable credit performance.

This is where execution through real-time voice becomes decisive. Smallest.ai allows lenders to operationalize generative AI through low-latency, policy-bound voice interactions that connect decisions to borrower action without losing audit control.

If you are ready to move from models to measurable execution, talk to our team and see how Smallest.ai fits into your lending stack.
