Learn how generative AI fraud detection strengthens risk control with richer signals, synthetic scenarios, and real-time insights for high-risk teams.

Akshat Mandloi

Updated on January 19, 2026 at 8:38 AM

Fraud teams in banking, payments, lending, and service-heavy operations face rising pressure as call volumes grow and attackers shift tactics quickly. Leaders running voice AI, conversational AI, voice agents, and voice cloning know that many high-risk signals surface during live calls, not after the transaction. When teams rely on shallow logs or delayed reviews, fraud slips through small gaps that widen at scale.

Global demand for stronger systems reflects this shift. The AI in fraud detection market size is forecast to increase by USD 26.5 billion at a CAGR of 21.6% between 2024 and 2029, driven by the need for models that work with richer behavioral and timing cues drawn from real conversations.

Enterprises strengthening generative AI fraud detection gain clearer signals earlier in the caller lifecycle, especially when voice patterns feed their models in real time. Those earlier signals create a stronger base for more advanced detection workflows.

In this guide, you will see how these gains turn fragmented fraud workflows into high-clarity, high-confidence systems built for real operational pressure.

Key Takeaways

Real-time behavioral signals change fraud scoring: Voice patterns such as pacing swings, hesitation shifts, and scripted phrasing reveal early-risk cues that text logs fail to capture.

Synthetic data solves extreme fraud imbalance: GAN-driven scenarios expand rare fraud cases beyond the 0.1–0.17 percent baseline, raising model recall without exposing customer data.

Concept drift arrives within days, not months: Adaptive systems update scoring as fraud tactics shift, preventing model decay that traditional retraining cycles cannot keep up with.

Graph neural networks (GNNs) expose coordinated fraud rings: Network-level mapping uncovers multi-account links across devices, IPs, and merchants, surfacing schemes hidden inside clean individual transactions.

Behavioral biometrics cut false alarms sharply: Signals such as cursor movement, tap cadence, and typing rhythm reduce false positives while preserving true fraud detection accuracy.

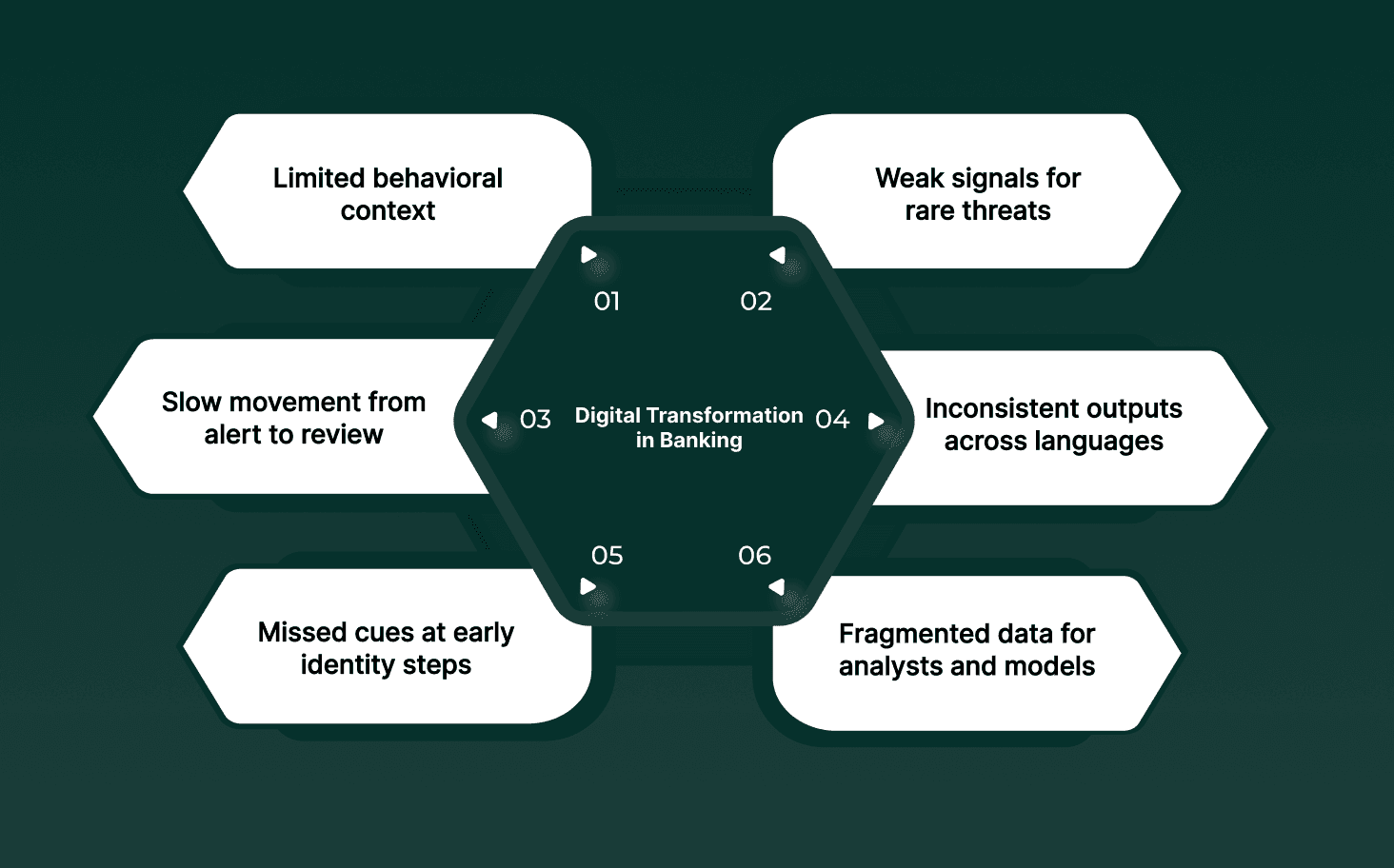

Where Teams Struggle When Fraud Detection Lacks Generative AI

Fraud shifts faster than most detection stacks can adapt. When signals remain shallow, teams miss the subtle cues that reveal early risk. This limits the foundation needed for strong generative AI fraud detection in later stages, since the upstream data stays thin and uneven.

Limited behavioral context: Voice pacing swings, hesitation points, or scripted phrasing patterns remain invisible in text-only logs.

Weak signals for rare threats: Sparse examples of uncommon fraud types prevent models from learning how these cases typically unfold.

Slow movement from alert to review: Analysts depend on delayed call summaries, which reduces the accuracy of risk scoring during live interactions.

Inconsistent outputs across languages: Accent shifts or bilingual callers produce uneven data, leaving gaps in multilingual risk scoring.

Missed cues at early identity steps: Crucial indicators at account access or verification stages remain uncaptured, lowering downstream accuracy.

Fragmented data for analysts and models: Teams work with logs that lack timing cues, tonal patterns, or context transitions, which weakens overall fraud control.

A setup like this leaves analysts reacting to fraud rather than shaping stronger detection signals at the source, which is exactly where generative models gain their strength once the right inputs exist.
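To make "behavioral context" concrete: if a speech-to-text step already returns word-level timestamps, a few timing cues can be computed directly from them. The sketch below is illustrative only. The `Word` record, the filler-word list, the 0.7-second pause threshold, and the five-word pacing window are all assumptions, not part of any specific product.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the call
    end: float

FILLERS = {"um", "uh", "erm", "hmm"}  # assumed hesitation markers (hypothetical list)

def timing_features(words: list[Word], pause_s: float = 0.7, window: int = 5) -> dict[str, float]:
    """Summarize pacing and hesitation cues from a word-timestamped transcript."""
    if len(words) < 2:
        raise ValueError("need at least two words")
    duration = words[-1].end - words[0].start
    gaps = [b.start - a.end for a, b in zip(words, words[1:])]
    # Speaking rate (words/sec) per fixed-size window; a wide spread suggests pacing swings.
    rates = []
    for i in range(0, len(words) - window + 1, window):
        chunk = words[i:i + window]
        span = chunk[-1].end - chunk[0].start
        if span > 0:
            rates.append(len(chunk) / span)
    pace_cv = pstdev(rates) / mean(rates) if len(rates) > 1 else 0.0
    return {
        "words_per_sec": len(words) / duration,
        "long_pauses": float(sum(g >= pause_s for g in gaps)),
        "filler_ratio": sum(w.text.lower() in FILLERS for w in words) / len(words),
        "pace_cv": pace_cv,  # coefficient of variation of speaking rate
    }
```

Features like these are what a text-only log drops; a downstream fraud model would consume them alongside transaction and account data.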

If you want to see how voice AI shapes risk control and customer interactions across regulated BFSI teams, check out the Top AI voice agents for BFSI (Banking, Financial Services, and Insurance) in 2025.

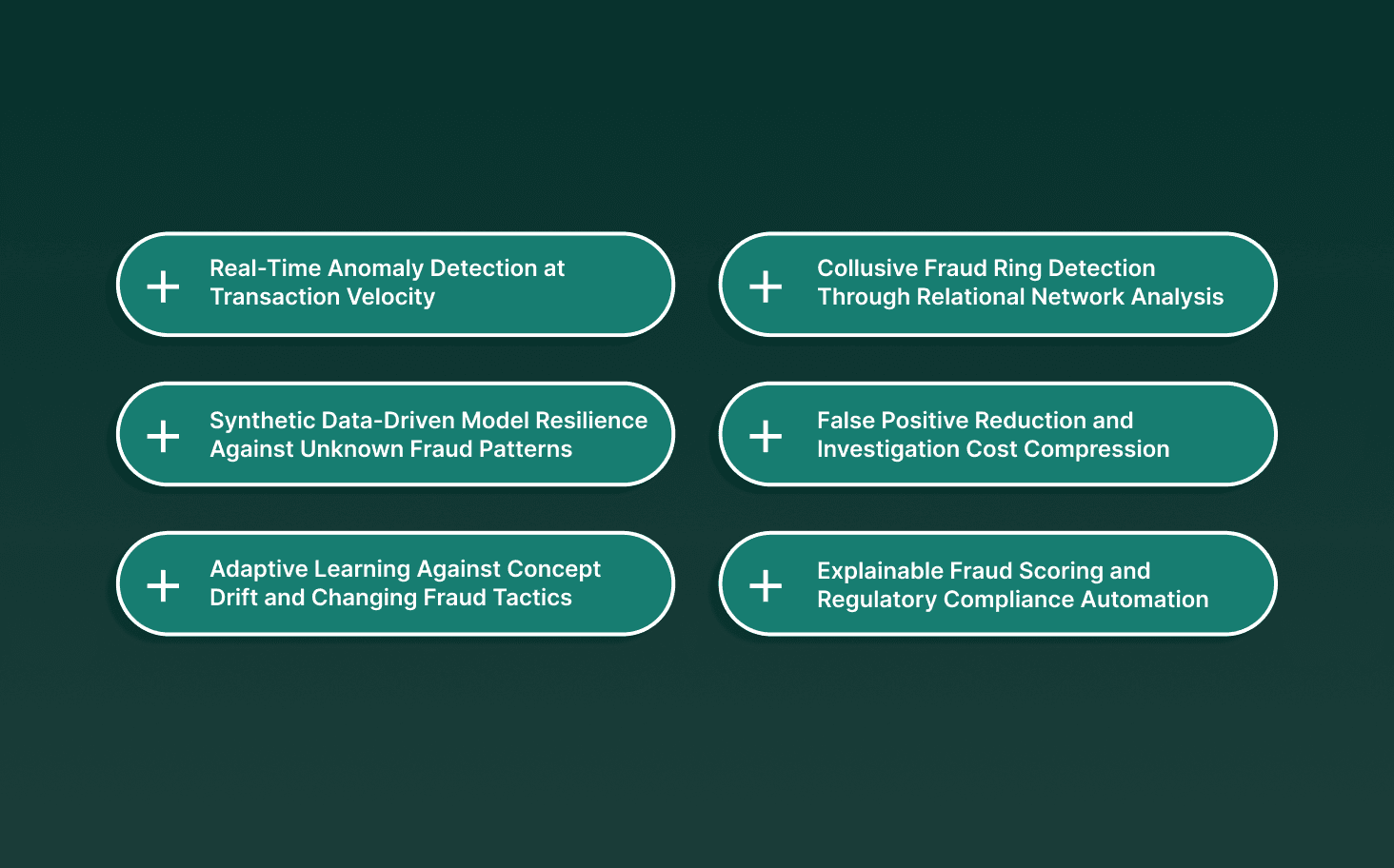

Key Gains Enterprises See With Generative AI in Fraud Control

1. Real-Time Anomaly Detection at Transaction Velocity

Generative AI systems score transaction streams within milliseconds across hundreds of attributes without slowing payment flow. Pipelines at Visa's scale handle high-volume activity under production latency constraints, allowing live pattern checks across device, location, and transaction-sequence behavior.

Millisecond-scale pattern matching: Evaluates hundreds of attributes per transaction to catch behavioral deviations before settlement.

Behavior velocity analysis: Identifies irregular rhythms, device shifts, and impossible travel paths within active streams.

Automated step-up response: Triggers MFA or an account freeze as soon as risk thresholds are crossed.
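A minimal sketch of the velocity checks and step-up logic described above, assuming each transaction carries a timestamp, coordinates, and a device ID. The `Txn` fields, the 900 km/h travel ceiling, the 0.7/0.3 weights, and the MFA and freeze thresholds are hypothetical; in production these signals would more likely be features fed to a model than hand-set rules.

```python
import math
from dataclasses import dataclass

@dataclass
class Txn:
    account: str
    ts: float   # Unix seconds
    lat: float
    lon: float
    device: str

EARTH_RADIUS_KM = 6371.0

def haversine_km(a: Txn, b: Txn) -> float:
    """Great-circle distance between two transaction locations."""
    p1, p2 = math.radians(a.lat), math.radians(b.lat)
    dp = p2 - p1
    dl = math.radians(b.lon - a.lon)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def velocity_risk(prev: Txn, cur: Txn, max_kmh: float = 900.0) -> float:
    """0-1 risk from implied travel speed and device change (hypothetical weights)."""
    hours = max((cur.ts - prev.ts) / 3600, 1e-6)  # avoid division by zero
    speed = haversine_km(prev, cur) / hours
    score = 0.0
    if speed > max_kmh:  # faster than a commercial flight: impossible travel
        score += 0.7
    if cur.device != prev.device:
        score += 0.3
    return min(score, 1.0)

def step_up_action(score: float, mfa_at: float = 0.5, freeze_at: float = 0.9) -> str:
    """Map a risk score to an automated response (hypothetical thresholds)."""
    if score >= freeze_at:
        return "freeze"
    if score >= mfa_at:
        return "mfa"
    return "allow"
```

For example, a London purchase followed 30 minutes later by a New York purchase on a new device implies a speed above 10,000 km/h and maps to `"freeze"`; the same pair on the original device maps to `"mfa"`.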

2. Synthetic Data-Driven Model Resilience Against Unknown Fraud Patterns

GAN-based synthetic data expands rare fraud examples beyond historical limits. This strengthens model recall in environments where real fraud data is highly imbalanced and sensitive to privacy rules.

Synthetic anomaly expansion: Creates edge-case scenarios that overcome extreme dataset imbalance.

Privacy-preserved training: Synthetic transactions retain statistical shape while removing customer identifiers.

Fraud-ring scenario generation: Builds multi-account patterns that rule-based systems fail to surface.
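GAN training is too heavy for a short example, so the sketch below swaps in a simpler, well-known oversampling technique, SMOTE-style interpolation: each synthetic fraud row lies on the segment between a real fraud row and one of its nearest fraud neighbors. It illustrates the rebalancing idea only. Unlike a trained generator, it cannot produce patterns outside the span of existing cases, and the function name and parameters are assumptions.

```python
import random

def smote_like(minority: list[list[float]], n_new: int, k: int = 3, seed: int = 0) -> list[list[float]]:
    """Generate n_new synthetic minority (fraud) rows by interpolating toward near neighbors."""
    if len(minority) < 2:
        raise ValueError("need at least two minority examples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    def dist2(a: list[float], b: list[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [row for j, row in enumerate(minority) if j != i]
        # k nearest fraud neighbors of the chosen fraud row (squared Euclidean distance)
        neighbors = sorted(others, key=lambda row: dist2(base, row))[:k]
        nb = rng.choice(neighbors)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([x + gap * (y - x) for x, y in zip(base, nb)])
    return synthetic
```

As an illustration, a training split with 17 fraud rows in 10,000 (the 0.17 percent baseline) could be topped up with `smote_like(fraud_rows, n_new=983)` to bring the fraud share to about 9%. Oversampling is normally applied to the training split only, so evaluation still reflects the real class balance.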

3. Adaptive Learning Against Concept Drift and Changing Fraud Tactics

Generative pipelines detect shifts in attacker behavior and retrain models continuously. This prevents fast model decay and avoids the long lag tied to manual refresh cycles.

Continuous drift monitoring: Identifies shifts such as transitions from card fraud to account takeover attempts.

Predictive threat modeling: Studies communication cues and behavioral anomalies to flag intent before execution.

Automated threshold adjustment: Adapts scoring baselines as volumes and tactics shift.
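Drift monitoring and threshold adjustment can be sketched with two standard tools: the Population Stability Index (PSI), which compares the distribution of recent model scores against a baseline, and a quantile cutoff that holds the alert rate steady as volumes shift. The function names, the ten equal-width bins, and the 1% default alert rate are assumptions. A PSI above roughly 0.25 is a common rule of thumb for significant drift, not a fixed standard.

```python
import math

def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of scores in [0, 1]."""
    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for s in sample:
            counts[min(int(s * bins), bins - 1)] += 1  # a score of 1.0 goes in the top bin
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(sample), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def alert_threshold(recent_scores: list[float], alert_rate: float = 0.01) -> float:
    """Score cutoff that flags roughly `alert_rate` of recent traffic."""
    ranked = sorted(recent_scores, reverse=True)
    k = max(1, int(len(ranked) * alert_rate))
    return ranked[k - 1]
```

A monitoring job could compute `psi(baseline_scores, last_week_scores)` on a schedule, trigger retraining when it crosses the chosen limit, and call `alert_threshold` in the meantime so alert volumes stay manageable.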

4. Collusive Fraud Ring Detection Through Relational Network Analysis

GNNs combined with generative patterns capture relationships across accounts, devices, merchants, and IP nodes. This reveals coordinated schemes hidden inside otherwise clean transactions.

Hidden network dependencies: Maps links between accounts, devices, and IPs to surface organized activity.

Cross-region link analysis: Connects fraud signals across countries and financial entities in one pass.

Synthetic identity spotting: Detects new-account clusters through network shape rather than single-transaction traits.
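The sketch below is not a GNN. It shows the relational structure a GNN would learn over: accounts linked through shared devices or IPs, grouped with a union-find (connected components) pass. Clusters with several accounts behind the same entities become candidates for ring review. The `(account, entity)` link format and the three-account minimum are hypothetical.

```python
from collections import defaultdict

class UnionFind:
    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[ra] = rb

def suspicious_clusters(links: list[tuple[str, str]], min_accounts: int = 3) -> list[set[str]]:
    """links: (account_id, shared_entity) pairs, e.g. a device fingerprint or IP.
    Returns groups of accounts connected through any chain of shared entities."""
    uf = UnionFind()
    for account, entity in links:
        # Prefixes keep account IDs and entity IDs in separate namespaces.
        uf.union("acct:" + account, "ent:" + entity)
    groups: dict[str, set[str]] = defaultdict(set)
    for account, _ in links:
        groups[uf.find("acct:" + account)].add(account)
    return [g for g in groups.values() if len(g) >= min_accounts]
```

Note that `a1` and `a3` can land in the same cluster without sharing anything directly, as long as `a2` links them; this transitive linking is what single-transaction checks miss.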

5. False Positive Reduction and Investigation Cost Compression

Generative systems trained with balanced precision-recall metrics reduce excessive alerts while preserving true detection accuracy. Behavioral biometrics add low-friction signals that fraud actors struggle to mimic.

Behavioral biometric layering: Uses cursor movement, tap cadence, and typing rhythm for stronger signals.

Precision-recall optimization: Cuts false positives while keeping strong catch rates.

Investigation automation: Directs high-confidence alerts to automated actions and limits human review to borderline cases.
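Precision-recall tuning often comes down to choosing the score cutoff that reaches a target precision while flagging as many true fraud cases as possible, then routing by confidence. A minimal sketch, assuming historical scores with analyst-confirmed labels (1 = fraud); the function names and the two routing thresholds are illustrative.

```python
from typing import Optional

def threshold_for_precision(scores: list[float], labels: list[int], target: float) -> Optional[float]:
    """Lowest cutoff whose flagged set (score >= cutoff) meets the target precision.
    Among qualifying cutoffs, the lowest one flags the most fraud, i.e. maximizes recall."""
    pairs = sorted(zip(scores, labels), reverse=True)
    tp = fp = 0
    best = None
    for i, (score, label) in enumerate(pairs):
        tp += label
        fp += 1 - label
        # Tied scores are flagged together, so only evaluate at the last row of a tie.
        if i + 1 < len(pairs) and pairs[i + 1][0] == score:
            continue
        if tp / (tp + fp) >= target:
            best = score
    return best

def route(score: float, auto_at: float, review_at: float) -> str:
    """High-confidence alerts go to automated action; borderline ones to analysts."""
    if score >= auto_at:
        return "auto_action"
    if score >= review_at:
        return "analyst_review"
    return "approve"
```

One reasonable design is to derive `auto_at` from a very high precision target and `review_at` from a lower one, so human review is limited to the band in between.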

6. Explainable Fraud Scoring and Regulatory Compliance Automation

LLM-powered scoring produces auditable narratives tied to transaction attributes and network cues. This supports AML, KYC, and emerging AI governance requirements by clarifying why each flag occurred.

LLM reasoning narratives: Generates clear explanations linked to behavior and transaction features.

Feature attribution ranking: Highlights factors that influenced the score, improving transparency.

Compliance-ready audit trails: Creates immutable logs tying each flag to specific model signals.
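For a linear risk score, feature attribution is exact: each feature contributes its weight times its distance from a baseline value. The sketch below ranks those contributions, turns the top ones into a plain-text explanation (a template stand-in for the LLM narrative step, which is not shown), and appends each decision to a hash-chained log so later edits are detectable. The weights, feature names, and record layout are hypothetical.

```python
import hashlib
import json

def attributions(weights: dict[str, float], x: dict[str, float],
                 baseline: dict[str, float]) -> list[tuple[str, float]]:
    """Contribution of each feature to a linear score: weight * (value - baseline), largest magnitude first."""
    contrib = {f: w * (x[f] - baseline[f]) for f, w in weights.items()}
    return sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True)

def explain(top: list[tuple[str, float]], n: int = 2) -> str:
    """Template explanation; an LLM could rephrase this, but that step is not shown."""
    parts = [f"{name} ({'raised' if c > 0 else 'lowered'} risk by {abs(c):.2f})" for name, c in top[:n]]
    return "Flagged mainly because of " + " and ".join(parts) + "."

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_audit(log: list[dict], txn_id: str, score: float, top: list[tuple[str, float]]) -> dict:
    """Append a record whose hash also covers the previous record's hash."""
    prev = log[-1]["hash"] if log else "0" * 64
    body = {"txn_id": txn_id, "score": score, "top_factors": top, "prev": prev}
    record = {**body, "hash": _digest(body)}
    log.append(record)
    return record

def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev = "0" * 64
    for rec in log:
        body = {k: v for k, v in rec.items() if k != "hash"}
        if body["prev"] != prev or _digest(body) != rec["hash"]:
            return False
        prev = rec["hash"]
    return True
```

A hash chain makes the log tamper-evident rather than physically immutable; in practice the latest hash would also be stored somewhere the writer cannot modify.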

See how real-time voice signals, multilingual clarity, and sub-100ms responses strengthen your fraud models. Book a demo.

Real-World Generative AI Fraud Detection Examples

These examples highlight real problems enterprises faced between 2023 and 2025 and how generative models or synthetic data improved fraud control.

Visa Real-Time Scoring: Visa faced rising card-not-present attacks. Its 2024 generative AI model scored transactions within milliseconds and cut false positives by 85%.

PayPal Scam Alerts: PayPal saw growing peer-to-peer payment scams. Its 2025 AI alerts studied behavioral patterns and warned users before risky transfers were sent.

HSBC: HSBC faced large volumes of suspicious activity that were hard to review manually. It now screens over 1.2 billion transactions a month with AI models, detecting 2–4× more suspicious activity and reducing false positives by 60%.

Wells Fargo: Wells Fargo saw rising account-takeover attempts across digital channels. AI used behavioral patterns and biometric signals to highlight unusual login activity and reduce takeover risk.

U.S. Bank: U.S. Bank dealt with fast-moving fraud across online banking and card activity. AI tools supported early detection by learning transaction patterns and flagging irregular movements faster than rule-based checks.

Frost Bank: Frost Bank needed stronger protection against digital fraud as customer activity increased. AI monitored interactions in real time and highlighted unusual behavior that pointed to potential fraud.

ICICI Bank: ICICI Bank struggled with spotting rare fraud patterns across large transaction sets. Machine-learning models studied spending behavior and flagged unusual activity before losses escalated.

As fraud patterns shift quickly, these cases show how generative methods give teams broader coverage, clearer signals, and earlier visibility across high-risk activity.

How Industries Apply Generative AI To Reduce Fraud Risk

Industries facing large-scale fraud pressure are turning to richer behavioral and conversational signals that support generative AI fraud detection. The shift comes from a need to catch intent patterns earlier, simulate threats with higher accuracy, and strengthen scoring models that must adapt to quick changes in fraud tactics.

| Industry | How They Use Generative AI To Reduce Fraud Risk |
|---|---|
| Banking | Flags abnormal caller behavior during account access by studying pacing swings, repeated clarifications, and stress-linked voice cues. |
| Cards & Payments | Uses synthetic dispute variants to spot rare patterns and tighten triage for high-volume review queues. |
| Insurance | Tests edge-case claim scenarios to catch scripted phrasing and unusual response timing in claims calls. |
| Lending & Credit | Applies behavior cues from multilingual calls to strengthen identity checks during early approval steps. |
| E-commerce & Merchants | Builds simulated transaction flows to identify irregular storefront activity across mixed data streams. |
| Healthcare | Surfaces anomaly signals during intake calls to highlight irregular patient or provider patterns tied to identity misuse. |

Practical Steps for Adding Generative AI to Fraud Workflows

Teams moving toward generative AI fraud detection benefit most when the foundation is built on clean signals, reliable call data, and structured flows that models can learn from. The focus is not model choice alone but the quality and consistency of inputs that shape how well generative systems detect subtle shifts in fraud behavior.

Map high-friction fraud touchpoints: Identify caller stages where hesitation shifts, repeated phrasing, or unusual timing patterns appear most often.

Collect voice and conversation signals: Use call audio, pacing swings, and clarification loops to expand the behavioral inputs that models draw from.

Build synthetic scenario sets: Create controlled variants of high-risk events to widen pattern coverage in generative model training runs.

Feed multilingual cases into one pipeline: Unify accents and mixed-language calls so fraud scoring remains consistent across all customer segments.

Connect real-time signals to triage rules: Route calls with risk cues to analysts while the interaction is still active, improving generative AI fraud prevention outcomes.

Run shadow-mode trials: Run the generative model alongside existing rules to measure precision gains without affecting live decisions.

Create feedback loops from analyst actions: Capture how analysts classify edge cases so models refine their scoring patterns over time.
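The last two steps, shadow-mode trials and analyst feedback loops, can be evaluated with simple bookkeeping: the rules still make the live decision, the model's decision is only logged, and analyst outcomes serve as ground truth for both. The `ShadowRecord` schema and function names below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ShadowRecord:
    case_id: str
    rule_flag: bool       # live decision made by the existing rules
    model_flag: bool      # shadow decision, logged but never acted on
    analyst_fraud: bool   # analyst's final classification (feedback label)

def precision_recall(flags: list[bool], truth: list[bool]) -> tuple[float, float]:
    tp = sum(f and t for f, t in zip(flags, truth))
    fp = sum(f and not t for f, t in zip(flags, truth))
    fn = sum(t and not f for f, t in zip(flags, truth))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def compare(records: list[ShadowRecord]) -> dict:
    truth = [r.analyst_fraud for r in records]
    return {
        "rules": precision_recall([r.rule_flag for r in records], truth),
        "model": precision_recall([r.model_flag for r in records], truth),
        # Confirmed fraud that only the shadow model would have caught
        "model_only_catches": [r.case_id for r in records
                               if r.model_flag and not r.rule_flag and r.analyst_fraud],
    }
```

Cases in `model_only_catches` are worth reviewing first, since they show what the model adds over the existing rules; the same analyst labels can then feed the next training run.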

For teams handling high-risk insurance calls and early-stage verification, see how voice signals strengthen fraud control in Real-Time Insurance Fraud Detection Using Voice AI.

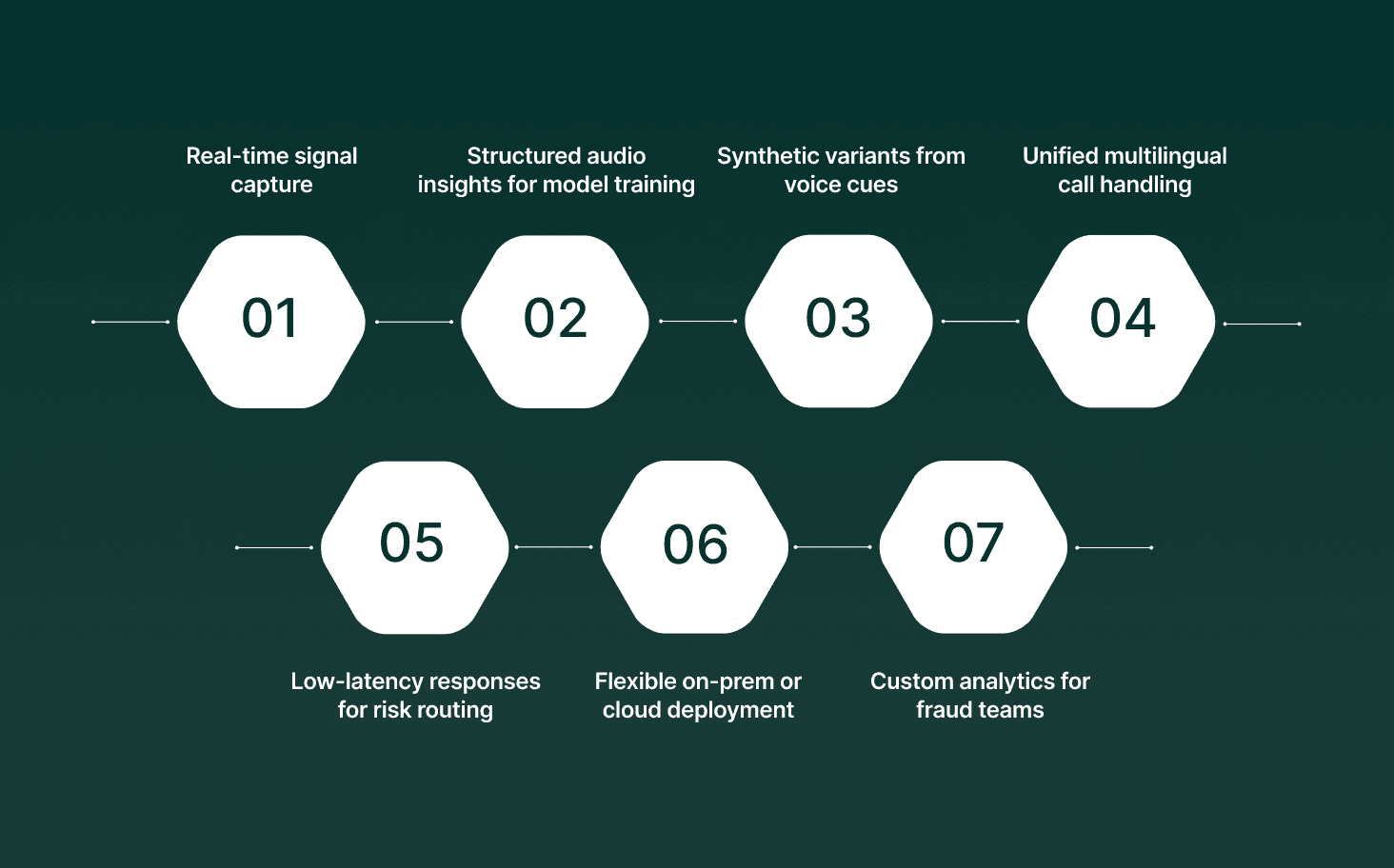

How Smallest.ai Supports Stronger Fraud Detection Models

Fraud teams working with voice-heavy workflows gain an edge when their signals capture intent shifts, pacing movements, bilingual patterns, and stress-linked moments in real time. Smallest.ai strengthens generative AI fraud detection by giving fraud models richer audio and conversation data that traditional logs miss, along with real-time agent capabilities that surface risk cues early in the call flow.

Real-time signal capture: Lightning Voice AI extracts pacing swings, hesitation shifts, and repeated phrasing directly from live calls.

Structured audio insights for model training: Electron SLMs turn raw audio into clean behavioral signals that support generative AI in fraud detection.

Synthetic variants from voice cues: Captured call patterns create controlled variants that widen coverage in generative fraud model training runs.

Unified multilingual call handling: Natural voices across 16 languages keep signals consistent for global customers.

Low-latency responses for risk routing: Sub-100ms voice output helps route suspicious callers to human teams without slowing the interaction.

Flexible on-prem or cloud deployment: Support for SOC 2, HIPAA, and PCI requirements gives regulated industries full control when running gen AI for fraud detection.

Custom analytics for fraud teams: Voice Agentic Platforms surface call patterns linked to identity risk, feeding cleaner inputs into generative AI fraud detection workflows.

Smallest.ai gives fraud teams the real-time signals, structured behavioral data, and deployment control needed to build sharper fraud models with generative AI.

Final Thoughts!

Fraud teams achieve the strongest results when their systems leverage clearer behavioral signals, faster feedback, and models that adapt without lengthy retraining cycles. As fraud patterns shift, the winners will be the enterprises that ground their workflows in deeper audio and conversational cues rather than narrow log-based checks. This is where generative AI fraud detection shows its real strength, especially for teams handling voice-heavy interactions.

Smallest.ai gives these teams a direct path to stronger detection. Voice AI, conversational AI, voice agents, and voice cloning produce real-time cues that support generative AI fraud detection with richer audio patterns, cleaner timing data, and multilingual clarity. Enterprises gain sharper scoring, earlier risk escalation, and smoother caller experiences without slowing their operations.

To see how these capabilities fit your workflows, connect with our team. Book a demo to experience Smallest.ai in action.

FAQs About Generative AI in Fraud Detection

1. How does generative AI fraud detection work with voice or call-based signals that traditional models ignore?

Generative systems convert pacing shifts, hesitation cues, and conversation patterns into structured signals that strengthen generative AI fraud detection pipelines, especially for early-stage identity checks.

2. Can generative AI fraud prevention help with attacks that do not appear in historical datasets?

Yes. Synthetic scenarios widen pattern coverage, allowing generative AI fraud prevention models to learn from plausible variants of threats that have not yet appeared in production.

3. What advantage does generative AI in fraud detection bring to multilingual or accent-heavy customer interactions?

Unified acoustic signals improve scoring accuracy, giving generative AI in fraud detection a consistent base across mixed-language calls.

4. How does gen AI for fraud detection handle quick shifts in attacker behavior without heavy manual retraining?

Adaptive pipelines detect shifts in real time, helping gen AI for fraud detection maintain accuracy even when tactics change quickly.

5. Why do enterprises pair graph analysis with generative AI for fraud detection instead of using transaction logs alone?

Relational signals expose fraud rings that single-transaction models overlook, giving generative AI for fraud detection deeper visibility into hidden networks and coordinated activity.