Explore explainable AI in insurance, covering core techniques, regulated use cases, real-time voice workflows, and how insurers build audit-ready AI decisions

Kaushal Choudhary

Updated on January 28, 2026 at 2:26 PM

Insurance leaders have accepted automation, yet many still face an uncomfortable moment when a claim, premium, or underwriting decision cannot be clearly explained. That gap is why explainable AI in insurance has moved from technical debate to operational priority. When customers, regulators, or internal teams ask “why,” opaque models create friction, delays, and trust erosion that insurers cannot afford.

The urgency is growing fast. The explainable AI market size is projected to grow from USD 8.01 million in 2024 to USD 53.92 million by 2035, reflecting rising regulatory pressure and enterprise demand for accountable automation. As explainable AI in insurance reshapes claims, pricing, underwriting, and voice interactions, insurers must rethink how AI decisions are built, executed, and defended.

In this guide, we break down where explainability matters most, how it works in practice, and what insurers should prioritize next.

Key Takeaways

Explainability Is Execution-Critical: Insurance AI must expose feature drivers, confidence thresholds, and rule paths at runtime to remain auditable and defensible.

Black-Box AI Breaks Regulated Decisions: Opaque models fail to support claims, pricing, fraud, and customer workflows that require decisions to be explained after execution.

Explainability Must Be Built Into Systems: Effective implementations combine inference-time attribution, rule enforcement, lineage tracking, and human escalation.

Voice AI Increases Explainability Risk: Live insurance calls require turn-level intent confidence, policy-bound responses, and deterministic human fallback.

Platforms Determine Explainability Quality: Insurance-grade platforms embed explainability into execution and audit layers, not post-hoc reporting tools.

What is Explainable AI in Insurance?

Explainable AI in insurance refers to AI systems that expose decision logic, feature influence, confidence levels, and escalation paths across underwriting, claims, pricing, fraud, and customer interactions, allowing insurers to validate, audit, and defend outcomes in regulated environments.

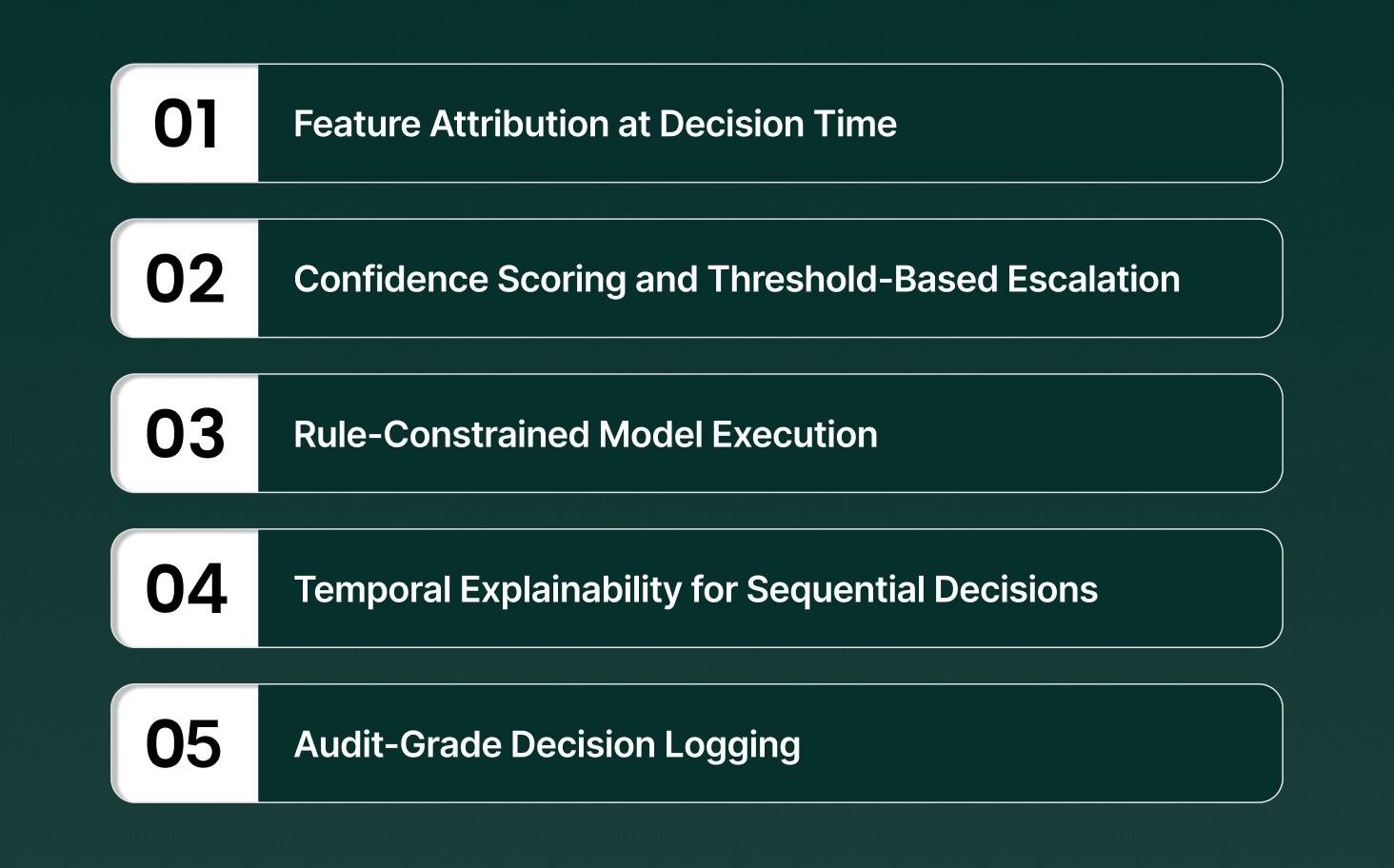

Core Techniques Powering Explainable AI in Insurance

Feature Attribution at Decision Time: Quantifies the exact contribution of input variables such as claim history, loss ratios, policy tenure, or call metadata at the moment a decision is generated, allowing post-decision traceability without re-running models.

Confidence Scoring and Threshold-Based Escalation: Assigns probabilistic confidence to each prediction and triggers human review when confidence falls below defined thresholds, which is critical for claims denials, fraud flags, and underwriting exceptions.

Rule-Constrained Model Execution: Combines statistical models with deterministic business rules so regulatory constraints, underwriting guidelines, and SOPs override or bound model outputs in real production workflows.

Temporal Explainability for Sequential Decisions: Captures how decisions evolve across time, such as multi-call claim interactions or staged underwriting reviews, rather than explaining a single static prediction.

Audit-Grade Decision Logging: Records inputs, model versions, feature weights, confidence scores, and human interventions in immutable logs that satisfy internal audit, regulator review, and dispute resolution requirements.
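To make the first two techniques concrete, here is a minimal Python sketch that emits per-feature contributions together with a prediction and then routes the decision by confidence band. The weights, baseline values, band limits, and the names `score_with_attribution` and `route` are illustrative assumptions, not part of any specific insurer's system. For a simple linear (logistic) model, weight times deviation from a baseline is a standard way to attribute the score on the logit scale; more complex models would need a dedicated attribution method.

```python
import math

# Hypothetical logistic-model weights and portfolio baseline (illustrative values only).
WEIGHTS = {"prior_claims": 0.8, "loss_ratio": 1.5, "policy_tenure_years": -0.2}
BASELINE = {"prior_claims": 1.0, "loss_ratio": 0.6, "policy_tenure_years": 4.0}
BIAS = -1.0
REVIEW_BAND = (0.35, 0.65)  # assumed band in which a human must review


def score_with_attribution(features):
    """Return (probability, per-feature contribution to the logit vs. the baseline)."""
    contributions = {
        name: WEIGHTS[name] * (features[name] - BASELINE[name]) for name in WEIGHTS
    }
    baseline_logit = BIAS + sum(WEIGHTS[n] * BASELINE[n] for n in WEIGHTS)
    logit = baseline_logit + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    return probability, contributions


def route(probability):
    """Confidence-gated routing: uncertain scores go to a human reviewer."""
    low, high = REVIEW_BAND
    if low <= probability <= high:
        return "human_review"
    return "auto_flag" if probability > high else "auto_clear"


prob, contribs = score_with_attribution(
    {"prior_claims": 3.0, "loss_ratio": 0.9, "policy_tenure_years": 1.0}
)
# The attribution is stored with the outcome, so it never has to be recomputed later.
decision_event = {
    "probability": round(prob, 3),
    "contributions": contribs,
    "route": route(prob),
}
```

Persisting `decision_event` at decision time is what allows later traceability without re-running the model.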

Explore how explainable AI principles extend into payer operations, claims, and member engagement in AI in Health Insurance: 6 Use Cases Top Carriers Depend On

Key Use Cases of Explainable AI in Insurance

Explainable AI in insurance converts probabilistic model outputs into auditable decision artifacts by exposing feature causality, constraint enforcement, uncertainty bounds, and execution lineage across regulated insurance workflows.

Underwriting Decisions

Explainable underwriting systems expose how risk features, interaction terms, monotonic constraints, and underwriting rules jointly determine eligibility, exclusions, and coverage limits during automated policy evaluation.

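Causal Feature Attribution: SHAP values and interaction values quantify the marginal and joint contribution of loss frequency, exposure period, asset age, and occupancy class to the model's underwriting output. These attributions describe how the model behaves, so causal claims still need separate validation.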

Constraint-Aware Decision Paths: Hard underwriting constraints enforce eligibility floors and ceilings independent of model scores, with overrides explicitly logged and versioned.

Reproducible Underwriting Lineage: Each decision persists model hash, feature snapshot, constraint set, and confidence interval for regulatory reproduction.

Example: A fleet policy is declined because vehicle age monotonic constraints override a favorable loss history despite a positive model score.
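The fleet example can be sketched as a deterministic rule layer wrapped around a model score. This is a simplified illustration: it uses a hard vehicle-age ceiling rather than a true monotonic model constraint, and the limits `MAX_VEHICLE_AGE_YEARS` and `MIN_ACCEPT_SCORE` as well as the `decide` function are hypothetical.

```python
from dataclasses import dataclass, field

MAX_VEHICLE_AGE_YEARS = 15  # hypothetical eligibility ceiling
MIN_ACCEPT_SCORE = 0.60     # hypothetical model-score floor for auto-accept


@dataclass
class UnderwritingDecision:
    outcome: str
    model_score: float
    rule_path: list = field(default_factory=list)


def decide(model_score, oldest_vehicle_age_years):
    """Apply hard rules first; the model score only matters if every rule passes."""
    path = []
    if oldest_vehicle_age_years > MAX_VEHICLE_AGE_YEARS:
        path.append(f"vehicle_age {oldest_vehicle_age_years} > {MAX_VEHICLE_AGE_YEARS}: decline")
        return UnderwritingDecision("decline", model_score, path)
    path.append("vehicle_age rule: pass")

    if model_score >= MIN_ACCEPT_SCORE:
        path.append(f"score {model_score} >= {MIN_ACCEPT_SCORE}: accept")
        return UnderwritingDecision("accept", model_score, path)

    path.append(f"score {model_score} < {MIN_ACCEPT_SCORE}: refer to underwriter")
    return UnderwritingDecision("refer", model_score, path)


# A favorable score does not rescue a fleet that breaches the age rule.
declined = decide(model_score=0.82, oldest_vehicle_age_years=18)
```

Because `rule_path` is returned with the outcome, a reviewer can see that the decline came from the rule, not from the model.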

Pricing and Rating Decisions

Explainable pricing systems decompose premium outputs into factor-level contributions while validating regulatory constraints, interaction effects, and competitive adjustments at quote execution time.

Premium Factor Decomposition: Partial dependence gradients isolate premium deltas caused by exposure units, claims volatility, geography, and deductible selections.

Fairness Risk Isolation: Correlation audits surface proxy variables that indirectly influence premiums through interaction effects rather than explicit rating factors.

Quote Lineage Control: Every price binds to rating tables, calibration dataset version, elasticity assumptions, and constraint configuration.

Example: A homeowner premium increase is attributed to deductible–region interaction effects, not absolute location risk.
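One simple way to present a factor-level breakdown is a sequential "waterfall" between two quotes. The sketch below assumes a multiplicative rating plan with made-up factor names and values; it is not the partial dependence method mentioned above. Because the factors multiply, the split between them depends on the order in which they are swapped, which is one reason interaction effects are hard to attribute to a single factor.

```python
def premium(base_rate, factors):
    """Multiplicative rating plan: base rate times every rating factor."""
    result = base_rate
    for value in factors.values():
        result *= value
    return result


def waterfall(base_rate, old_factors, new_factors):
    """Swap factors one at a time and record the premium change from each swap."""
    current = dict(old_factors)
    running = premium(base_rate, current)
    deltas = {}
    for name, new_value in new_factors.items():
        current[name] = new_value
        updated = premium(base_rate, current)
        deltas[name] = updated - running
        running = updated
    return deltas


# Hypothetical renewal: every factor value here is illustrative.
old = {"region": 1.00, "deductible": 1.00, "claims_volatility": 1.00}
new = {"region": 1.10, "deductible": 0.95, "claims_volatility": 1.20}
deltas = waterfall(1000.0, old, new)
total_change = premium(1000.0, new) - premium(1000.0, old)
```

The individual deltas always sum to `total_change`, so the explanation reconciles with the quoted premium.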

Claims Triage and Settlement

Explainable claims engines reveal why claims are auto-approved, fast-tracked, or escalated by exposing policy clause activation, anomaly drivers, and confidence thresholds.

Clause Execution Mapping: Decision logic maps claim attributes directly to policy clauses, endorsements, and exclusion triggers applied during adjudication.

Anomaly Driver Disclosure: Statistical deviations are decomposed into timing variance, cost inflation, provider frequency, and documentation inconsistencies.

Escalation Threshold Enforcement: Confidence bands enforce deterministic human review for borderline or high-liability claims.

Example: A motor claim is escalated due to repair cost deviation exceeding historical variance for the same damage class.
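The motor-claim example can be approximated with a z-score check against historical repair costs for the same damage class. This is a minimal sketch: the threshold of 3.0, the sample history, and the `cost_deviation` function are assumptions, and production systems would likely use more robust statistics.

```python
from statistics import mean, stdev


def cost_deviation(history, claimed_cost, z_threshold=3.0):
    """Escalate when the claimed cost sits far above the historical distribution."""
    if len(history) < 2:
        # Not enough data to estimate variance, so a human decides.
        return {"z_score": None, "escalate": True, "reason": "insufficient_history"}

    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return {"z_score": None, "escalate": True, "reason": "no_variance_in_history"}

    z = (claimed_cost - mu) / sigma
    escalate = z > z_threshold
    reason = "cost_deviation_above_threshold" if escalate else "within_expected_range"
    return {"z_score": round(z, 2), "escalate": escalate, "reason": reason}


# Hypothetical repair costs for one damage class.
history = [1000, 1100, 900, 1050, 950]
result = cost_deviation(history, claimed_cost=1500)
```

Returning the z-score and a reason code along with the flag gives the adjuster the driver of the escalation, not just the outcome.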

Fraud Detection

Explainable fraud systems convert probabilistic suspicion into investigator-actionable evidence through interpretable patterns and entity-level relationships.

Graph-Based Evidence Surfacing: Network graphs expose shared entities, temporal clustering, and repeated behavior across claims.

Evidence-Weighted Escalation: Fraud escalation requires threshold satisfaction across multiple causal indicators, not single risk scores.

Legal Defensibility Artifacts: Fraud conclusions persist rule triggers, temporal context, and supporting evidence for prosecution readiness.

Example: Multiple injury claims are flagged because they link to the same medical provider within synchronized submission windows.
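A shared-provider pattern like this can be surfaced with a simple grouping and sliding-window pass, without a full graph database. The sketch below assumes claims arrive as dictionaries with `claim_id`, `provider_id`, and `submitted` fields; those field names, the 7-day window, and the minimum of three claims are illustrative choices.

```python
from collections import defaultdict
from datetime import date, timedelta


def provider_clusters(claims, window_days=7, min_claims=3):
    """Return providers with at least `min_claims` claims inside one time window."""
    by_provider = defaultdict(list)
    for claim in claims:
        by_provider[claim["provider_id"]].append(claim)

    window = timedelta(days=window_days)
    evidence = []
    for provider_id, items in by_provider.items():
        items.sort(key=lambda c: c["submitted"])
        start = 0
        for end in range(len(items)):
            # Shrink the window until it spans at most `window_days`.
            while items[end]["submitted"] - items[start]["submitted"] > window:
                start += 1
            if end - start + 1 >= min_claims:
                evidence.append({
                    "provider_id": provider_id,
                    "claim_ids": [c["claim_id"] for c in items[start:end + 1]],
                    "window_days": window_days,
                })
                break  # one evidence record per provider is enough here
    return evidence


# Hypothetical claims data.
claims = [
    {"claim_id": "C1", "provider_id": "P1", "submitted": date(2025, 1, 2)},
    {"claim_id": "C2", "provider_id": "P1", "submitted": date(2025, 1, 3)},
    {"claim_id": "C3", "provider_id": "P2", "submitted": date(2025, 1, 1)},
    {"claim_id": "C4", "provider_id": "P1", "submitted": date(2025, 1, 5)},
    {"claim_id": "C5", "provider_id": "P2", "submitted": date(2025, 3, 1)},
]
flags = provider_clusters(claims)
```

Each record lists the specific claims behind the flag, which is the kind of evidence an investigator can act on.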

Voice and Conversational Systems

Explainable voice AI exposes conversational state transitions, intent uncertainty, and policy bindings in real time during insurance calls.

Turn-Level State Attribution: Each utterance logs ASR confidence, intent probability distribution, extracted entities, and response rationale.

Regulatory Boundary Enforcement: Disclosures, commitments, and denials execute only within rule-approved conversational states.

Post-Call Decision Reconstruction: Full replay of conversational timelines with reasoning metadata for audits and dispute handling.

Example: A claim intake call escalates when entity extraction confidence drops below predefined compliance thresholds.

Explainable AI in insurance is execution infrastructure, not interpretability tooling, allowing insurers to deploy automation that withstands audit, litigation, and real-time operational scrutiny.

See how Smallest.ai delivers real-time, explainable voice AI with deterministic controls, audit-ready call logs, and compliant human handoffs built for regulated insurance operations.

Explainable AI Meets Real-Time Voice and Conversational Systems

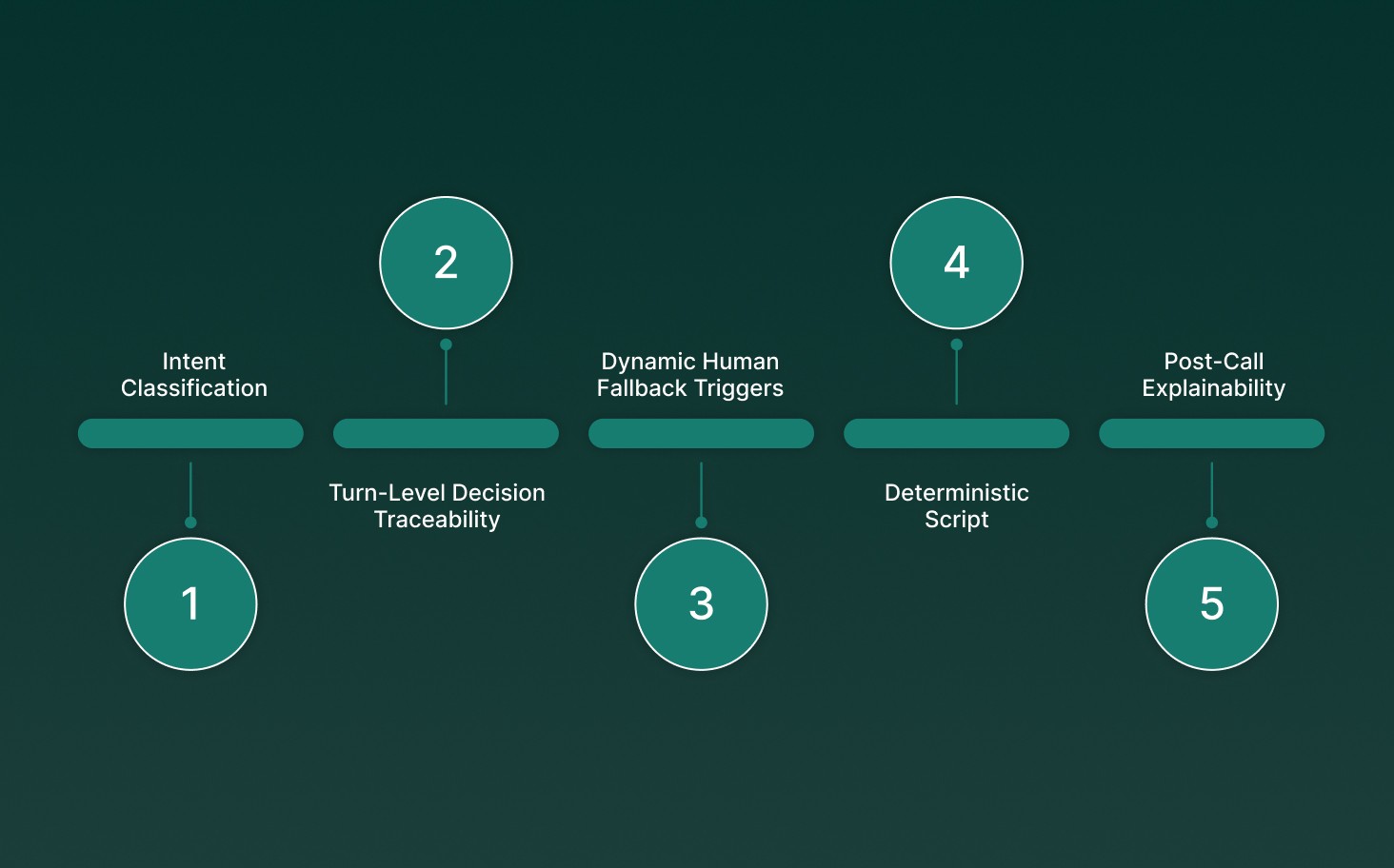

When AI decisions are executed inside live voice and conversational systems, explainability shifts from post-decision reporting to real-time operational control. In insurance, where calls drive claims intake, coverage clarification, payments, and dispute resolution, AI must expose intent interpretation, confidence, and next-action logic while the conversation is still in progress.

Intent Classification With Confidence Exposure: Real-time speech models must surface intent probabilities, not only the predicted intent, so systems can distinguish between similar but high-risk intents, such as claim initiation versus policy modification.

Turn-Level Decision Traceability: Each conversational turn must log recognized entities, extracted values, and decision transitions, allowing insurers to reconstruct how a call progressed and why a specific outcome occurred.

Dynamic Human Fallback Triggers: Explainable systems expose uncertainty thresholds that automatically transfer calls to human agents when ASR confidence drops, entity extraction conflicts occur, or policy rules are ambiguously satisfied.

Deterministic Script and Policy Enforcement: Conversational agents must operate within explainable, rule-constrained flows that ensure coverage explanations, disclosures, and commitments remain compliant across jurisdictions and product lines.

Post-Call Explainability for QA and Compliance: Voice AI must generate structured call summaries that map conversational decisions to policy clauses, SOP steps, and system actions, supporting audits, training, and regulator inquiries.

In insurance, real-time voice AI without explainability introduces irreversible risk, making transparent conversational decisioning essential for compliant, scalable automation.
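The turn-level controls described above can be sketched as a small function that logs the full intent distribution and decides whether to continue or hand off. The thresholds, intent names, and the `TurnRecord` and `evaluate_turn` names are hypothetical and do not represent any vendor's actual API.

```python
from dataclasses import dataclass

MIN_ASR_CONFIDENCE = 0.80  # assumed floor for trusting the transcript
MIN_INTENT_MARGIN = 0.20   # assumed gap required between the top two intents


@dataclass(frozen=True)
class TurnRecord:
    turn_id: int
    asr_confidence: float
    intent_probs: dict
    top_intent: str
    action: str
    reason: str


def evaluate_turn(turn_id, asr_confidence, intent_probs):
    """Log the whole intent distribution and apply deterministic fallback rules."""
    ranked = sorted(intent_probs.items(), key=lambda kv: kv[1], reverse=True)
    top_intent, top_p = ranked[0]
    runner_up_p = ranked[1][1] if len(ranked) > 1 else 0.0

    if asr_confidence < MIN_ASR_CONFIDENCE:
        action, reason = "handoff_to_human", "asr_confidence_below_threshold"
    elif top_p - runner_up_p < MIN_INTENT_MARGIN:
        action, reason = "handoff_to_human", "ambiguous_intent"
    else:
        action, reason = "continue", "confident_intent"

    return TurnRecord(turn_id, asr_confidence, dict(intent_probs), top_intent, action, reason)


# Two similar, high-risk intents are nearly tied, so the call is transferred.
turn = evaluate_turn(
    1, 0.95, {"claim_initiation": 0.48, "policy_modification": 0.45, "other": 0.07}
)
```

Keeping one `TurnRecord` per turn is also what makes a post-call timeline possible to reconstruct.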

How Insurers Operationalize Explainable AI

Operationalizing explainable AI in insurance means embedding transparency, controls, and auditability directly into production systems, not layering explanations after decisions are made.

Inference-Level Explainability: Models emit feature contributions, confidence scores, and rule evaluations alongside predictions for every decision event.

Decision-Oriented Orchestration: Model outputs flow through rule-bound engines that enforce policy logic, jurisdictional constraints, and escalation paths.

Versioned Decision Lineage: Each outcome is linked to the model version, feature schema, and training snapshot for full reproducibility.

Structured Human Review: Low-confidence or high-impact cases are routed to experts with machine-generated explanations.

Governance by Design: Explainability standards, thresholds, and metrics are defined jointly across data, compliance, legal, and operations teams.

Explainable AI becomes operational when it is enforced through system architecture, governance, and workflows rather than added as a reporting layer after deployment.
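Versioned decision lineage can be illustrated with content hashes over a decision's inputs. In this minimal sketch, the field names, the version string, and the `fingerprint`, `lineage_record`, and `matches` helpers are assumptions chosen for illustration.

```python
import hashlib
import json


def fingerprint(obj):
    """Stable SHA-256 hash of a JSON-serializable object (key order is normalized)."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def lineage_record(decision_id, outcome, features, model_version, rule_set, thresholds):
    """Bundle everything needed to reproduce one decision."""
    return {
        "decision_id": decision_id,
        "outcome": outcome,
        "model_version": model_version,
        "feature_snapshot": features,
        "feature_hash": fingerprint(features),
        "rule_set_hash": fingerprint(rule_set),
        "thresholds": thresholds,
    }


def matches(record, replayed_features, current_rule_set):
    """Check that a replay uses the same inputs and rules as the original decision."""
    return (
        record["feature_hash"] == fingerprint(replayed_features)
        and record["rule_set_hash"] == fingerprint(current_rule_set)
    )


# Hypothetical decision event.
rules = {"max_vehicle_age": 15, "min_score": 0.6}
record = lineage_record(
    "D-001", "refer", {"loss_ratio": 0.9, "prior_claims": 3},
    model_version="risk-model-1.4.2", rule_set=rules, thresholds={"review_band": [0.35, 0.65]},
)
```

If a rule set or feature snapshot has changed since the decision, `matches` returns `False`, signaling that a replay would not reproduce the original conditions.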

Challenges and Limitations of Explainable AI in Insurance

Explainable AI in insurance faces practical limits around causality, latency, system complexity, and regulatory alignment when applied to real-time, production-grade decision workflows.

| Challenge | Technical Constraint | Insurance Impact |
| --- | --- | --- |
| Causality Limits | Feature attribution reflects correlation, not proven causation. | Weakens legal defensibility in disputes. |
| Runtime Overhead | Inference-time explanations increase latency and compute cost. | Risks SLA breaches in live calls and pricing. |
| Pipeline Complexity | Decisions span multiple models and rules engines. | Hard to reconstruct end-to-end logic. |
| Regulatory Fragmentation | Explainability standards vary by region and product. | Requires multiple explanation formats. |
| Usability Gap | Explanations may be technically correct but unreadable. | Limits adoption by claims and compliance teams. |

Explainable AI in insurance demands architectural tradeoffs to balance interpretability, performance, and regulatory compliance.

Explore how explainable, real-time AI principles are reshaping regulated recovery workflows in Why Debt Collection Agencies Are Turning to Conversational AI in 2025? 6 Applications & Benefits

Best Practices of Explainable AI for Insurance

Explainable AI best practices in insurance prioritize runtime visibility, decision reproducibility, and structured governance so automated outcomes remain accountable during audits, disputes, and live operations.

Bind Explanations to Inference: Capture feature attributions, confidence scores, and rule evaluations at decision time and persist them with the outcome, not post hoc.

Decouple Prediction and Control: Pass model outputs through deterministic decision layers that enforce policy rules, jurisdictional limits, and escalation logic.

Version All Decision Inputs: Store model binaries, feature schemas, preprocessing logic, rule sets, and thresholds to fully reproduce historical decisions.

Use Confidence-Gated Automation: Define confidence bands that govern autonomous execution, human review, or execution halt for high-impact decisions.

Monitor Explainability Drift: Track shifts in feature influence, explanation patterns, and confidence distributions alongside accuracy and latency.

Explainable AI in insurance succeeds when explainability is enforced through system design, controls, and monitoring rather than treated as documentation after deployment.
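Explainability drift monitoring can start with something as simple as comparing each feature's share of total absolute attribution across two time windows. The following sketch assumes attributions are stored per decision as feature-to-contribution dictionaries; the 0.10 tolerance and the function names are illustrative.

```python
def attribution_shares(attributions):
    """Each feature's share of total absolute attribution across a set of decisions."""
    totals = {}
    for row in attributions:
        for name, value in row.items():
            totals[name] = totals.get(name, 0.0) + abs(value)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {name: 0.0 for name in totals}
    return {name: total / grand_total for name, total in totals.items()}


def drifted_features(baseline, current, tolerance=0.10):
    """Features whose attribution share moved by more than `tolerance`."""
    base_shares = attribution_shares(baseline)
    cur_shares = attribution_shares(current)
    drift = {}
    for name in set(base_shares) | set(cur_shares):
        shift = cur_shares.get(name, 0.0) - base_shares.get(name, 0.0)
        if abs(shift) > tolerance:
            drift[name] = round(shift, 3)
    return drift


# Hypothetical attribution logs from two monitoring windows.
last_quarter = [{"loss_ratio": 0.6, "tenure": 0.4}, {"loss_ratio": 0.5, "tenure": 0.5}]
this_month = [{"loss_ratio": 0.2, "tenure": 0.8}, {"loss_ratio": 0.3, "tenure": 0.7}]
alerts = drifted_features(last_quarter, this_month)
```

A shift like this can appear even when accuracy is stable, which is why it is tracked as a separate signal.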

Where Black-Box AI Breaks Down in Insurance Workflows

Black-box AI produces decisions without exposing underlying logic, feature drivers, or confidence levels. In insurance, this opacity creates audit, compliance, and customer-experience risk because decisions must be explainable and reproducible.

Unverifiable Claims Denials: Models reject claims based on latent correlations without surfacing evidentiary factors, weakening dispute resolution and legal defense.

Indefensible Pricing Actions: Premium adjustments lack transparent rationale, preventing validation of fairness, proportionality, and regulatory compliance.

High False Fraud Flags: Opaque anomaly scores generate excessive false positives, increasing investigation workload without actionable evidence.

Uncontrolled Customer Automation: Voice and chat systems act without confidence exposure or fallback logic, causing irreversible CX and compliance failures.

Audit and MRM Gaps: Decisions cannot be reproduced due to missing feature states, model paths, and threshold records.

Black-box AI fails in insurance because decisions must be inspectable, defensible, and traceable by design.

Understand how explainable decision frameworks extend into lending and financial risk workflows in How AI Credit Risk Assessment Is Transforming Risk Management

What to Look for in an Explainable AI Platform for Insurance

An explainable AI platform in insurance must support decision traceability at runtime, not retrospective analysis. The focus is on operational control, audit readiness, and deterministic behavior across regulated workflows.

Inference-Time Feature Attribution: The platform must expose per-decision feature contribution at runtime, tied to the exact input vector and model version, not sampled or approximated post hoc.

Deterministic Confidence and Escalation Controls: Native support for confidence thresholds that trigger human review, workflow branching, or hard stops when uncertainty, entity conflicts, or policy gaps occur.

Rule-Bound Decision Paths: Ability to bind AI outputs to underwriting rules, pricing constraints, compliance logic, and SOPs so predictions cannot bypass domain controls.

Audit-Grade Decision Lineage: Immutable logs capturing inputs, transformations, model state, explanation artifacts, and human overrides, queryable by regulator, risk, or compliance teams.

Structured Explainability for Voice and Chat: Turn-level explanation exports linking intents, entities, confidence scores, and policy references for post-call audits and dispute resolution.

Insurance-grade explainable AI platforms embed explainability into execution, governance, and escalation, not dashboards.
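When evaluating claims of "immutable" logging, it helps to know what a tamper-evident log looks like in its simplest form. The sketch below chains each entry to the previous one with a SHA-256 hash; it detects modification but does not prevent it, and a real platform would add access controls and external anchoring. The entry layout and function names are assumptions.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash, payload):
    body = json.dumps({"prev_hash": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log, payload):
    """Append a decision record that is cryptographically linked to the previous one."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS_HASH
    entry = {
        "prev_hash": prev_hash,
        "payload": payload,
        "entry_hash": _digest(prev_hash, payload),
    }
    log.append(entry)
    return entry


def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["entry_hash"] != _digest(entry["prev_hash"], entry["payload"]):
            return False
        prev_hash = entry["entry_hash"]
    return True


# Hypothetical decision records.
audit_log = []
append_entry(audit_log, {"decision_id": "D-001", "outcome": "deny", "confidence": 0.91})
append_entry(audit_log, {"decision_id": "D-002", "outcome": "approve", "confidence": 0.88})
chain_is_valid = verify(audit_log)
```

Editing any stored payload afterward causes `verify` to return `False`, which is the property an auditor relies on.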

Future Trends in Explainable AI for Insurance

Explainable AI in insurance is evolving into a decision infrastructure, shaped by regulatory alignment, real-time automation, and the need for enforceable trust in production systems.

Automated Explanation Generation: Models increasingly generate structured, policy-aligned natural language explanations directly from decision artifacts such as feature attribution, rule triggers, and confidence thresholds.

Real-Time Explainability at Execution: Explainability is shifting to inference-time delivery, enabling claims, pricing, and voice systems to expose reasoning while decisions are being executed.

Immutable Decision Records: Integration with tamper-resistant ledgers is enabling immutable storage of decision lineage, model state, and explanation artifacts for audit and dispute resolution.

Stakeholder-Specific Explanations: Explanation outputs are adapting to role context, delivering technical detail for auditors, operational summaries for agents, and simplified rationale for customers.

Explainability Across Multi-Model Systems: New frameworks are emerging to unify explanations across chained models, rules engines, and conversational systems rather than isolated predictions.

The future of explainable AI in insurance lies in execution-level explainability that governs how decisions are made, chained, and defended in production environments.

How Smallest.ai Enables Explainable, Real-Time Voice AI for Insurance

Smallest.ai is designed for execution-grade explainability in live voice workflows, where decisions are made mid-call, under regulatory constraints, and must remain inspectable after the interaction ends.

Deterministic Voice Agent Flows: Every voice agent runs on predefined, rule-bound state machines. Each transition, prompt, and response is traceable to a specific intent, policy rule, and confidence threshold.

Turn-Level Confidence Scoring: Speech recognition, intent classification, and response generation emit confidence scores per turn, allowing explainable escalation when ambiguity, silence, or entity conflicts appear.

Policy-Anchored Response Generation: Voice outputs are generated solely from approved policy documents, scripts, or knowledge bases, establishing a clear link between spoken responses and their source artifacts.

Real-Time Human Fallback Triggers: Low confidence, regulatory keywords, or out-of-scope requests trigger deterministic handoff to human agents, preserving explainability at failure boundaries.

End-to-End Call Auditability: Each call produces structured logs covering audio input, transcriptions, intent paths, decision rules applied, and final outcomes, ready for compliance review.

Smallest.ai delivers explainable voice AI by constraining real-time execution rather than retrofitting explanations after the fact.

Final Thought!

As insurance workflows become faster and more automated, the real differentiator is not how advanced the model is, but how confidently its decisions can be examined, challenged, and upheld. Explainability determines whether AI remains a controlled system or turns into operational risk once it reaches real customers, regulators, and disputes.

This is where execution matters. Smallest.ai is built for real-time, explainable voice AI that keeps insurance conversations deterministic, auditable, and compliant at scale.

If you are evaluating how to deploy voice automation without sacrificing control, talk to a Smallest.ai expert to see it in action.
