Compare top voice-to-text software for real-time accuracy, low latency, and production use. Learn what works at scale and what fails in live systems. Read more.

Abhishek Mishra

Updated on

February 9, 2026 at 1:03 PM

You are on a live customer call, the speaker changes mid-sentence, numbers are dictated fast, and accents vary. The transcript lags, misses context, and breaks the flow. That moment explains why teams search for voice-to-text software that actually works in real time, not post-call cleanup tools.

The urgency is real. The voice and speech recognition software market is projected to reach $23.11 billion by 2030, driven by contact centers, voice agents, and real-time products. Buyers evaluating voice-to-text software now care less about notes and more about latency, control, and production readiness.

In this guide, we break down what matters, what scales, and what separates demos from deployable systems.

Key Takeaways

Voice-to-text is production infrastructure, not a feature: Real-time systems depend on streaming inference, deterministic decoding, and latency guarantees, not post-call transcription accuracy or UI-level tooling.

Latency metrics matter more than raw accuracy benchmarks: Time-to-first-transcript, partial hypothesis refresh, and stabilization behavior determine usability in live agents, captions, and conversational workflows.

Use-case fit depends on architecture, not labels: Voice-to-text, dictation, and transcription differ fundamentally in streaming model, speaker handling, and decoding strategy. Misalignment causes failures at scale.

Infrastructure choices define reliability and compliance: On-prem or VPC deployment, model lifecycle control, and failure isolation determine whether systems meet regulatory, concurrency, and uptime requirements.

Modern platforms converge speech, text, and action: Leading systems integrate STT with multimodal reasoning and workflow execution, allowing real-time agents rather than isolated transcription pipelines.

What Is Voice to Text Software?

Voice-to-text software converts live or recorded speech into machine-readable text using acoustic modeling, language modeling, and real-time inference pipelines. Modern systems operate as low-latency AI services that power dictation, transcription, and embedded speech recognition across applications, devices, and workflows.

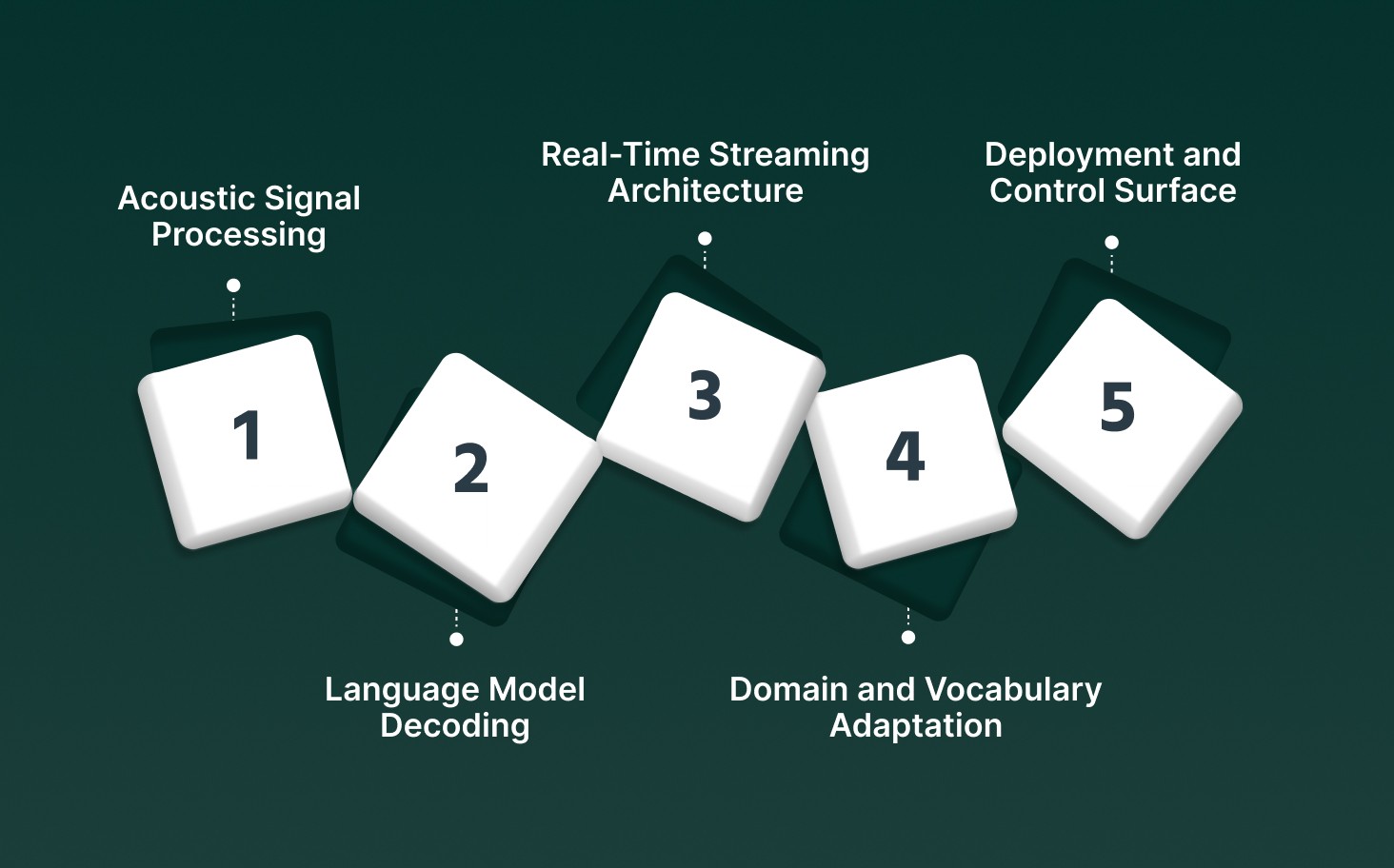

Acoustic Signal Processing: Audio is sampled, normalized, and segmented into phoneme-level features using neural encoders that handle noise, overlapping speech, and variable microphone quality.

Language Model Decoding: Probabilistic language models resolve homophones, numerics, abbreviations, and grammar by scoring token sequences in context rather than transcribing words in isolation.

Real-Time Streaming Architecture: Production-grade voice-to-text programs process partial hypotheses continuously, allowing text output within milliseconds instead of waiting for end-of-utterance completion.

Domain and Vocabulary Adaptation: Enterprise systems support custom lexicons, phrase boosting, and contextual biasing so industry terms, product names, and regulated language are transcribed correctly.

Deployment and Control Surface: Voice-to-text software runs as APIs, SDKs, or on-prem services with configurable latency targets, data retention policies, and compliance boundaries.

Voice-to-text software functions as a foundational speech infrastructure, not a surface-level typing feature. Its value depends on model behavior under real-world audio, system latency, and deployment control rather than interface polish.

Voice to Text vs Dictation vs Transcription

Although these terms are often used interchangeably, they describe distinct speech-processing workflows with different latency models, accuracy constraints, and system architectures. Understanding the difference is critical when selecting voice infrastructure for real-time writing, meetings, or production workloads.

Dimension | Voice to Text Software | Dictation Software | Transcription Software |

Primary Purpose | Convert live or recorded speech into text for downstream systems | Replace keyboard input with spoken text in active writing workflows | Convert completed audio or video into structured text records |

Latency Model | Streaming or near-real-time (partial hypotheses every 100–300 ms) | Ultra-low latency for continuous typing feedback | Batch or post-processing with full-context optimization |

Audio Input Pattern | Live streams, APIs, microphones, telephony, embedded devices | Single-speaker, close-mic, controlled environments | Multi-speaker recordings, meetings, calls, media files |

Language Modeling Strategy | Balanced for speed and accuracy using incremental decoding | Aggressive contextual correction and punctuation inference | Full-context decoding with diarization, timestamps, and re-scoring |

Speaker Handling | Optional speaker separation depending on use case | Assumes a single speaker | Explicit speaker diarization is a core requirement |

Editing Assumptions | Text may be post-processed by applications or agents | Text is edited inline during generation | Text is reviewed and edited after completion |

Vocabulary Control | Phrase boosting and runtime biasing via APIs | User-trained vocabularies and command grammars | Custom dictionaries and post-hoc correction |

Typical Accuracy Trade-off | Optimized for speed under live conditions | Optimized for perceived correctness while typing | Optimized for maximum final accuracy |

Common Use Cases | Voice interfaces, live captions, call analytics, voice agents | Writing emails, documents, code, and notes | Meetings, interviews, podcasts, compliance records |

Evaluate real-time speech infrastructure, latency behavior, and production control before committing to any vendor by reviewing Who are the leading AI platforms for developing voice assistants?

Top 8 Voice-to-Text Software

Modern voice-to-text software has shifted from offline transcription utilities to real-time, infrastructure-grade speech systems that power voice agents, contact centers, and multimodal products. These platforms are evaluated on latency determinism, streaming accuracy, control surfaces, and production governance rather than UI convenience.

1. Pulse STT

Pulse STT by Smallest.ai is an enterprise-grade speech recognition system built for real-time voice infrastructure. It delivers ultra-low latency transcription, broad language coverage, and production-ready speech intelligence for global conversational applications.

Key Features

Pulse STT (Speech-to-Text): High-accuracy real-time speech recognition with sub-70ms Time to First Transcript and low word error rates across 30+ languages, accents, and dialects. Built for live conversations and streaming audio at scale.

Advanced Speech Intelligence: Goes beyond transcription with built-in speaker diarization, real-time sentiment detection, emotion recognition, and automatic language identification for multilingual environments.

Auto Language Detection and Code Switching: Detects the dominant spoken language and adapts instantly during transcription, supporting natural multilingual conversations without manual configuration.

Profanity Filtering and Word Boosting: Allows suppression of offensive language and prioritization of custom keywords or domain terms to improve recognition precision for industry-specific vocabulary.

Real-Time Streaming Transcription: Supports live audio ingestion with quick partial transcripts and minimal delay, suited for voice agents, contact centers, live captions, and interactive voice systems.

On-Prem Deployment Option: Runs on local infrastructure for organizations that require strict data control, ultra-low latency, and compliance with regulated data environments.

Enterprise Security and Compliance: Aligned with SOC 2 Type II, HIPAA, PCI, and ISO-based security practices, supporting high-security production workloads in both cloud and on-premise deployments.

Best For: Enterprises and product teams building real-time voice applications, global customer communication systems, speech analytics platforms, and AI voice agents that require fast, accurate, and secure speech recognition at scale.

Contact Sales to book a demo with a voice AI expert and experience Pulse STT and Hydra multimodal speech intelligence in production.

2. Decagon AI

Decagon is an enterprise voice AI platform that delivers real-time, human-like customer conversations across voice, chat, email, and SMS, with deep integrations into existing support and CRM systems.

Key Features:

Real-Time Voice AI: Low-latency, interruption-aware voice agents handle intent shifts mid-sentence, allowing natural dialogue flow without hold times or rigid IVR trees.

Brand-Aligned Voice Profiles: Fine-grained control over language, tone, speed, pronunciation, and terminology guarantees agents sound consistent with brand standards across all calls.

Cross-Channel Memory: Persistent context across voice, chat, SMS, and email allows agents to recall prior interactions and deliver continuity without forcing customers to repeat information.

Best For: Large enterprises seeking always-on voice support that integrates with CRMs, helpdesks, and CPaaS stacks while preserving brand voice, conversation context, and smooth human escalation paths.

3. Ada

Ada is an enterprise AI customer service platform offering voice, chat, and email automation, designed to resolve customer inquiries instantly through natural, low-latency conversations integrated with existing support stacks.

Key Features:

Instant Voice Resolution: Ada Voice answers calls immediately, handling high-volume, repetitive inquiries without IVR menus, reducing abandonment caused by hold times exceeding 30 seconds.

Natural Language Reasoning: Uses multiple AI models to manage open-ended, real-world speech patterns, adapting responses dynamically instead of relying on scripted flows.

Omnichannel Continuity: Shares memory and context across voice, messaging, and email, allowing smooth escalation to human agents with full conversation history.

Best For: Enterprises in retail, insurance, travel, and technology seeking scalable voice automation that integrates with IVR, CRMs, and ticketing systems while maintaining consistent omnichannel customer experiences.

4. Yellow AI

VoiceX is Yellow.ai’s enterprise-grade voice AI offering, designed to deliver autonomous, human-like voice conversations at scale, with deep context handling, multilingual support, and tight integration across omnichannel customer service stacks.

Key Features:

Autonomous Voice Conversations: VoiceX handles complex, multi-turn voice interactions using context retention, interruption handling, and intent switching, allowing up to 90% self-service without scripted IVR flows.

Multilingual, Human-Like Speech: Supports over 135 languages with dialect and tone customization, powered by DynamicNLP, achieving up to 97% intent accuracy across global customer bases.

AI-to-Human Continuity: Smoothly transfers calls from AI to human agents with full context, summaries, and real-time AI assist, improving agent productivity and reducing resolution times.

Best For: Large enterprises in BFSI, healthcare, retail, and utilities that require high-volume voice automation, omnichannel continuity, multilingual support, and measurable CSAT and cost improvements.

5. Kore AI

Kore.ai is an enterprise-grade agentic AI platform designed to build, orchestrate, and govern AI agents across voice, chat, search, and process automation, with a strong emphasis on security, scalability, and measurable business outcomes.

Key Features:

Agentic Voice AI: Native, low-latency voice AI agents handle interruptions, context shifts, and multi-turn conversations, allowing human-like voice interactions across global, high-volume contact center environments.

Multi-Agent Orchestration: Coordinates specialized AI agents dynamically, enforcing deterministic workflows for compliance-critical use cases while allowing autonomous resolution for routine customer and employee interactions.

Enterprise Knowledge + Action: Combines agentic RAG search with system actions, allowing AI agents to retrieve trusted enterprise knowledge, update records, schedule tasks, and complete workflows with full auditability.

Best For: Large enterprises in banking, healthcare, telecom, and retail that require secure, compliant, omnichannel AI agents capable of handling billions of interactions with governance, observability, and ROI tracking.

6. Echowin AI

Echowin is an AI receptionist and call automation platform that allows businesses to build, train, and deploy ultra-low-latency voice AI agents for phone calls, chat, and messaging channels within minutes.

Key Features Relevant Today

Ultra-Low Latency Voice AI: Sub-750ms response times with natural turn-taking and smart interruption handling, allowing real-time phone conversations that closely match human call center interactions.

No-Code Agent Training: Plain-English instruction-based agent training with automatic learning from websites, documents, and FAQs, eliminating flow builders and reducing setup time dramatically.

Action-Oriented Automation: Built-in tools and 7,000+ Zapier integrations allow agents to book appointments, send emails or SMS, update CRMs, route calls, and execute workflows during live calls.

Best For: SMBs and mid-market teams in healthcare, home services, automotive, legal, and retail that need 24/7 phone automation, multilingual support, and fast deployment without engineering overhead.

7. Replicant AI

Replicant is a contact-centre-focused voice AI platform that deploys human-like AI agents to autonomously resolve high-volume, tier-1 phone calls, with strict guardrails, enterprise reliability, and guaranteed ROI.

Key Features:

Human-Like Voice Resolution: AI agents use realistic voices, natural pacing, and interruption handling to resolve up to 80% of inbound calls across 30+ languages without IVR trees.

Deterministic, Hallucination-Free AI: Agents strictly follow enterprise-defined rules and workflows, guaranteeing brand safety, compliance, and predictable outcomes even during peak seasonal call volumes.

Conversation Intelligence at Scale: Automatically captures and analyzes 100% of voice, chat, and email interactions to surface QA insights, agent gaps, call drivers, and compliance risks in real time.

Best For: Large contact centers in insurance, healthcare, retail, travel, transportation, and financial services seeking to automate tier-1 calls, reduce agent attrition, and improve CSAT without increasing headcount.

8. Poly AI

PolyAI is an enterprise-grade, voice-first conversational AI platform designed to automate complex customer service calls using proprietary speech recognition, controlled LLM reasoning, and branded voice agents built for production contact centers.

Key Features:

Purpose-Built Speech Recognition: PolyAI uses customer-service-trained ASR with granular tuning controls, allowing accurate intent capture across accents, noise conditions, and real-world call variability.

Controlled LLM Reasoning Layer: A custom LLM adaptor enforces deterministic responses, function calling, and tool usage, preventing hallucinations while guaranteeing compliant, brand-safe automation.

Voice-First Omnichannel Architecture: Designed natively for phone conversations, PolyAI extends voice intelligence across channels while preserving conversational state, logic, and enterprise context.

Best For: Large enterprises running high-volume inbound call centers in regulated or customer-sensitive industries that require reliable voice automation with strict control, observability, and deep contact-center integrations.

Enterprise Voice AI & STT Platform Pricing Comparison

Platform | Pricing Model | Entry Price |

Tiered subscription + usage | Free → $49 → $1,999 | |

PolyAI | Usage-based | Custom |

Replicant | Flat annual license | Custom |

Ada | Usage-based enterprise | Custom |

Yellow.ai | Free + enterprise | Free tier available |

Decagon | Enterprise contract | Custom |

echowin | Subscription + usage | $49.99/month |

The top voice-to-text platforms are no longer transcription tools; they are real-time speech infrastructure layers designed for scale, compliance, and conversational reliability in production systems.

What to Look for in Voice-to-Text Software for Production Use

Selecting production-grade voice-to-text requires metrics and capabilities aligned with real-world enterprise workloads: low latency, domain adaptivity, reliable decoding under noisy conditions, and secure, scalable deployment.

Low Latency Streaming Output: Sub-100 ms partial text outputs with forward-looking re-scoring are critical for real-time conversational feedback loops.

Domain Lexicon and Biasing: Support for runtime phrase boosting and custom vocabularies for industry jargon, product SKUs, acronyms, and multilingual code-switching.

Robust Noise & Overlap Handling: Models trained on multi-speaker overlap and environmental noise that maintain low WER in telephony, kiosk, and field recordings.

Deployment Flexibility & Sovereignty: Options for on-premise or private VPC inference to meet data residency, compliance, and latency SLAs.

Integration & Observability APIs: Endpoints with webhook events, token-level logs, confidence scores, and real-time error diagnostics for operational monitoring.

See how real-time speech recognition, streaming inference, and action orchestration come together in production by exploring What Are AI Phone Agents and How They Work

Voice to Text Software for Real-Time Applications

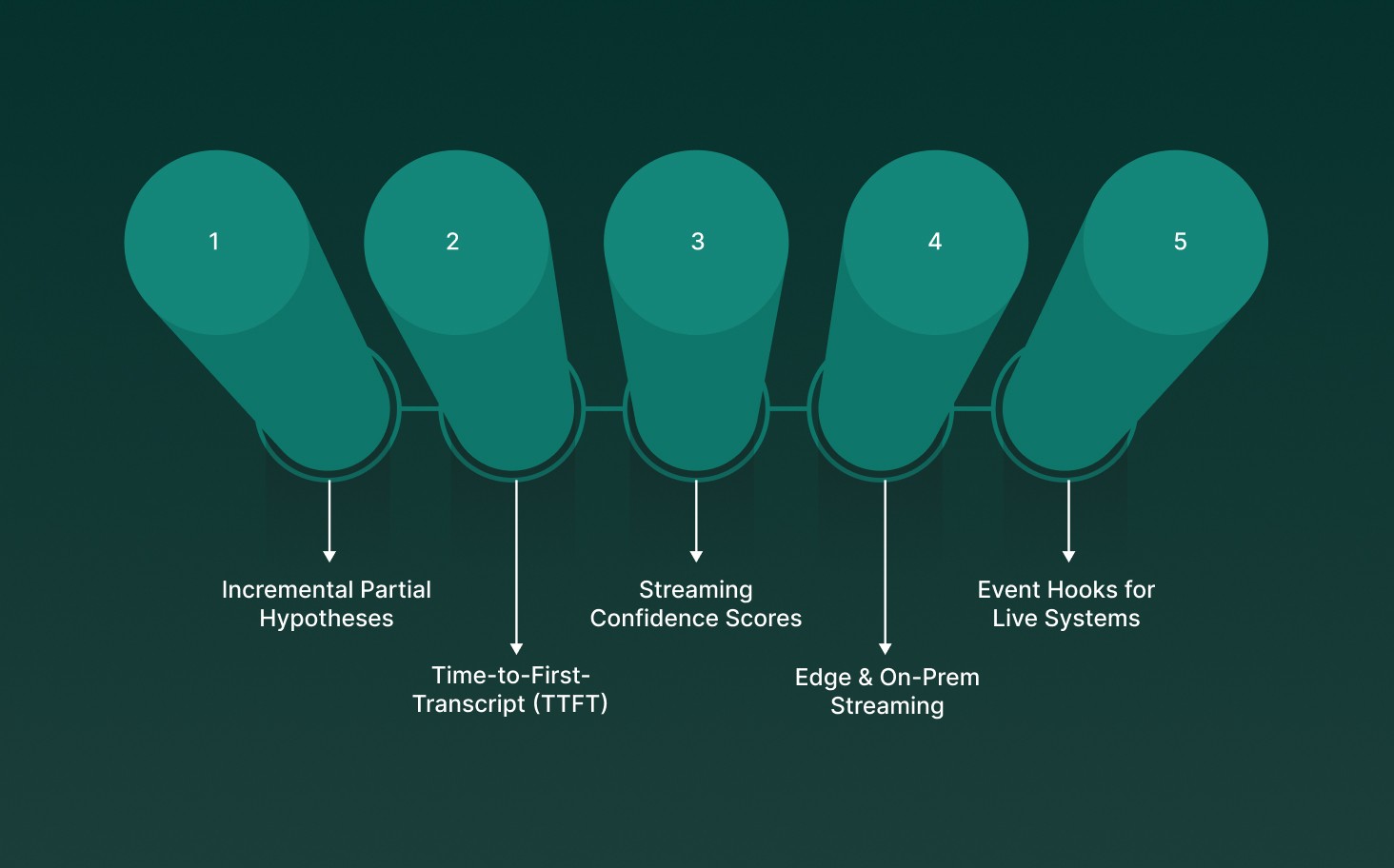

Real-time voice-to-text requires architectural support for streaming inference, partial hypotheses, incremental decoding, and integration with downstream systems for live interaction and operational workflows.

Incremental Partial Hypotheses: Continuously outputs intermediate text segments for real-time predictors, allowing agents and UI to react before utterance completion.

Time-to-First-Transcript (TTFT): Sub-70 ms TTFT guarantees perceptually instantaneous feedback in conversational and interactive environments.

Streaming Confidence Scores: Per-token confidence allows dynamic error correction, routing to fallback models, or escalation to live agents mid-conversation.

Edge & On-Prem Streaming: Local streaming inference reduces jitter and latency variance without a round-trip to centralized cloud STT endpoints.

Event Hooks for Live Systems: Real-time event callbacks, interim transcript streams, and WebSocket/GRPC support synchronize text with UI/agent workflows.

Understand how multilingual speech models handle accents, code-switching, and real-time inference at scale by reviewing Pre-trained Multilingual Voice Agent Models and Features

Why Infrastructure Matters More Than Apps

In voice-to-text systems, infrastructure determines latency ceilings, accuracy, stability, cost curves, and regulatory viability. Applications inherit these constraints and cannot compensate for weak inference, networking, or deployment layers.

Inference Path Determinism: Dedicated inference pipelines reduce jitter from shared compute, stabilizing decoding latency under peak concurrent streaming loads.

Model Lifecycle Control: Infrastructure governs version pinning, rollback safety, and controlled model upgrades without breaking downstream transcription workflows.

Data Residency Enforcement: On-prem and VPC isolation allow strict audio locality, retention control, and auditability required for regulated speech data.

Throughput Scaling Physics: GPU scheduling, batching strategy, and memory bandwidth define sustainable real-time concurrency limits, not application UX layers.

Failure Isolation Mechanics: Circuit breakers, model fallbacks, and health probes at the infrastructure level prevent cascading transcription failures during partial outages.

Voice-to-text reliability is infrastructure-bound. Applications surface value, but compute topology, streaming pipelines, and deployment control decide whether real-time speech systems hold under production pressure.

Final Thoughts!

Voice-to-text has shifted from a convenience feature to a core production dependency. The difference now shows up under load, during interruptions, and when accuracy must hold across accents, numbers, and real-time decisions. Systems that fail here introduce hidden costs that compound fast.

If you are building or scaling real-time voice workflows, infrastructure choice determines outcomes. Pulse STT is built for this layer, delivering production-grade speech intelligence designed for live systems rather than after-the-fact transcripts.

Talk to a voice AI expert and see how Smallest.ai performs in real production conditions.

Answer to all your questions

Have more questions? Contact our sales team to get the answer you’re looking for