Explore real-world AI voice recognition use cases for identity verification, fraud prevention, and secure live-call workflows across high-risk environments.

Nityanand Mathur

Updated on January 29, 2026 at 2:11 PM

Every time a call begins with “Can you verify your identity,” friction enters the conversation. Customers repeat details, agents pause workflows, and security teams worry whether the person on the line is who they claim to be. This moment is often when teams start searching for AI voice recognition as a way to confirm identity through voice instead of manual checks.

Interest in AI voice recognition often grows after fraud incidents or surging call volumes expose gaps in traditional verification. In 2024, the US Federal Trade Commission reported that phone-based impersonation scams led to losses exceeding $1.2 billion, which makes voice interactions a high-risk entry point that fraud prevention planning has to address. As a result, operations leaders and compliance teams look for voice-based identity signals that work during live conversations.

In this guide, we walk through how AI voice recognition functions, where it fits within voice systems, and how organizations apply it across real-world workflows.

Key Takeaways

Speaker Identity, Not Language: AI voice recognition focuses on identifying who is speaking using vocal traits, not interpreting spoken words.

Complementary to Speech Recognition: Voice recognition verifies identity, while AI speech recognition software solutions handle transcription and intent processing.

Built for Real-Time Conditions: Systems can verify speakers from short utterances and remain reliable across telephony, VoIP, and mobile channels.

Best Fit for High-Risk Voice Workflows: Use cases center on authentication, fraud detection, compliance tracking, and secure access during live calls.

Infrastructure Determines Scale: Successful deployment depends on low-latency audio handling and concurrent call support, not model accuracy alone.

What Is AI Voice Recognition Technology?

AI voice recognition refers to systems that identify a speaker based on voice characteristics rather than the meaning of spoken words. It focuses on who is speaking, using acoustic and behavioral signals, instead of what is being said. This capability is widely used in authentication, access control, and speaker-specific automation.

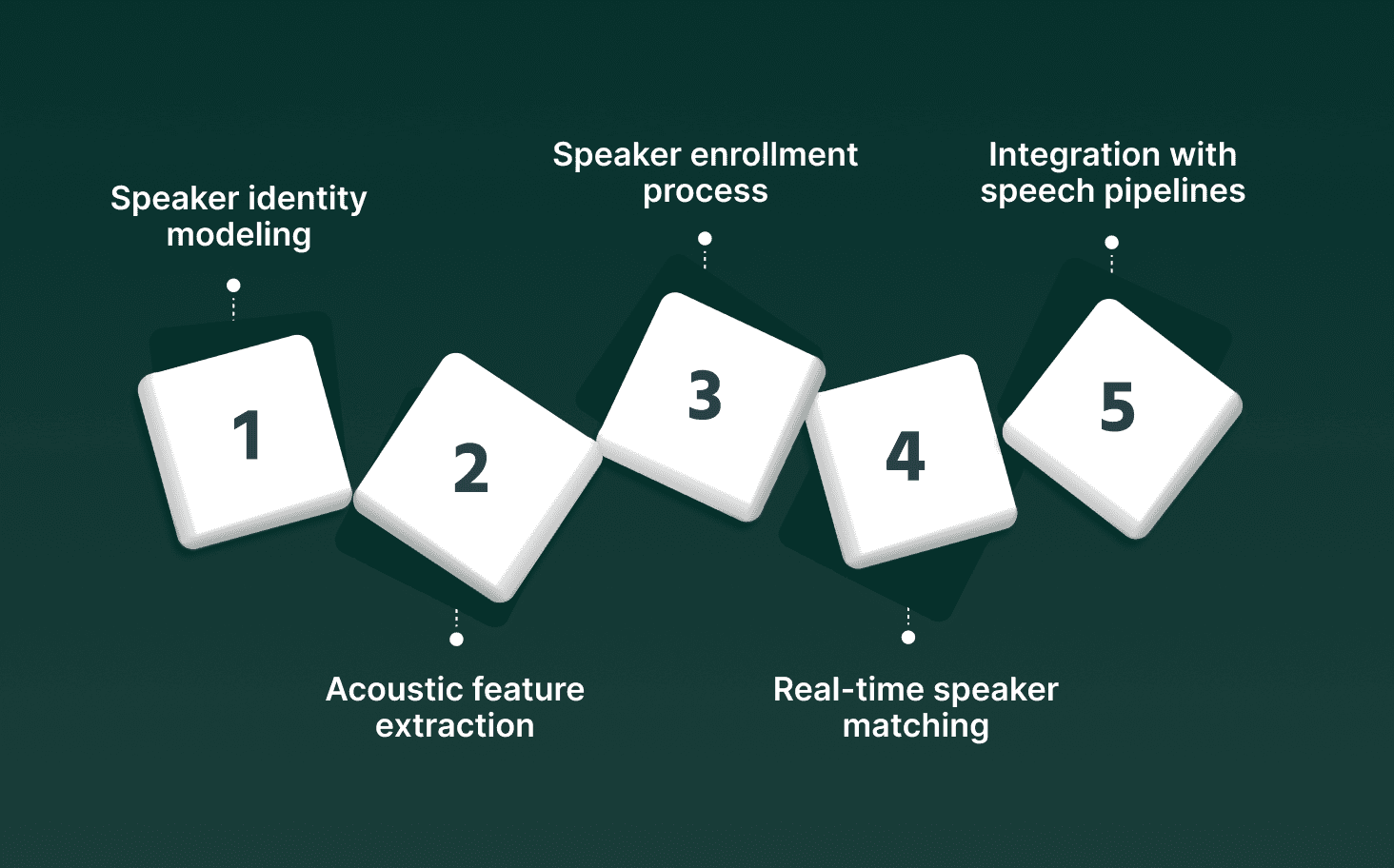

Key components of AI voice recognition include:

These components work together to convert raw audio into a usable speaker identity signal.

Speaker identity modeling: The system builds a voice profile using features such as pitch range, vocal tract shape, cadence, and frequency patterns that remain relatively stable for an individual.

Acoustic feature extraction: Raw audio is converted into measurable elements like Mel-frequency cepstral coefficients, formants, and spectral energy, which serve as inputs for machine learning models.

Speaker enrollment process: A verified voice sample is captured and stored as a reference template. This template is later compared during live interactions for matching or verification.

Real-time speaker matching: Incoming audio streams are analyzed and scored against enrolled voiceprints, often within milliseconds, supporting live call flows and secure access use cases.

Integration with speech pipelines: While distinct from AI speech recognition, voice recognition often operates alongside speech recognition in AI systems, especially where identity verification and transcription must occur together.

In practice, AI-powered voice recognition adds an identity layer to voice-driven workflows, helping systems confirm who is speaking before acting on spoken input.
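The enrollment and matching components described above can be sketched in a few lines. This is a minimal illustration, not a production design: it assumes an upstream model has already turned each audio sample into a fixed-length embedding, and the function names, the 128-dimension size, and the 0.7 threshold are all hypothetical choices made for the example.

```python
import math
import random

Vector = list[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def enroll(samples: list[Vector]) -> Vector:
    """Average unit-length enrollment embeddings into one reference template."""
    unit = [normalize(s) for s in samples]
    mean = [sum(col) / len(unit) for col in zip(*unit)]
    return normalize(mean)


def match_score(template: Vector, live: Vector) -> float:
    """Cosine similarity between the stored template and a live embedding."""
    return sum(t * x for t, x in zip(template, normalize(live)))


def verify(template: Vector, live: Vector, threshold: float = 0.7) -> bool:
    """Accept the identity claim when the score clears the (assumed) threshold."""
    return match_score(template, live) >= threshold


# Synthetic demo: random vectors stand in for real speaker embeddings.
rng = random.Random(0)
DIM = 128  # assumed embedding size


def noisy(v: Vector, scale: float = 0.1) -> Vector:
    """Simulate session-to-session variation by adding small Gaussian noise."""
    return [x + rng.gauss(0.0, scale) for x in v]


alice = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
template = enroll([noisy(alice) for _ in range(3)])  # three enrollment samples
same_speaker = noisy(alice)
other_speaker = [rng.gauss(0.0, 1.0) for _ in range(DIM)]

print(verify(template, same_speaker), verify(template, other_speaker))
```

In a real deployment the threshold would be tuned on held-out data for the target channel rather than fixed in code.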

If improving identity verification and call handling accuracy is a priority, explore how modern call flows connect securely with Interactive Voice Response System Solutions.

How AI Voice Recognition Works

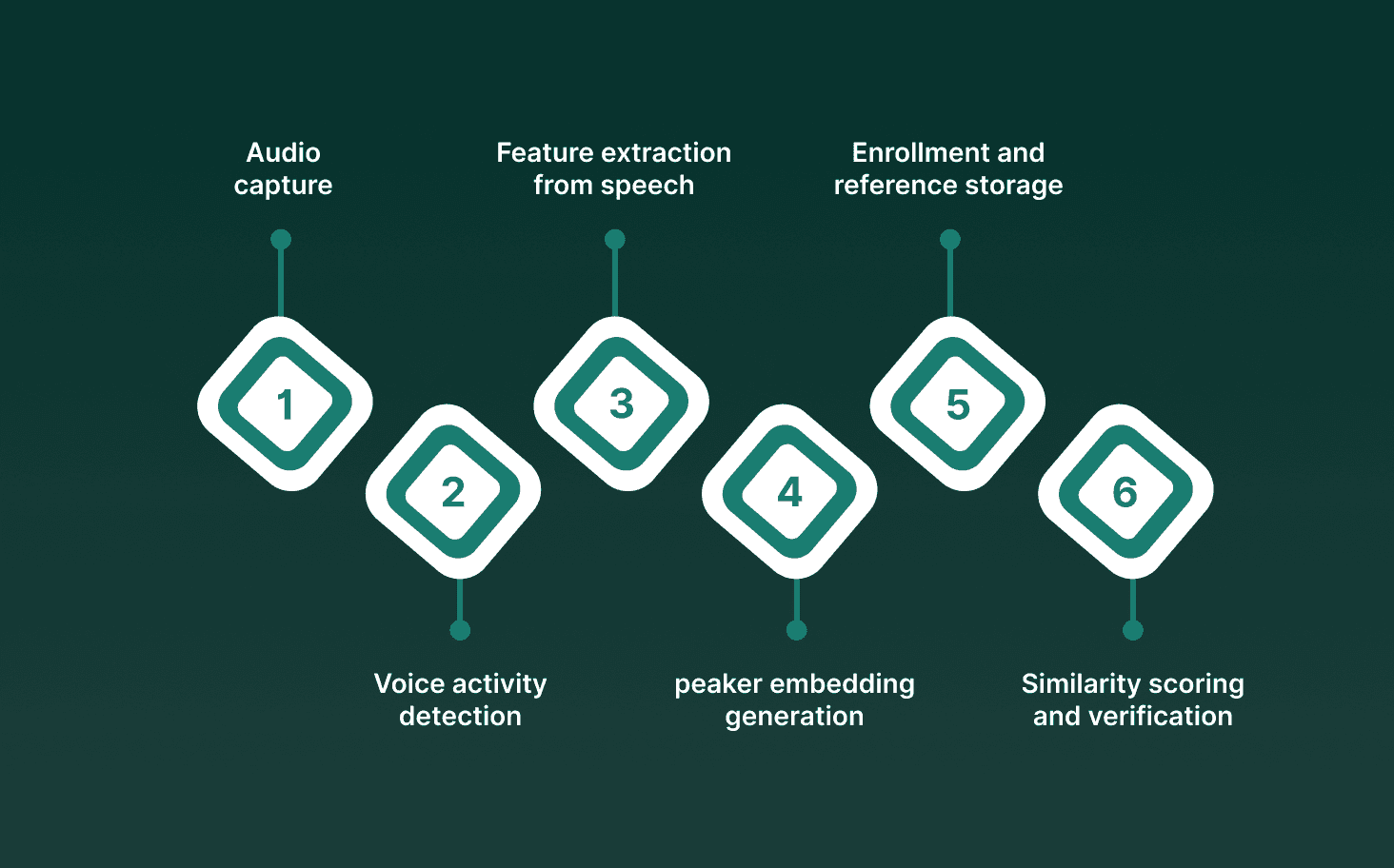

AI voice recognition operates through a multi-stage pipeline that converts live audio into speaker-specific signals, then matches those signals against known voice profiles. Each step is designed to preserve identity cues while accounting for channel noise, device variation, and natural changes in speech.

Audio capture and signal normalization: Spoken input is recorded through telephony, web, or mobile channels and normalized to a consistent sampling rate while filtering background noise, silence, and echo that can distort speaker markers.

Voice activity detection (VAD): The system isolates segments containing human speech and removes non-speech frames, which reduces false matches and improves recognition accuracy during short or interrupted utterances.

Feature extraction from speech: Acoustic features such as Mel-frequency cepstral coefficients, pitch contours, jitter, shimmer, and spectral envelopes are computed to represent how the voice is produced, not what words are spoken.

Speaker embedding generation: Deep neural models convert extracted features into compact numerical representations, often called embeddings, that uniquely represent an individual’s vocal signature across sessions.

Enrollment and reference storage: During enrollment, multiple voice samples are averaged to create a stable voiceprint, accounting for variability caused by emotion, speaking pace, or microphone quality.

Similarity scoring and verification: Live audio embeddings are compared against stored voiceprints using cosine similarity or probabilistic scoring, producing a confidence score used for acceptance, rejection, or further verification.
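The front-end stages of this pipeline (framing, voice activity detection, and feature extraction) can be sketched as follows. This is a simplified stand-in under stated assumptions: it uses a fixed energy threshold for VAD and a plain log power spectrum instead of full MFCCs, which are typically derived from such a spectrum. The function names, the -35 dB threshold, and the synthetic test signal are illustrative only.

```python
import cmath
import math


def frame_signal(samples, frame_len, hop):
    """Split samples into overlapping frames, dropping any ragged tail."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def energy_db(frame):
    """Mean-square energy in decibels; the floor avoids log10(0)."""
    mean_sq = sum(x * x for x in frame) / len(frame)
    return 10.0 * math.log10(max(mean_sq, 1e-12))


def speech_flags(frames, threshold_db=-35.0):
    """Energy-based VAD: True for frames louder than the threshold."""
    return [energy_db(f) > threshold_db for f in frames]


def log_power_spectrum(frame):
    """Hann-windowed log power spectrum via a naive DFT (fine for short frames)."""
    n = len(frame)
    windowed = [s * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)))
                for i, s in enumerate(frame)]
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(windowed))
        spectrum.append(10.0 * math.log10(max(abs(coeff) ** 2, 1e-12)))
    return spectrum


# Synthetic 8 kHz signal: 0.2 s silence, 0.2 s of a 200 Hz tone, 0.2 s silence.
SAMPLE_RATE = 8000
silence = [0.0] * 1600
tone = [0.5 * math.sin(2 * math.pi * 200 * t / SAMPLE_RATE) for t in range(1600)]
signal = silence + tone + silence

frames = frame_signal(signal, frame_len=200, hop=80)  # 25 ms frames, 10 ms hop
flags = speech_flags(frames)

# Keep only frames flagged as speech, then compute features for those frames.
voiced = [f for f, keep in zip(frames, flags) if keep]
features = [log_power_spectrum(f) for f in voiced]
print(len(frames), len(voiced))
```

Dropping non-speech frames before feature extraction keeps silence and line noise out of the embedding model's input, which is the point of the VAD step described above.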

In deployed systems, AI voice recognition often runs alongside speech recognition software, so a platform can confirm speaker identity while the speech recognizer handles transcription and intent detection.
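One way to run identity checks next to transcription is to launch both on the same audio chunk concurrently. The sketch below uses Python's `asyncio` for this. Both `verify_speaker` and `transcribe` are hypothetical stand-ins that simulate model latency and return canned values; they do not represent any particular vendor API.

```python
import asyncio


async def verify_speaker(audio_chunk: bytes) -> float:
    """Hypothetical stand-in for a speaker-verification call; returns a score."""
    await asyncio.sleep(0.01)  # simulated model latency
    return 0.91


async def transcribe(audio_chunk: bytes) -> str:
    """Hypothetical stand-in for a speech-to-text call; returns a transcript."""
    await asyncio.sleep(0.01)  # simulated model latency
    return "transfer fifty dollars to savings"


async def handle_utterance(audio_chunk: bytes, threshold: float = 0.8) -> dict:
    # Run both tasks concurrently so neither one delays the other.
    score, text = await asyncio.gather(
        verify_speaker(audio_chunk),
        transcribe(audio_chunk),
    )
    return {"verified": score >= threshold, "score": score, "text": text}


result = asyncio.run(handle_utterance(b"\x00" * 320))
print(result)
```

The downstream handler can then refuse to act on the transcript unless `verified` is true, which keeps the identity decision and the spoken request tied to the same utterance.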

For projects where speed and on-device security matter, start with the Top Lightweight AI Models for Edge Voice Solutions.

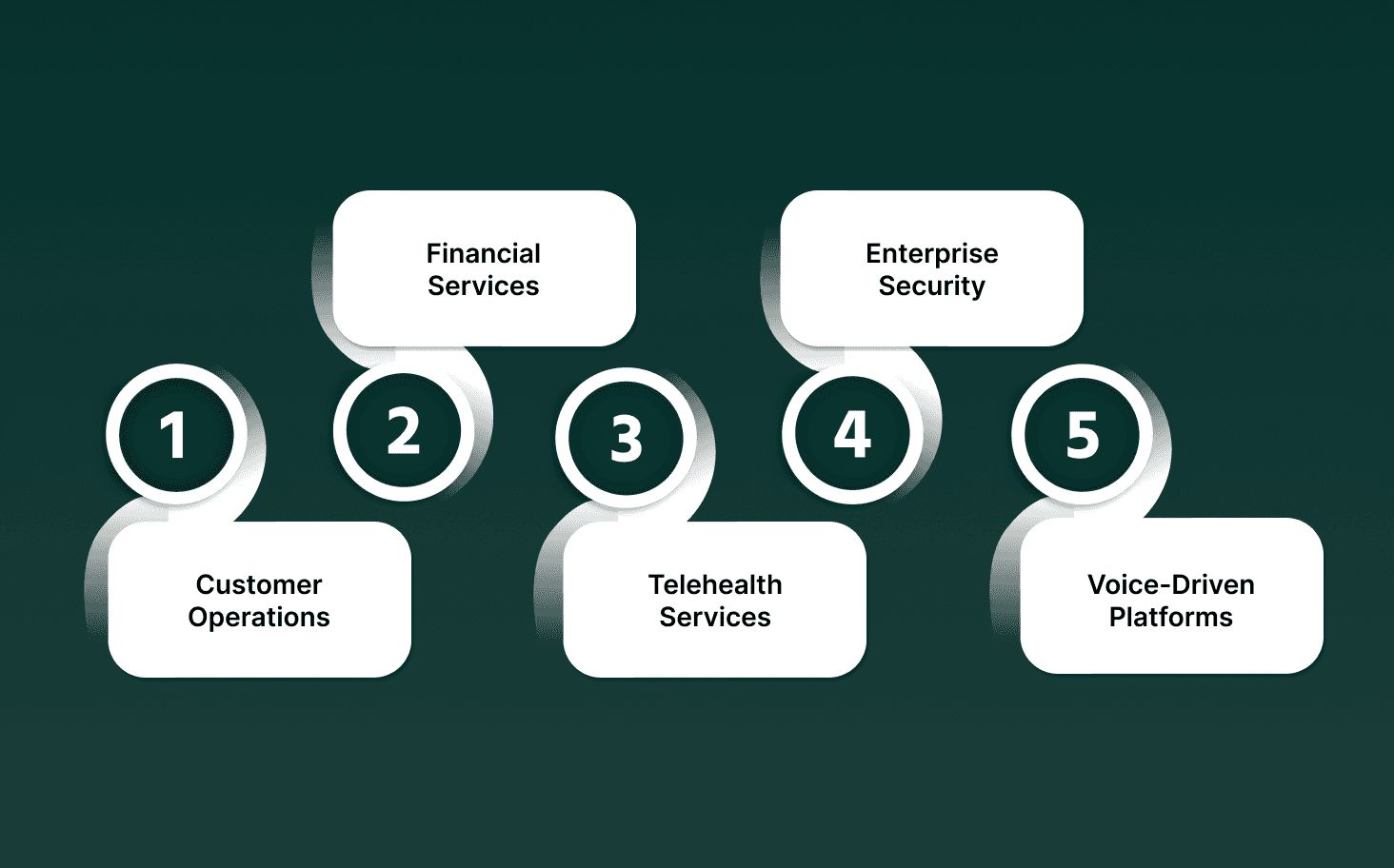

Top Applications of AI Voice Recognition Across Industries

AI voice recognition is applied in scenarios where verifying speaker identity through voice signals adds security, continuity, or operational control. These applications rely on stable vocal traits rather than spoken content, which separates them from AI speech recognition use cases.

1. Contact Centers and Customer Operations

In contact centers, AI voice recognition confirms caller identity early in the interaction, before any sensitive action takes place.

Voice-based customer authentication: Matches a caller’s live voice against an enrolled profile to verify identity without PINs, security questions, or agent involvement.

Fraud and impersonation detection: Flags mismatches when a different voice attempts to access an existing account, even if personal data is provided correctly.

Agent-assisted verification support: Provides agents with real-time confidence scores that guide whether to continue self-service or escalate verification.
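The confidence-score routing described above can be expressed as a small policy function. This is a sketch under assumptions: the score is taken to be a calibrated value between 0 and 1, and the two thresholds and the action names are illustrative, not recommended settings.

```python
from enum import Enum


class Action(Enum):
    CONTINUE_SELF_SERVICE = "continue_self_service"
    STEP_UP_VERIFICATION = "step_up_verification"
    ESCALATE_TO_AGENT = "escalate_to_agent"


def route_call(score: float, accept_at: float = 0.85,
               reject_below: float = 0.50) -> Action:
    """Map a speaker-match confidence score to the next step in the call."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= accept_at:
        return Action.CONTINUE_SELF_SERVICE
    if score < reject_below:
        return Action.ESCALATE_TO_AGENT
    # Scores in the middle band are ambiguous, so ask for another factor.
    return Action.STEP_UP_VERIFICATION


for s in (0.92, 0.70, 0.30):
    print(s, route_call(s).value)
```

Keeping a middle band avoids forcing a hard accept or reject on borderline audio, such as a caller on a noisy line.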

2. Financial Services and Regulated Transactions

Banks, lenders, and payment platforms use voice recognition as an added identity layer during voice interactions.

Secure account access: Validates the speaker before balance checks, payment approvals, or account changes during calls.

Call monitoring for compliance: Detects speaker switches during high-risk interactions such as loan confirmations or debt recovery calls.

Cross-session identity continuity: Recognizes returning customers across multiple calls, even when language or phrasing changes.

3. Healthcare and Telehealth Services

Healthcare providers apply AI-powered voice recognition where identity validation must occur quickly and without friction.

Patient identity confirmation: Confirms a patient before sharing records, appointment details, or clinical instructions over voice channels.

Clinician access control: Verifies medical staff before allowing system access during phone-based triage or documentation workflows.

Call audit support: Associates specific voice identities with actions taken during clinical or administrative calls.

4. Enterprise Security and Access Control

Enterprises use voice recognition as part of multi-factor or voice-first authentication systems.

Voice as a biometric factor: Supplements passwords or tokens with speaker verification during remote access or service desk calls.

Remote workforce validation: Confirms employee identity during internal helpdesk or IT support interactions.

Continuous verification during sessions: Checks that the same speaker remains present during sensitive conversations.
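Continuous verification can be approximated by scoring each short audio segment against the enrolled voiceprint and watching for a sustained drop. The sketch below assumes per-segment match scores are already available. The `patience` parameter, the 0.6 threshold, and the sample scores are hypothetical values chosen for illustration.

```python
from typing import Optional, Sequence


def detect_speaker_change(segment_scores: Sequence[float],
                          threshold: float = 0.6,
                          patience: int = 3) -> Optional[int]:
    """Return the index where a run of `patience` low scores begins, else None.

    Requiring several consecutive low scores avoids reacting to a single
    noisy segment, such as a cough or a burst of line noise.
    """
    run = 0
    for i, score in enumerate(segment_scores):
        run = run + 1 if score < threshold else 0
        if run == patience:
            return i - patience + 1
    return None


# One isolated dip at index 2, then a sustained drop starting at index 4.
scores = [0.90, 0.88, 0.55, 0.91, 0.40, 0.35, 0.30, 0.32]
print(detect_speaker_change(scores))
```

A larger `patience` value reduces false alarms at the cost of reacting more slowly when a different speaker takes over the call.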

5. Smart Devices and Voice-Driven Platforms

Consumer and enterprise devices rely on voice recognition to separate users sharing the same system.

User-specific access: Differentiates voices on shared devices to apply correct permissions or preferences.

Personalized system responses: Routes actions based on the recognized speaker profile rather than device-level settings.

Integration with speech pipelines: Works alongside AI speech recognition software solutions that handle transcription and command execution.

Across these use cases, AI voice recognition adds an identity layer that speech recognition alone does not provide, allowing voice interactions to proceed with verified speaker context.

Experience real-time voice performance, sub-100ms latency, and enterprise-grade security with voice agents built to scale. Try Smallest.ai today.

Voice Recognition vs Speech Recognition: Key Differences

Voice recognition and speech recognition solve two distinct problems within audio AI systems. One focuses on identifying the speaker, while the other converts spoken language into text. Although they often operate together, their models, inputs, and outputs serve different purposes.

| Aspect | AI Voice Recognition | AI Speech Recognition |
| --- | --- | --- |
| Primary purpose | Confirms who is speaking by matching vocal patterns to a known identity | Determines what is being said by converting speech into text |
| Core output | Speaker match score or identity decision | Text transcript with timestamps and confidence scores |
| Input focus | Acoustic characteristics such as pitch stability, resonance, cadence, and vocal tract shape | Phonemes, words, syntax, and language structure |
| Model training data | Labeled speaker samples recorded across varied conditions | Large-scale paired audio and text datasets |
| Sensitivity to language | Language-agnostic since voice traits persist across languages | Language-dependent and trained separately for each language |
| Typical use cases | Voice-based authentication, fraud prevention, access control, speaker tracking | Transcription, call analytics, voice search, and conversational interfaces |
| Interaction with other systems | Often precedes downstream actions like approvals or routing | Feeds NLP systems for intent classification and response generation |

In production environments, voice recognition validates speaker identity while speech recognition handles transcription, so downstream systems can act on spoken requests with verified speaker context.

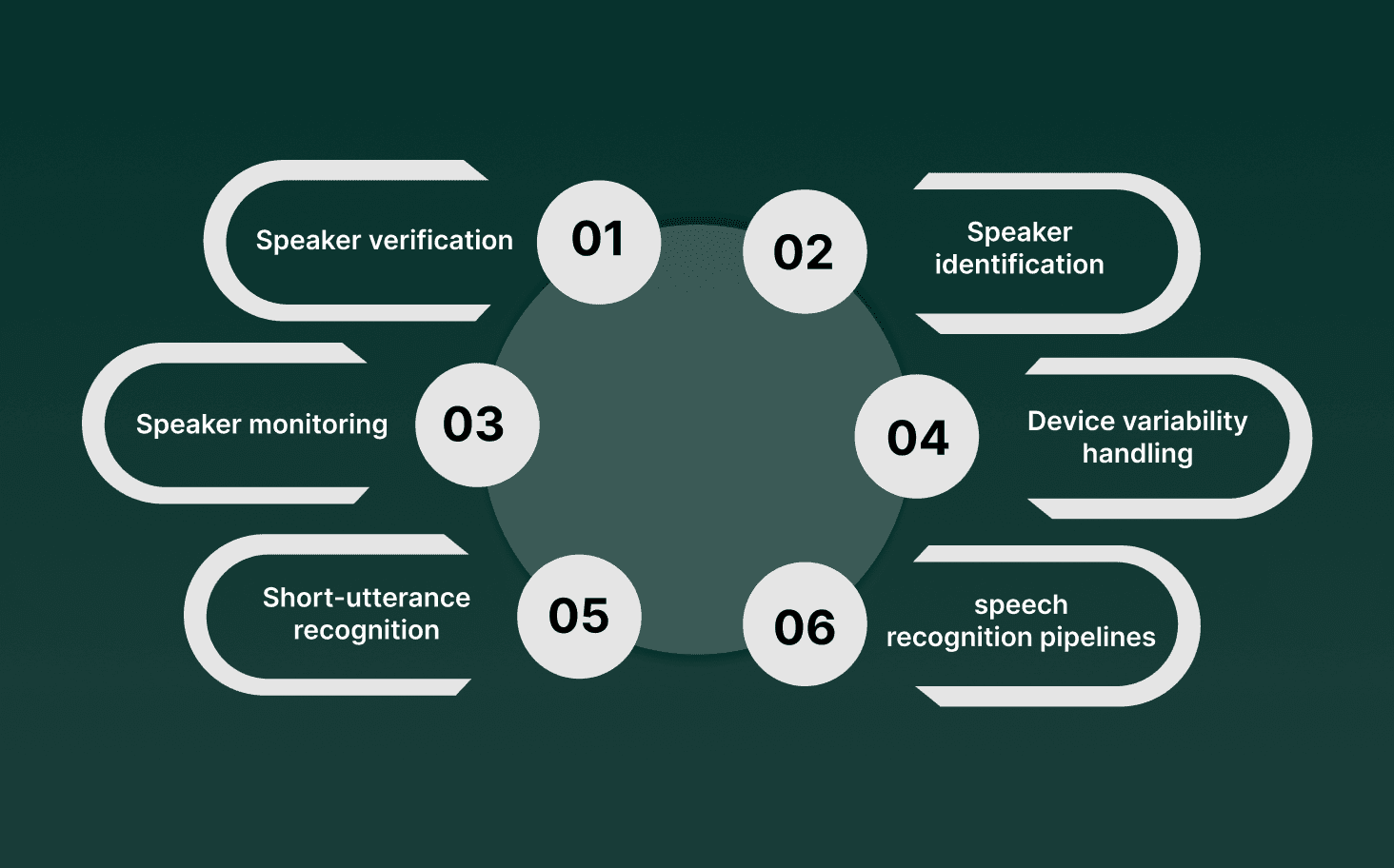

Core Capabilities of AI Voice Recognition Systems

AI voice recognition systems provide identity-aware controls within voice-driven workflows by analyzing speaker characteristics in real time. These capabilities are designed for environments where confirming who is speaking is as important as processing spoken content.

Speaker verification: Confirms a claimed identity by comparing live audio against an enrolled voiceprint, commonly used for secure login, account access, and authorization workflows.

Speaker identification: Determines the speaker from a predefined group without a prior identity claim, useful in call routing, compliance monitoring, and multi-speaker audio analysis.

Continuous speaker monitoring: Tracks the active speaker throughout a conversation to detect handoffs, interruptions, or unauthorized participants during live calls.

Channel and device variability handling: Maintains recognition reliability across landlines, mobile networks, VoIP calls, headsets, and varying microphone quality through normalization and adaptation techniques.

Short-utterance recognition: Performs identity matching from brief spoken segments, which is critical for real-time systems where long enrollment phrases are impractical.

Coexistence with speech recognition pipelines: Operates alongside speech recognition platforms, allowing identity checks to occur before or during transcription and intent handling.

When paired with speech recognition in enterprise systems, these capabilities support secure, low-latency voice interactions without disrupting natural conversation flow.
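Speaker identification differs from verification in that there is no claimed identity, so the live embedding is compared against every voiceprint in a group. A minimal sketch, assuming embeddings are already computed: the names, the three-dimensional toy vectors, and the 0.7 minimum score are illustrative only.

```python
import math
from typing import Optional


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cannot score a zero vector")
    return dot / (norm_a * norm_b)


def identify(live: list[float], enrolled: dict[str, list[float]],
             min_score: float = 0.7) -> tuple[Optional[str], float]:
    """Return the best-matching enrolled speaker, or None if no score is high enough."""
    if not enrolled:
        raise ValueError("no enrolled voiceprints to compare against")
    best_id, best_score = max(
        ((speaker_id, cosine(live, voiceprint))
         for speaker_id, voiceprint in enrolled.items()),
        key=lambda pair: pair[1],
    )
    if best_score < min_score:
        return None, best_score  # treat as an unknown speaker
    return best_id, best_score


# Toy 3-dimensional voiceprints; real embeddings have many more dimensions.
enrolled = {"agent_a": [1.0, 0.0, 0.0], "agent_b": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], enrolled))
print(identify([0.0, 0.0, 1.0], enrolled))
```

The minimum score matters here because a closed-set search always returns some nearest match, even for a voice that was never enrolled.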

Business Impact and Operational Value

AI voice recognition delivers measurable operational outcomes by reducing manual identity checks and adding automated speaker validation to voice workflows. Its value shows up in cost control, risk reduction, and faster call handling across high-volume environments.

Lower average handle time: Voice-based identity confirmation occurs early in the call, removing scripted security questions and reducing agent-led verification steps.

Reduced fraud exposure: Speaker mismatch detection blocks impersonation attempts even when personal data or account details are correctly stated.

Higher call completion rates: Callers complete self-service flows without being transferred to agents for identity checks, supporting faster resolution.

Consistent verification standards: Every call is evaluated using the same acoustic criteria, removing agent variability from authentication decisions.

Improved audit and traceability: Speaker-level tagging helps teams trace who performed or authorized actions during regulated voice interactions.

Scalable identity checks: AI-powered voice recognition scales across thousands of concurrent calls without adding headcount or increasing per-call effort.

By adding speaker awareness alongside speech recognition pipelines, organizations gain identity assurance that supports reliable, low-latency voice operations.

How Smallest.ai Supports AI Voice Recognition at Scale

Smallest.ai supports AI voice recognition by providing real-time audio infrastructure, low-latency voice processing, and enterprise-ready deployment controls that voice identity systems depend on. Its platforms act as the execution layer where speaker analysis, verification, and speech workflows operate together during live interactions.

Real-time audio streaming foundation: Waves delivers low-latency, streaming-grade audio generation and handling, which allows voice recognition models to process speaker signals without buffering delays during live calls.

Consistent voice signal quality: Stable audio output characteristics reduce variance across sessions, helping downstream AI-powered voice recognition models compare speaker traits reliably across calls.

Live call execution via Atoms: Atoms runs real-time voice agents that can trigger speaker verification checks at specific call stages such as greeting, authorization steps, or transaction approval moments.

API-driven orchestration: Developer APIs allow voice recognition logic to plug into call flows programmatically, coordinating with AI speech recognition software solutions for transcription and intent handling.

Enterprise deployment support: Platforms are built to operate across contact centers, outbound calling, and regulated environments where speech recognition systems must operate under strict latency and reliability requirements.

Scalable concurrent call handling: Architecture supports high call volumes, allowing speech recognition and speaker validation to run in parallel across large operational workloads.

By combining real-time voice execution with programmable control layers, Smallest.ai provides the infrastructure needed to run AI voice recognition alongside speech recognition systems in production environments.

Conclusion

Voice has become a primary entry point for customer access, transactions, and sensitive requests. As voice channels scale, identity checks must keep pace without slowing conversations or shifting risk back to agents. This is where AI voice recognition fits naturally into modern voice systems, adding speaker awareness without interrupting how people speak or interact.

For teams evaluating AI voice recognition, the real question centers on execution at scale. Live calls demand low latency, stable audio handling, and precise coordination between identity checks and speech processing. Without the right real-time infrastructure, even accurate models struggle in production environments.

Smallest.ai provides the real-time voice foundation that identity-aware systems rely on, supporting live call execution, programmable workflows, and high-volume operations where voice verification and speech processing run side by side.

Explore how Smallest.ai helps run voice-driven systems reliably at scale. Get a demo!

FAQs About AI Voice Recognition

1. Can AI voice recognition work effectively with very short voice samples?

Yes. Modern AI voice recognition systems can verify a speaker using short utterances by focusing on stable vocal traits like pitch behavior and spectral patterns, even when AI speech recognition outputs minimal text.

2. Does AI voice recognition require the same training data as speech recognition in AI?

No. Speech recognition in AI relies on paired audio and text datasets, while AI-powered voice recognition uses labeled speaker audio recorded across channels, devices, and conditions without needing transcripts.

3. How do AI speech recognition software solutions interact with voice recognition systems?

In production systems, AI speech recognition software solutions handle transcription and intent detection, while voice recognition models run alongside them to confirm speaker identity before actions like authentication or approvals occur.

4. Can AI offer speech recognition and voice recognition together in real-time calls?

Yes. Many real-time platforms support pipelines where speech recognition handles live transcription, while AI voice recognition runs in parallel to validate speaker identity within the same interaction.

5. Does language affect the accuracy of AI voice recognition?

Only marginally. Voice recognition analyzes how speech is produced rather than which words are spoken, so its accuracy stays largely consistent across languages. AI speech recognition, by contrast, depends on language-specific acoustic and linguistic models.